In collaboration with the Internet Archive, the Visual Explorer extracts one frame every 4 seconds from each broadcast to create a "visual ngram" that non-consumptively captures the core visual narratives of the broadcast. What if we took all of those images for a given Russian TV news broadcast and compared each image to every other image in that broadcast based on pixel-level visual similarity (using a perceptual hash)? The result would allow us not only to identify contiguous sequences (marking "shot changes") but, more importantly, to identify repeated content that appears multiple times throughout a broadcast, from a clip aired at several different points in the broadcast to repeated advertisements.

Let's apply this analysis to an episode of Russia 1's 60 Minutes that aired on Monday, March 13, 2023.

First, we download the broadcast's preview images (one every four seconds) from the Visual Explorer, unzip them and count them:

```shell
wget https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/RUSSIA1_20230313_083000_60_minut.zip
unzip RUSSIA1_20230313_083000_60_minut.zip
find RUSSIA1_20230313_083000_60_minut -depth -name "*.jpg" | wc -l
```

This broadcast contains 2,265 frames. Clustering the entire broadcast takes just the following two commands, using an off-the-shelf tool called "findimagedupes":

```shell
apt-get -y install findimagedupes
time find ./RUSSIA1_20230313_083000_60_minut/ -depth -name "*.jpg" -print0 | findimagedupes -f ./FINGERPRINTS-RUSSIA1_20230313_083000_60_minut.db -q -q -t 95% -0 -- - > MATCHES.RUSSIA1_20230313_083000_60_minut
```

Remarkably, despite computing perceptual hashes for 2,265 images and comparing every image to every other image (2,265 × 2,264 / 2 = 2,563,980 pairs), the entire process from start to finish takes just 21 seconds on a 64-core VM. Note that findimagedupes can save its fingerprints to an on-disk database, which we enable here with the -f option. This means that in the future we can hand it a new image or set of images and compare them against this broadcast without recomputing all of the fingerprints; in practice, new images are compared in 0.1 seconds or less.

Here we set the similarity threshold (-t) to 95%, an extremely high value that captures only near-duplicates. Lowering it would also capture images that are "similar" but not identical.
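To make the threshold more concrete, here is a minimal sketch of how a percentage similarity can be derived from two bit fingerprints. It is not findimagedupes' internal code: it assumes, for illustration only, that similarity is the share of fingerprint bits that agree (100% minus the normalized Hamming distance) and that fingerprints are written as hex strings. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Illustrative sketch, not findimagedupes' internal code: similarity between
# two equal-length hex fingerprints, ASSUMED here to be the percentage of
# bits that agree (i.e. 100% minus the normalized Hamming distance).
fingerprint_similarity() {
  local a=$1 b=$2 i x diff=0
  if (( ${#a} == 0 || ${#a} != ${#b} )); then
    echo "fingerprints must be non-empty and the same length" >&2
    return 1
  fi
  local nbits=$(( ${#a} * 4 ))          # each hex digit holds 4 bits
  for (( i = 0; i < ${#a}; i++ )); do
    # XOR the i-th hex digit of each fingerprint; set bits mark differences
    x=$(( 16#${a:i:1} ^ 16#${b:i:1} ))
    while (( x > 0 )); do               # count the set bits
      diff=$(( diff + (x & 1) ))
      x=$(( x >> 1 ))
    done
  done
  echo $(( 100 * (nbits - diff) / nbits ))   # integer percent, rounded down
}

# Hypothetical helper mirroring the 95% cutoff passed to -t above
is_near_duplicate() {
  local sim
  sim=$(fingerprint_similarity "$1" "$2") || return 1
  (( sim >= 95 ))
}
```

For example, `fingerprint_similarity 0f3a 0f3b` prints 93, since 1 of 16 bits differs. Under this assumed definition, two 256-bit fingerprints could differ in up to 12 bits and still clear 95% (244/256 ≈ 95.3%), while 13 differing bits would fall just below it (≈ 94.9%), which shows how tight a 95% threshold is.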

The end result is a text file called "MATCHES.RUSSIA1_20230313_083000_60_minut" in which each line is one group of image filenames, separated by spaces, that the tool judged to be above the similarity threshold based on its perceptual hash of each image.
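A quick way to get a feel for the matches file is to tally how many groups of each size it contains. This sketch relies only on the one-group-per-line, space-separated format described above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Tally match groups by size in a findimagedupes output file, where each
# non-empty line is one group of space-separated filenames (so NF = group size).
# Prints "group_size number_of_groups", smallest groups first.
group_size_histogram() {
  awk 'NF { count[NF]++ } END { for (n in count) print n, count[n] }' "$1" | sort -n
}
```

Usage: `group_size_histogram MATCHES.RUSSIA1_20230313_083000_60_minut`. The final `sort -n` is needed because awk's `for (n in count)` visits array keys in no guaranteed order.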

We then process the matches file with a simple Perl script (listed at the end of this post) that separates the groups into contiguous sequences of frames (i.e., a single camera shot) and groups with gaps between their images, which suggest a clip, camera angle or advertisement that the broadcast returns to.

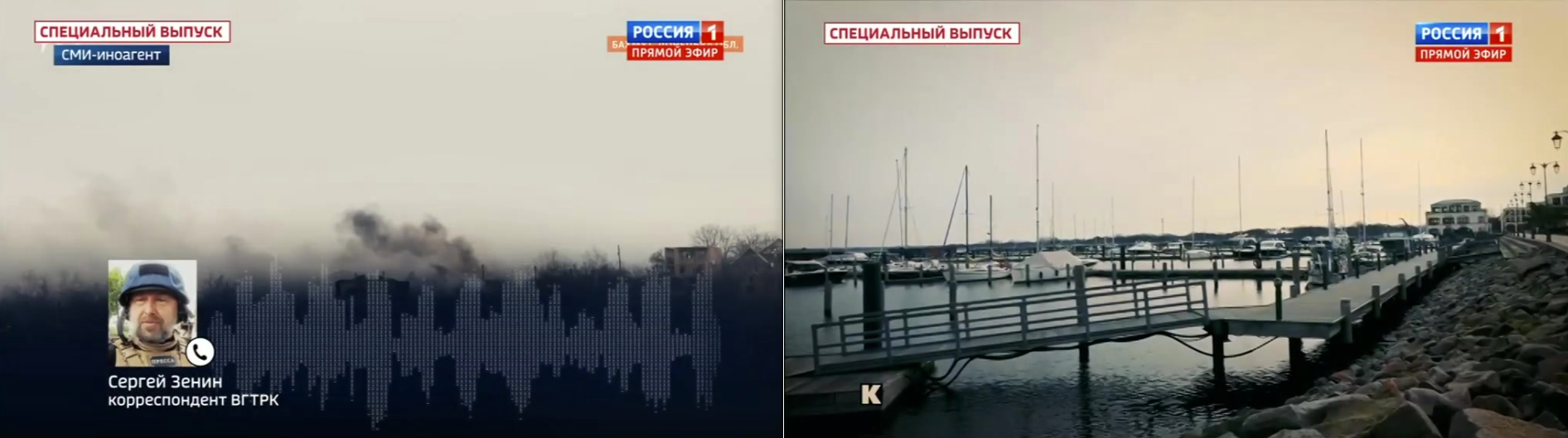

For example, here is an advertisement that aired twice, at 12:46PM and 1:02PM, which the workflow clustered together:

And another ad that aired three times:

At the same time, even a 95% similarity threshold can yield false positives, such as these two images with surprisingly similar color schemes, saturation and layouts. A closer look, however, suggests the use of a saturation filter, hinting that there may be more to this similarity than pure coincidence:

Sequences with large gaps are strongly suggestive of advertisements, making this a potential workflow for identifying key advertising trends on Russian television news and tracking how the ad economy is adjusting in the face of global sanctions. The ability to identify strikingly similar images and palettes suggests that other forms of analysis, such as histogram-based palette analysis, filter detection, and texture and structural analyses, could all offer powerful insights into the visual narratives of Russian television news.
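Tracking ads over time requires turning frame numbers back into airtimes. Here is a minimal sketch under three assumptions that the workflow above does not state: frame 000001 is captured at the broadcast's start, frames are exactly 4 seconds apart, and the "083000" in the filename is the start time in UTC. The function and variable names are hypothetical.

```shell
#!/usr/bin/env bash
# ASSUMPTIONS (not confirmed by the original workflow): frame 000001 is the
# broadcast's first instant, frames are exactly 4 seconds apart, and the
# "083000" in RUSSIA1_20230313_083000_60_minut is the UTC start time.
START_SECS=$(( 8 * 3600 + 30 * 60 ))   # 08:30:00 UTC as seconds after midnight
FRAME_INTERVAL=4                        # seconds between preview frames

# Print the approximate UTC time-of-day at which a zero-padded frame aired
frame_to_utc() {
  local frame=$(( 10#$1 ))   # force base 10 so "000089" is not parsed as octal
  local secs=$(( START_SECS + (frame - 1) * FRAME_INTERVAL ))
  printf '%02d:%02d:%02d\n' $(( secs / 3600 % 24 )) $(( secs / 60 % 60 )) $(( secs % 60 ))
}
```

For example, `frame_to_utc 001153` and `frame_to_utc 001387` print 09:46:48 and 10:02:24. Adding Moscow's UTC+3 offset gives 12:46 and 13:02, which would be consistent with the twice-aired advertisement above if that ad is the 001153/001387 pair in the gap listing below.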

Perhaps most critically, the efficiency of findimagedupes, which pairwise compares an entire broadcast in just 21 seconds on a 64-core CPU-only system, resets expectations about how tractable it is to scale such analyses to ever-larger volumes of content.

For those interested in exploring further, the following Perl script processes the above MATCHES file:

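```perl
#!/usr/bin/perl
# Split findimagedupes match groups into three output files:
#   <input>.seq       groups of consecutive frames (a single shot)
#   <input>.gap       groups with gaps between frames (recurring content)
#   <input>.crossshow groups that span more than one broadcast
use strict;
use warnings;

my $in = $ARGV[0] or die "usage: $0 MATCHES_FILE\n";
open(my $file, '<', $in)             or die "cannot read $in: $!\n";
open(my $outs, '>', "$in.seq")       or die "cannot write $in.seq: $!\n";
open(my $outg, '>', "$in.gap")       or die "cannot write $in.gap: $!\n";
open(my $outc, '>', "$in.crossshow") or die "cannot write $in.crossshow: $!\n";

while (my $line = <$file>) {
    # One group of similar images per line; sorting puts frames in order
    my @arr = sort { $a cmp $b } split ' ', $line;
    next unless @arr;
    my ($lastshow, $lastframe, $hasgap, $crossshow) = ('', 0, 0, 0);

    for my $i (0 .. $#arr) {
        # Filenames look like .../SHOW-000123.jpg: capture show ID and frame number
        my ($show, $frame) = $arr[$i] =~ m{.*/(.*?)-(\d+)\.jpg};
        die "unexpected filename: $arr[$i]\n" unless defined $frame;
        $arr[$i] = $frame;    # keep only the zero-padded frame number

        # A gap is a jump of more than one frame (or a change of show)
        $hasgap    = 1 if $i > 0 && ($show ne $lastshow || $frame > $lastframe + 1);
        $crossshow = 1 if $i > 0 && $show ne $lastshow;
        ($lastshow, $lastframe) = ($show, $frame);
    }

    if ($crossshow) {
        print $outc "@arr\n";
    } elsif ($hasgap) {
        # Span between the first and last matching frame, in frames
        my $gap = $arr[-1] - $arr[0];
        print $outg "$gap\t@arr\n";
    } else {
        print $outs "@arr\n";
    }
}

close($_) for ($file, $outs, $outg, $outc);
```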

This generates three files: one containing any cross-show matches (.crossshow; not relevant here, since we analyze a single broadcast), one containing sequential matches, i.e., unbroken runs of frames (.seq), and one containing matches with gaps between them (.gap).

Matches with gaps between them, with the first column giving the span in frames from the first to the last matching frame (frames are 4 seconds apart):

```
4	001654 001656 001657 001658
20	001602 001622
1049	001176 001384 001415 002009 002194 002225
818	001397 001994 002215
2	000238 000240
4	000590 000591 000593 000594
948	000637 001585
2	002071 002073
14	000522 000523 000524 000525 000532 000533 000534 000535 000536
6	000816 000819 000822
5	000057 000062
30	001204 001205 001234
4	002143 002147
6	000871 000877
814	001389 002203
10	001467 001477
7	000665 000672
5	001630 001635
3	000508 000511
2	000367 000369
12	001469 001474 001475 001481
2036	000020 002056
707	001041 001748
1043	001159 002202
33	001051 001084
818	001368 002006 002186
597	001398 001995
34	001268 001302
1043	001161 002204
18	000423 000441
234	001153 001387
5	000614 000618 000619
917	000197 001049 001052 001054 001083 001108 001109 001110 001112 001113 001114
638	001369 002007
7	001218 001220 001222 001225
29	000417 000418 000420 000424 000427 000429 000430 000432 000443 000446
223	001191 001414
7	000058 000063 000064 000065
12	002114 002121 002122 002123 002124 002125 002126
3	002117 002120
2	001088 001090
5	000277 000282
12	002096 002108
6	001470 001476
6	001242 001243 001248
179	002008 002187
26	000993 001019
```
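Because large gaps are the strongest hint of advertisements, one way to triage this file is to keep only groups whose span exceeds some cutoff and to express spans in minutes. This is a sketch assuming the tab-separated .gap format written by the Perl script and a 4-second frame interval; the function name and the 150-frame (10-minute) default cutoff are arbitrary choices for illustration, not part of the original workflow.

```shell
#!/usr/bin/env bash
# Keep .gap groups whose first-to-last span is at least MIN_FRAMES frames,
# longest first, printing "span_frames<TAB>span_minutes<TAB>frames".
# Assumes the tab-separated .gap format written by the Perl script and a
# 4-second frame interval; the 150-frame (10-minute) default is arbitrary.
long_gap_groups() {
  local gapfile=$1 min_frames=${2:-150}
  awk -F'\t' -v min="$min_frames" \
    '$1 >= min { printf "%d\t%.1f\t%s\n", $1, $1 * 4 / 60, $2 }' "$gapfile" |
    sort -t $'\t' -k1,1nr
}
```

Usage: `long_gap_groups MATCHES.RUSSIA1_20230313_083000_60_minut.gap`. On the listing above, the top entry would be the 2036-frame group, a span of about 135.7 minutes.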

Similarly, sequential matches:

```
002069 002070
000352 000353 000354
002088 002089 002090
000318 000319
000361 000362
000575 000576 000577 000578
001765 001766 001767 001768 001769
000339 000340 000341
000364 000365
000358 000359 000360
000156 000157 000158
000615 000616
000251 000252
001251 001252
000235 000236 000237
001853 001854 001855 001856 001857 001858 001859 001860 001861 001862 001863 001864 001865 001866 001867 001868
001439 001440
001697 001698
000596 000597
000331 000332
001972 001973
000580 000581
001487 001488
001548 001549
001649 001650
000173 000174
000435 000436 000437 000438 000439
000586 000587 000588
001551 001552 001553 001554 001555 001556
001560 001561 001562
000186 000187
000283 000284
000259 000260 000261 000262
001669 001670 001671 001672
002132 002133
001525 001526
000406 000407 000408 000409 000410
000911 000912
000570 000571 000572
000882 000883
000854 000855 000856 000857 000858 000859 000860 000861 000862 000863 000864 000865 000866 000867 000868
001181 001182
000373 000374
001212 001213
001617 001618
001826 001827 001828 001829 001830 001831 001832 001833 001834 001835 001836
001199 001200 001201
001528 001529
000474 000475
000089 000090
000083 000084
000337 000338
000350 000351
001673 001674
000219 000220 000221 000222 000223 000224 000225 000226
000888 000889
```
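The sequential file can likewise give rough shot durations, since a run of N consecutive frames covers roughly N × 4 seconds. A sketch under that same 4-second assumption, reading the .seq file written by the Perl script; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Rank contiguous shots in the .seq file by approximate duration.
# Each line is a run of N consecutive frames, ASSUMED 4 seconds apart, so it
# covers roughly N * 4 seconds. Prints "seconds<TAB>first_frame<TAB>last_frame".
longest_shots() {
  local seqfile=$1 top=${2:-5}
  awk 'NF { printf "%d\t%s\t%s\n", NF * 4, $1, $NF }' "$seqfile" |
    sort -t $'\t' -k1,1nr | head -n "$top"
}
```

Usage: `longest_shots MATCHES.RUSSIA1_20230313_083000_60_minut.seq 3`. In the listing above, the longest run is 001853 through 001868: 16 frames, or roughly 64 seconds.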

We hope this inspires you to explore new kinds of visual analyses on this collection!

This analysis is part of an ongoing collaboration between the Internet Archive and its TV News Archive, the multi-party Media-Data Research Consortium and GDELT.