As we continue to explore how journalists and scholars can make sense of our ever more unstable world, we've examined how multimodal image embeddings can be used to visualize an entire day of television news from Russia and from Iran, identifying the visual narrative landscapes of their coverage. Let's apply the same approach to an entire day of a single channel of Chinese television news: CCTV-13.

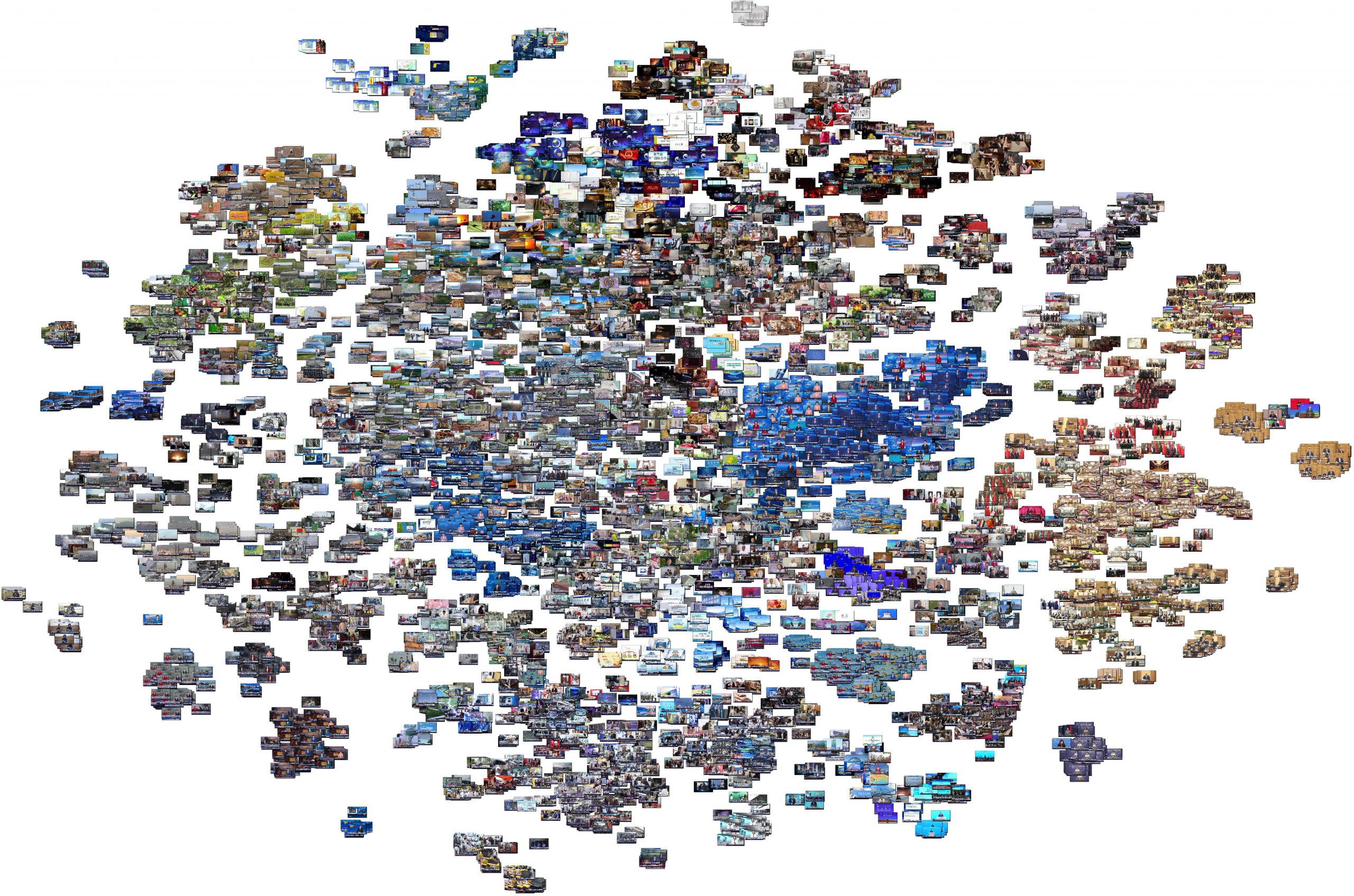

Using the exact same code and workflow as before, we use GCP's Vertex AI Multimodal Embedding model to compute embedding representations of one frame every four seconds from the complete 24 hours of coverage October 17, 2023 (in UTC timezone). The embeddings were then clustered and visualized using PCA (which attempts to preserve macro-level trends) and t-SNE (which attempts to cluster images more tightly to yield more strongly-defined micro-level patterns and clusters). You can see the final images below.

Both the global-scale PCA and micro-scale t-SNE layouts are more structured and stratified than Iranian television and more similar to Russian television, with clearly-defined thematic and topical clustering.

PCA

t-SNE