Embeddings can be highly sensitive to input length: near-identical texts can yield very different embeddings depending on how long they are. Just how stark is this effect, and does it meaningfully affect clustering? To explore this question, we'll use our embedding visualization template to cluster the one-word sentence "dog." repeated from one to 11 times. This synthetic benchmark has a downside: it produces an unrealistic token sequence that might trigger edge cases in a model's knowledge store (especially the LLM-based Gecko). Its benefit is that it introduces no confounding factors, such as additional textual detail that might legitimately yield different scores. For example, "dog" and "the dog ran fast" should produce different embeddings, because running and speed add meaning that "dog" alone lacks.

We'll use the same six models as before: the English-only USEv4; the larger English-only USEv5-Large; the 16-language USEv3-Multilingual and its larger sibling USEv3-Multilingual-Large (both supporting Arabic, Chinese-simplified, Chinese-traditional, English, French, German, Italian, Japanese, Korean, Dutch, Polish, Portuguese, Spanish, Thai, Turkish and Russian); the 100-language LaBSEv2, optimized for scoring translation pairs; and the Vertex AI Embeddings for Text API.

All six models strongly stratify the sentences by length and all isolate "dog." on its own. In every model, the distance between successive lengths shrinks dramatically as length increases, so the longest sentences become nearly indistinguishable from one another. Overall, in this synthetic benchmark, input length has an extreme impact on embedding distance.

```python
sentences = [
    "dog.",
    "dog. dog.",
    "dog. dog. dog.",
    "dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog. dog. dog. dog.",
]
```
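Each matrix in this section lists pairwise similarity scores between the sentences, in list order. The sketch below shows one way to compute such a matrix. It assumes cosine similarity between embedding vectors. The diagonal of roughly 1.0 is consistent with that assumption, but the template's exact scoring is not stated here. `embed` is a hypothetical stand-in for whichever model is being tested.

```python
import numpy as np

def cosine_similarity_matrix(embeddings: np.ndarray) -> np.ndarray:
    """Return the pairwise cosine similarity between the rows of `embeddings`."""
    # L2-normalize each row so that a plain dot product equals cosine similarity.
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    unit = embeddings / norms
    return unit @ unit.T

# Hypothetical usage: `embed` is not a real API here; it stands in for a call to
# USE, LaBSE, Vertex AI, etc. that returns one vector per sentence.
# sims = cosine_similarity_matrix(np.asarray(embed(sentences)))
```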

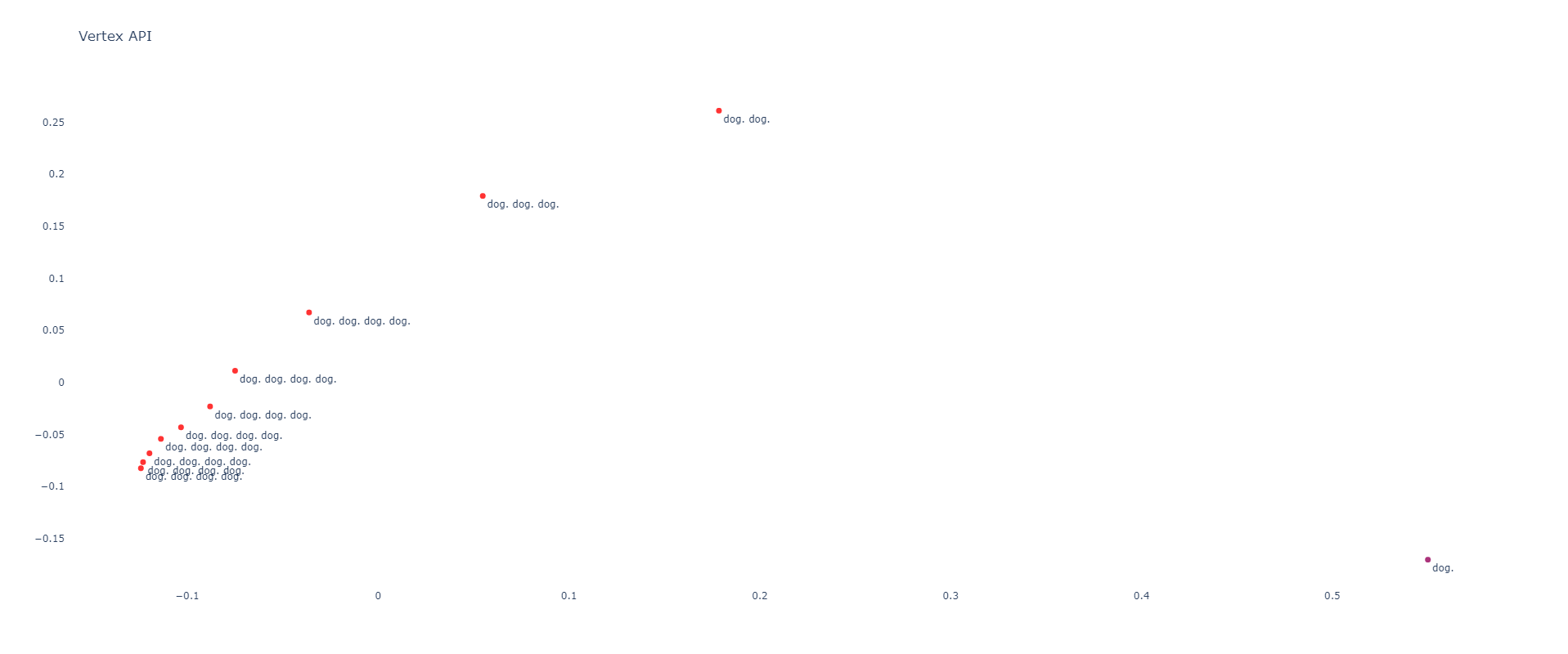

Vertex AI

Vertex places the single word "dog." on its own and arranges the repeated-word sentences in a broad arc, with the distance between successive lengths falling rapidly as the number of repetitions grows. This means that a search for "dog. dog. dog. dog. dog." would score 0.98 or higher against every longer sentence, while a search for just "dog." scores no higher than 0.83 against any other sentence. In the matrix below, rows and columns follow the order of the sentence list (1 to 11 repetitions):

```
[[0.99999975 0.82641526 0.80889414 0.79397982 0.78533461 0.78430985 0.7777905 0.77187479 0.76870128 0.76609353 0.76456109]
 [0.82641526 0.99999987 0.95554087 0.92860867 0.91687083 0.91013262 0.90422738 0.90051271 0.89581038 0.89241343 0.88980185]
 [0.80889414 0.95554087 0.99999978 0.97932796 0.96645097 0.95902761 0.95226223 0.94717566 0.94201016 0.93806084 0.93523524]
 [0.79397982 0.92860867 0.97932796 0.99999975 0.99472319 0.98916679 0.98380042 0.97910198 0.97420873 0.97011639 0.96683917]
 [0.78533461 0.91687083 0.96645097 0.99472319 0.99999982 0.99814142 0.99503228 0.99135086 0.98778857 0.98436983 0.98148073]
 [0.78430985 0.91013262 0.95902761 0.98916679 0.99814142 0.99999954 0.99883548 0.99637759 0.99397064 0.99139934 0.98908539]
 [0.7777905 0.90422738 0.95226223 0.98380042 0.99503228 0.99883548 0.99999951 0.99903892 0.99746555 0.99557987 0.99367589]
 [0.77187479 0.90051271 0.94717566 0.97910198 0.99135086 0.99637759 0.99903892 0.9999995 0.99921839 0.99806486 0.99664509]
 [0.76870128 0.89581038 0.94201016 0.97420873 0.98778857 0.99397064 0.99746555 0.99921839 0.99999944 0.99964678 0.99886494]
 [0.76609353 0.89241343 0.93806084 0.97011639 0.98436983 0.99139934 0.99557987 0.99806486 0.99964678 0.99999954 0.99975477]
 [0.76456109 0.88980185 0.93523524 0.96683917 0.98148073 0.98908539 0.99367589 0.99664509 0.99886494 0.99975477 0.99999953]]
```
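The sketch below checks the Vertex claims directly against values copied from the matrix above. It uses the similarity between each sentence and the next-longer one (the superdiagonal), the row for "dog.", and the row for five repetitions. Treating 1 − similarity as the distance is an assumption made for illustration.

```python
import numpy as np

# Values copied from the Vertex AI matrix above.
# Superdiagonal: similarity between n and n + 1 repetitions, for n = 1..10.
adjacent = np.array([0.82641526, 0.95554087, 0.97932796, 0.99472319, 0.99814142,
                     0.99883548, 0.99903892, 0.99921839, 0.99964678, 0.99975477])
# Row for "dog." and row for "dog." repeated five times.
row_1 = np.array([0.99999975, 0.82641526, 0.80889414, 0.79397982, 0.78533461, 0.78430985,
                  0.7777905, 0.77187479, 0.76870128, 0.76609353, 0.76456109])
row_5 = np.array([0.78533461, 0.91687083, 0.96645097, 0.99472319, 0.99999982, 0.99814142,
                  0.99503228, 0.99135086, 0.98778857, 0.98436983, 0.98148073])

# Assumption: treat 1 - similarity as the distance between neighboring lengths.
adjacent_distance = 1.0 - adjacent
# True if every step to a longer sentence moves the embedding less than the step before.
shrinking = bool(np.all(np.diff(adjacent_distance) < 0))

# Best match for the query "dog." among all other sentences (skip the diagonal).
best_for_single = float(row_1[1:].max())
# Worst match for the five-repetition query among the longer sentences (6..11 reps).
worst_for_five = float(row_5[5:].min())

print(shrinking, round(best_for_single, 3), round(worst_for_five, 3))  # → True 0.826 0.981
```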

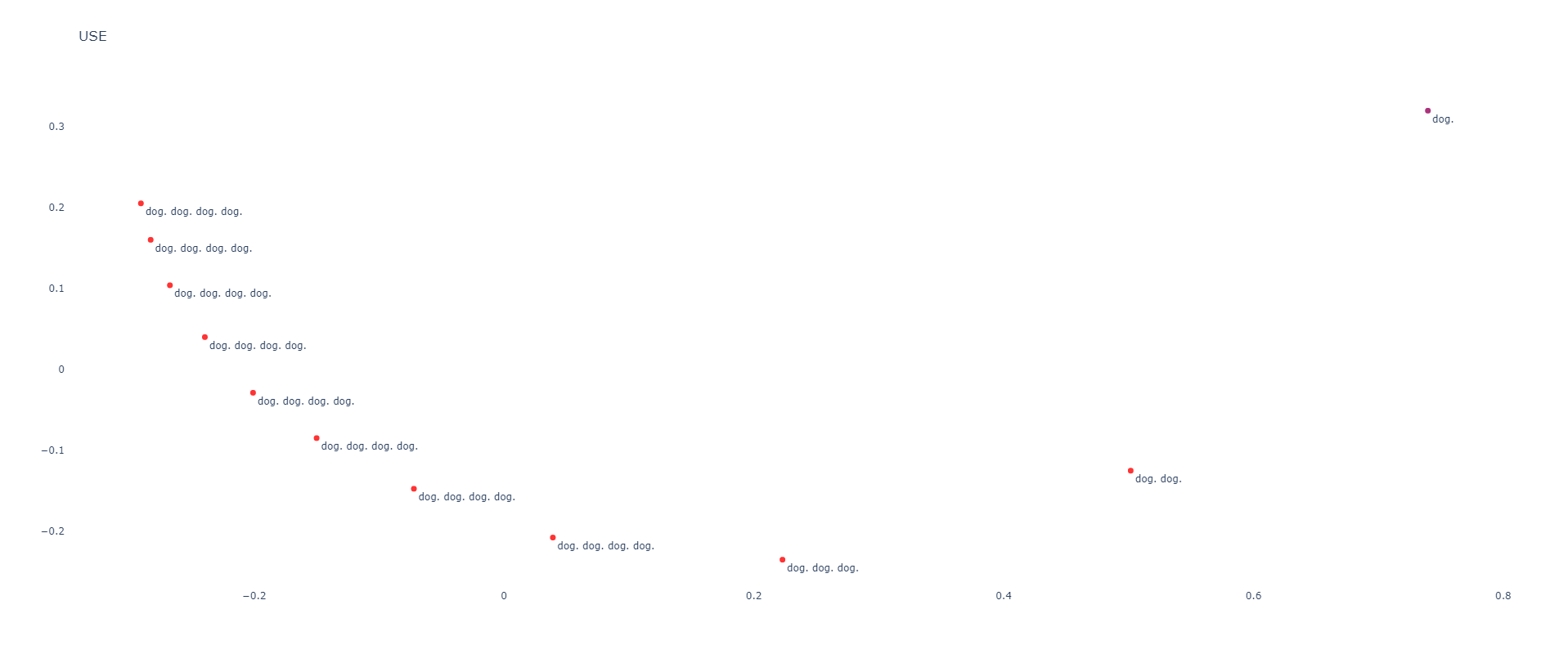

Universal Sentence Encoder

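USE produces a similar pattern, but lays the sentences out in an inverted arc. Its scores also fall much further: "dog." scores just 0.43 against 11 repetitions, compared with 0.76 under Vertex.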

```
[[0.99999976 0.836509 0.69519377 0.6118257 0.5606864 0.5213977 0.49471593 0.47717237 0.4610212 0.44734502 0.43385786]
 [0.836509 0.9999999 0.9457048 0.8645296 0.8030176 0.7529305 0.71828926 0.69061583 0.66716623 0.6457858 0.62502766]
 [0.69519377 0.9457048 0.9999998 0.9741863 0.9368095 0.899614 0.8706771 0.8434928 0.8181821 0.79283595 0.76780236]
 [0.6118257 0.8645296 0.9741863 1.0000002 0.98923475 0.9679816 0.94707745 0.92362285 0.8992692 0.87270725 0.84585106]
 [0.5606864 0.8030176 0.9368095 0.98923475 1. 0.9935412 0.98101866 0.96305376 0.9416253 0.916304 0.889917]
 [0.5213977 0.7529305 0.899614 0.9679816 0.9935412 0.9999999 0.99598885 0.9840876 0.9663594 0.9432925 0.9182836]
 [0.49471593 0.71828926 0.8706771 0.94707745 0.98101866 0.99598885 0.99999964 0.9948007 0.98161536 0.9618306 0.9390698]
 [0.47717237 0.69061583 0.8434928 0.92362285 0.96305376 0.9840876 0.9948007 0.9999995 0.99473584 0.9809712 0.962709]
 [0.4610212 0.66716623 0.8181821 0.8992692 0.9416253 0.9663594 0.98161536 0.99473584 1. 0.9953166 0.98417866]
 [0.44734502 0.6457858 0.79283595 0.87270725 0.916304 0.9432925 0.9618306 0.9809712 0.9953166 1. 0.9965749]
 [0.43385786 0.62502766 0.76780236 0.84585106 0.889917 0.9182836 0.9390698 0.962709 0.98417866 0.9965749 1.0000004]]
```
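This section does not specify which projection the visualization template uses to turn a similarity matrix into a 2D picture such as the arcs in the plots. As one illustration only, the sketch below uses classical multidimensional scaling (MDS). MDS is swapped in here and is not confirmed to be the template's method. The sketch first converts similarities to Euclidean distances, which is exact for unit-normalized embeddings. `sim_matrix` is a hypothetical name for any of the matrices in this section.

```python
import numpy as np

def similarity_to_distance(sim: np.ndarray) -> np.ndarray:
    """Convert cosine similarities to Euclidean distances between unit vectors."""
    # For unit-length a and b: ||a - b||^2 = 2 - 2 * cos(a, b). Clip tiny negatives.
    return np.sqrt(np.clip(2.0 - 2.0 * sim, 0.0, None))

def classical_mds(dist: np.ndarray, dims: int = 2) -> np.ndarray:
    """Classical (Torgerson) MDS: coordinates whose pairwise distances approximate `dist`."""
    n = dist.shape[0]
    # Double-center the squared distances: B = -1/2 * J D^2 J, with J = I - (1/n) 11^T.
    j = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * j @ (dist ** 2) @ j
    # B is symmetric, so eigh applies; keep the `dims` largest eigenvalues.
    eigvals, eigvecs = np.linalg.eigh(b)
    top = np.argsort(eigvals)[::-1][:dims]
    # Negative eigenvalues signal non-Euclidean structure; clip them to zero.
    scale = np.sqrt(np.clip(eigvals[top], 0.0, None))
    return eigvecs[:, top] * scale

# Hypothetical usage with one of the 11x11 matrices loaded as `sim_matrix`:
# xy = classical_mds(similarity_to_distance(sim_matrix))
```

This gives one possible 2D layout. Whether it reproduces the arcs in the plots depends on the projection the template actually uses.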

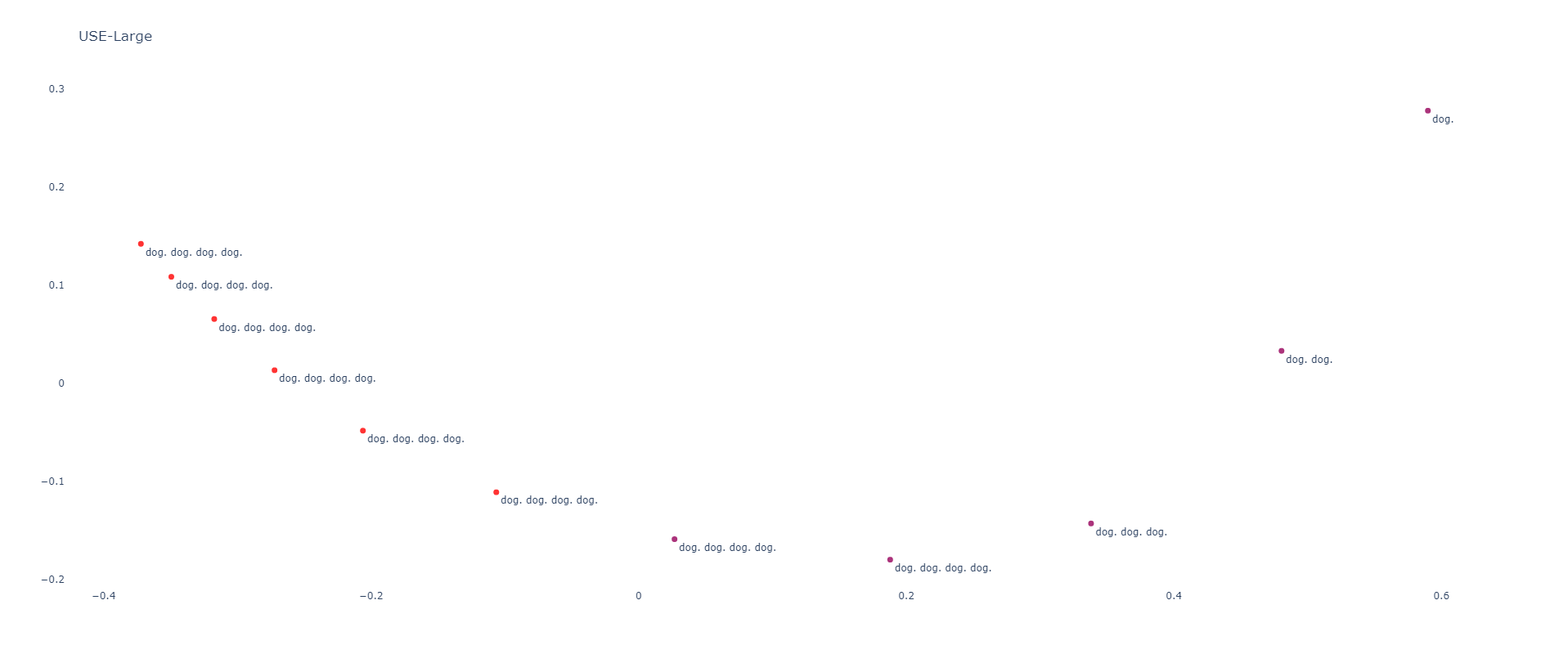

Universal Sentence Encoder Large

USE Large is nearly identical to USE, except that the points are redistributed to make "dog." less of an outlier (it scores 0.96 against "dog. dog.", versus 0.84 under USE):

```
[[0.9999998 0.957119 0.8744787 0.8128081 0.74579334 0.68155485 0.62926996 0.59227306 0.56405103 0.54142386 0.5234394]
 [0.957119 0.99999976 0.9722537 0.9311801 0.8740438 0.81218636 0.7567806 0.71376663 0.67940354 0.65124315 0.62869954]
 [0.8744787 0.9722537 0.9999995 0.9866938 0.9473151 0.8951643 0.8428252 0.7988117 0.762236 0.7315916 0.7068284]
 [0.8128081 0.9311801 0.9866938 0.9999999 0.9856782 0.95192695 0.91154605 0.8743277 0.84182626 0.81373656 0.7905967]
 [0.74579334 0.8740438 0.9473151 0.9856782 0.99999964 0.98954475 0.96604633 0.9397451 0.9145904 0.8917124 0.8722645]
 [0.68155485 0.81218636 0.8951643 0.95192695 0.98954475 0.9999999 0.9928639 0.9778124 0.96054274 0.9434415 0.9281698]
 [0.62926996 0.7567806 0.8428252 0.91154605 0.96604633 0.9928639 0.9999996 0.9956316 0.9861915 0.97498155 0.9640389]
 [0.59227306 0.71376663 0.7988117 0.8743277 0.9397451 0.9778124 0.9956316 1.0000001 0.99729097 0.9912721 0.98421997]
 [0.56405103 0.67940354 0.762236 0.84182626 0.9145904 0.96054274 0.9861915 0.99729097 0.9999999 0.9982609 0.994473]
 [0.54142386 0.65124315 0.7315916 0.81373656 0.8917124 0.9434415 0.97498155 0.9912721 0.9982609 0.99999976 0.99891865]
 [0.5234394 0.62869954 0.7068284 0.7905967 0.8722645 0.9281698 0.9640389 0.98421997 0.994473 0.99891865 1.0000001]]
```
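One simple way to put a number on "less of an outlier" is the average similarity between "dog." and every longer sentence. This measure is an assumption made here for illustration, not something taken from the template. The sketch applies it to the first rows of the USE and USE Large matrices:

```python
import numpy as np

# First rows of the USE and USE Large matrices above, excluding the diagonal:
# similarity of "dog." to 2, 3, ..., 11 repetitions.
use_row = np.array([0.836509, 0.69519377, 0.6118257, 0.5606864, 0.5213977,
                    0.49471593, 0.47717237, 0.4610212, 0.44734502, 0.43385786])
use_large_row = np.array([0.957119, 0.8744787, 0.8128081, 0.74579334, 0.68155485,
                          0.62926996, 0.59227306, 0.56405103, 0.54142386, 0.5234394])

def mean_similarity_to_rest(row: np.ndarray) -> float:
    """Average similarity of one sentence to all the others; lower means more isolated."""
    return float(row.mean())

print(round(mean_similarity_to_rest(use_row), 3),
      round(mean_similarity_to_rest(use_large_row), 3))  # → 0.554 0.692
```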

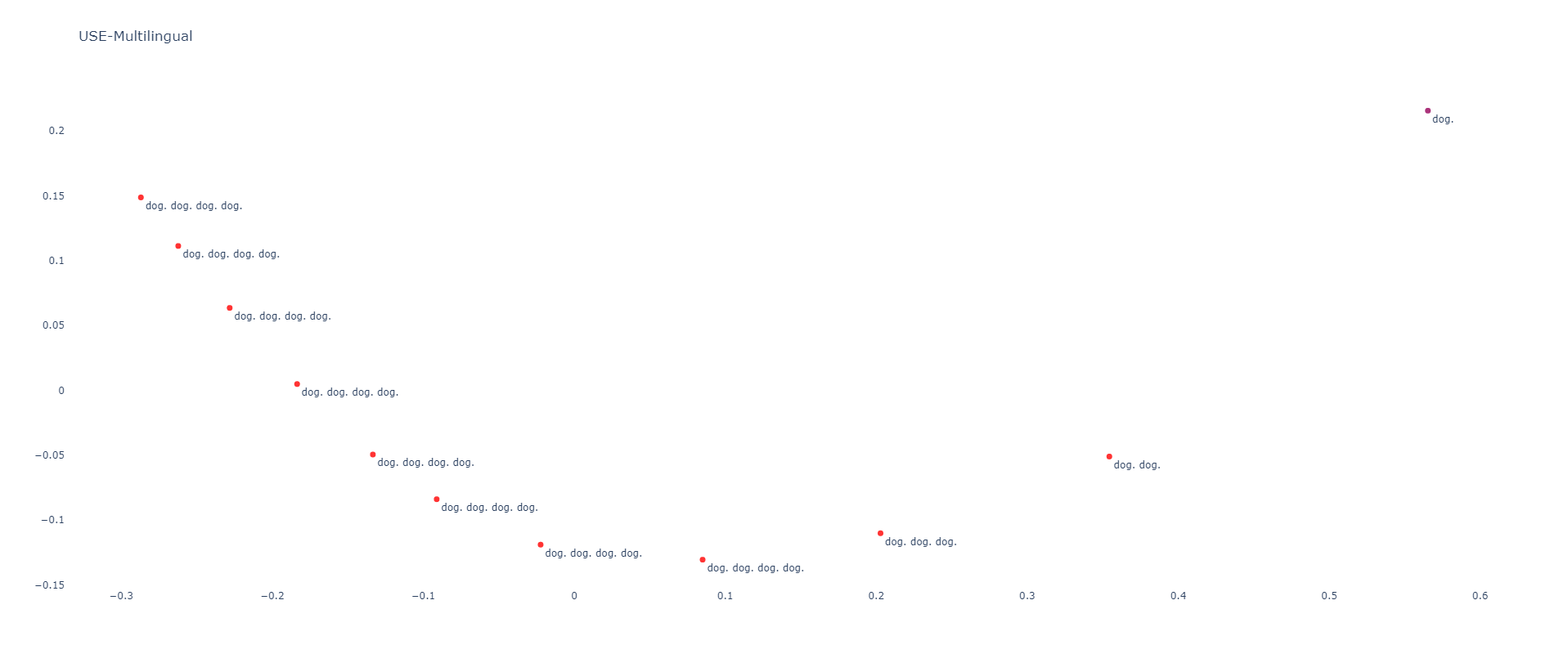

Universal Sentence Encoder Multilingual

USE Multilingual is nearly identical to USE Large:

```
[[0.9999999 0.92415893 0.870756 0.82075846 0.770645 0.73929507 0.71983165 0.69593215 0.669991 0.6451962 0.6237942]
 [0.92415893 1. 0.9826983 0.9519581 0.9126611 0.88452625 0.86547637 0.8426802 0.8176596 0.7930702 0.77140796]
 [0.870756 0.9826983 0.9999997 0.99054503 0.96691006 0.94621325 0.9307738 0.91182256 0.8894202 0.86649203 0.84576607]
 [0.82075846 0.9519581 0.99054503 1. 0.9919983 0.9790244 0.9673419 0.9514489 0.93087524 0.90931106 0.88943475]
 [0.770645 0.9126611 0.96691006 0.9919983 1. 0.9961469 0.98927236 0.9774553 0.95970416 0.9403297 0.92199504]
 [0.73929507 0.88452625 0.94621325 0.9790244 0.9961469 0.99999946 0.9980106 0.9906516 0.9765413 0.9599916 0.943807]
 [0.71983165 0.86547637 0.9307738 0.9673419 0.98927236 0.9980106 0.99999976 0.99655896 0.98573124 0.9716984 0.9574975]
 [0.69593215 0.8426802 0.91182256 0.9514489 0.9774553 0.9906516 0.99655896 0.9999999 0.9960333 0.98718977 0.97681904]
 [0.669991 0.8176596 0.8894202 0.93087524 0.95970416 0.9765413 0.98573124 0.9960333 1.0000002 0.99739134 0.9916526]
 [0.6451962 0.7930702 0.86649203 0.90931106 0.9403297 0.9599916 0.9716984 0.98718977 0.99739134 1. 0.99830234]
 [0.6237942 0.77140796 0.84576607 0.88943475 0.92199504 0.943807 0.9574975 0.97681904 0.9916526 0.99830234 1.0000002]]
```

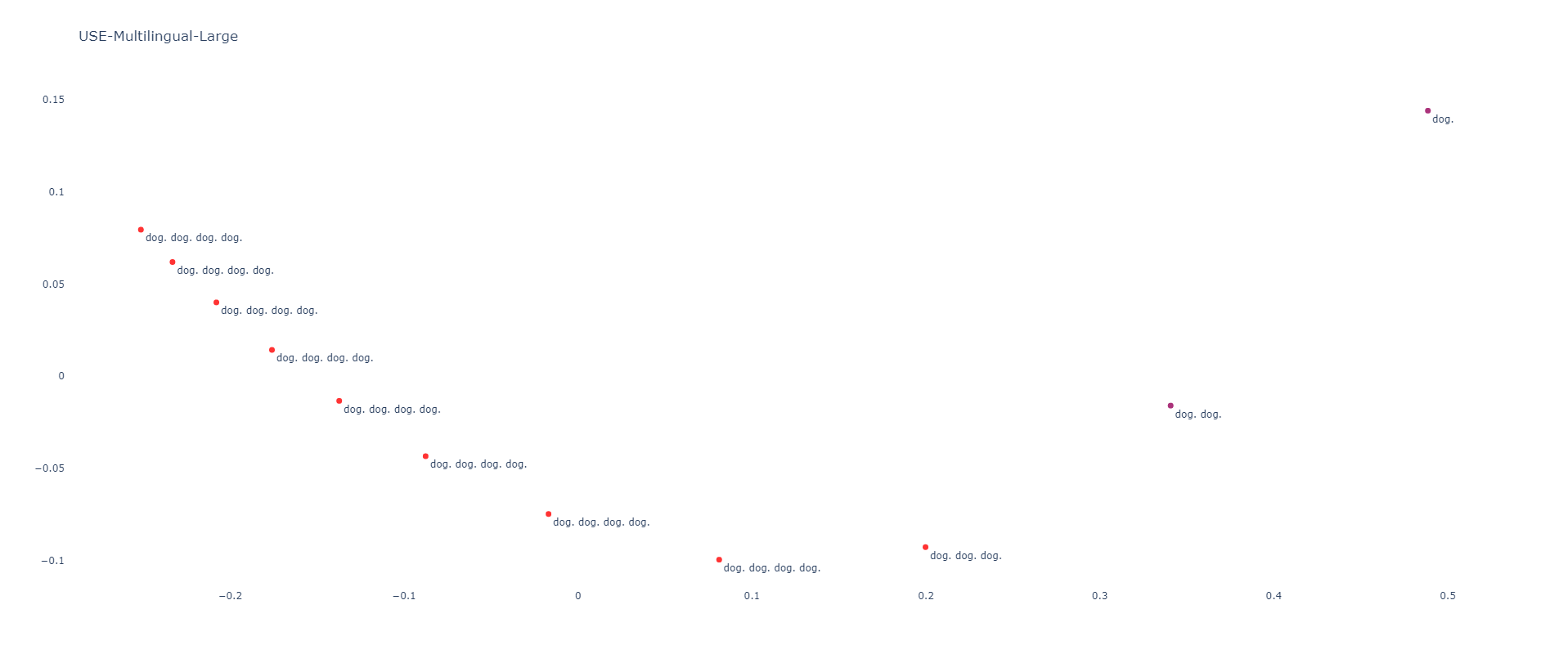

Universal Sentence Encoder Multilingual Large

The Large edition is nearly identical to its smaller counterpart:

```
[[0.99999964 0.9734046 0.928742 0.8870569 0.84823096 0.81632686 0.79155874 0.7704358 0.7514149 0.73513997 0.7226167]
 [0.9734046 1.0000002 0.9865414 0.96108484 0.93179464 0.9054351 0.883829 0.86472344 0.8470632 0.8317225 0.81971437]
 [0.928742 0.9865414 0.99999976 0.9923179 0.97494364 0.95597196 0.9388887 0.92298365 0.90776646 0.89423907 0.8833701]
 [0.8870569 0.96108484 0.9923179 0.99999964 0.9946449 0.9837817 0.97209615 0.9602522 0.9482739 0.937238 0.9281069]
 [0.84823096 0.93179464 0.97494364 0.9946449 0.9999996 0.9969413 0.9907315 0.98314476 0.9746828 0.96640587 0.9592637]
 [0.81632686 0.9054351 0.95597196 0.9837817 0.9969413 0.9999999 0.99826324 0.9942197 0.9887623 0.9828851 0.977512]
 [0.79155874 0.883829 0.9388887 0.97209615 0.9907315 0.99826324 0.9999997 0.9987958 0.99576944 0.9918616 0.98797536]
 [0.7704358 0.86472344 0.92298365 0.9602522 0.98314476 0.9942197 0.9987958 0.9999999 0.9990654 0.99686265 0.994277]
 [0.7514149 0.8470632 0.90776646 0.9482739 0.9746828 0.9887623 0.99576944 0.9990654 1. 0.9993417 0.99792993]
 [0.73513997 0.8317225 0.89423907 0.937238 0.96640587 0.9828851 0.9918616 0.99686265 0.9993417 1.0000002 0.999598]
 [0.7226167 0.81971437 0.8833701 0.9281069 0.9592637 0.977512 0.98797536 0.994277 0.99792993 0.999598 1.0000002]]
```
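"Nearly identical" here describes the shape of the results rather than the raw scores. A rank correlation is one way to check that: it compares the ordering of the scores while ignoring their absolute level. This check is an assumption made for illustration. The sketch applies it to the first rows (similarity of "dog." to 2 through 11 repetitions) of the USE Multilingual and USE Multilingual Large matrices:

```python
import numpy as np

def rank_correlation(a: np.ndarray, b: np.ndarray) -> float:
    """Spearman rank correlation, assuming no ties: Pearson correlation of the ranks."""
    rank_a = np.argsort(np.argsort(a)).astype(float)
    rank_b = np.argsort(np.argsort(b)).astype(float)
    return float(np.corrcoef(rank_a, rank_b)[0, 1])

# Similarity of "dog." to 2..11 repetitions, copied from the two matrices above.
multilingual = np.array([0.92415893, 0.870756, 0.82075846, 0.770645, 0.73929507,
                         0.71983165, 0.69593215, 0.669991, 0.6451962, 0.6237942])
multilingual_large = np.array([0.9734046, 0.928742, 0.8870569, 0.84823096, 0.81632686,
                               0.79155874, 0.7704358, 0.7514149, 0.73513997, 0.7226167])

# Same ordering of scores?
print(round(rank_correlation(multilingual, multilingual_large), 6))
# Average gap in absolute score between the two models.
print(round(float(np.mean(multilingual_large - multilingual)), 3))
```

Both rows fall off in exactly the same order (rank correlation of 1), while the Large model's scores sit about 0.07 higher on average.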

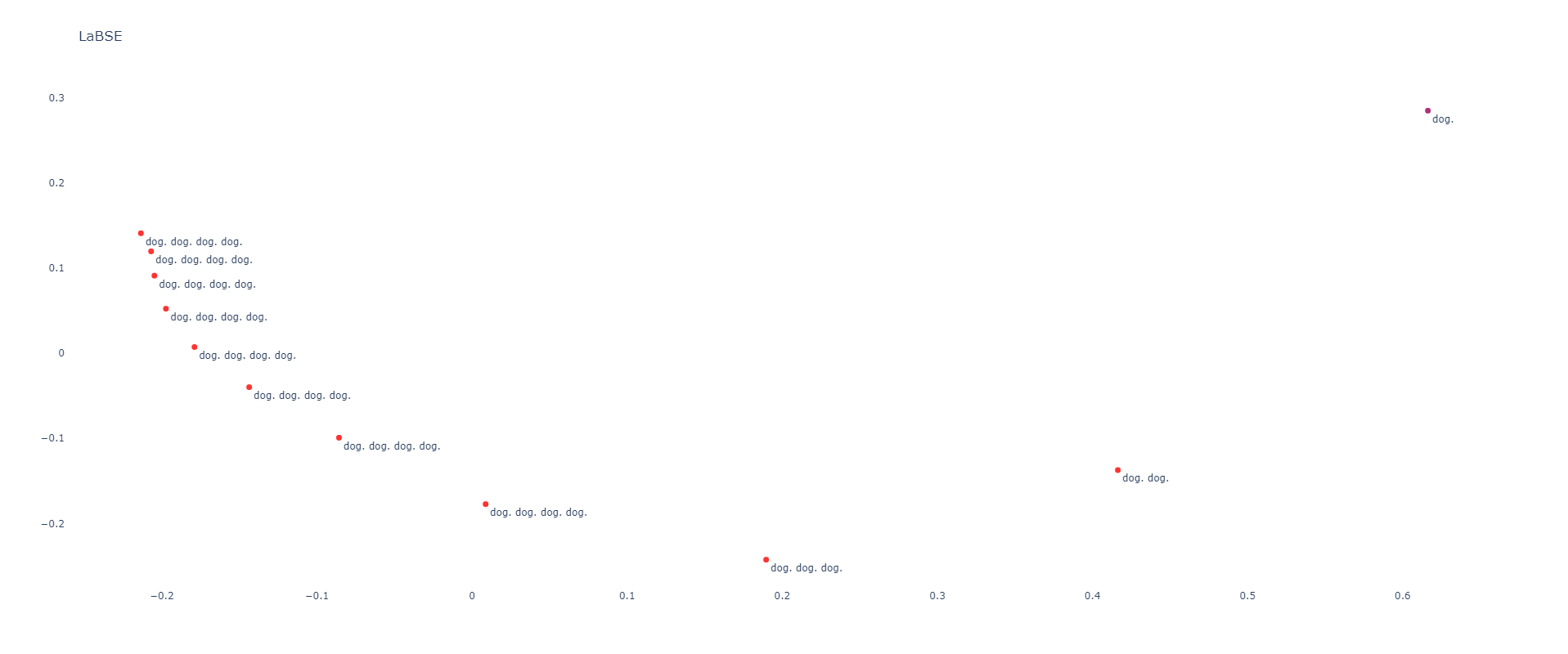

LaBSE

LaBSE's rapidly shrinking distances between successive lengths look like a blend of the Vertex and USE results:

```
[[0.99999976 0.8664909 0.76577914 0.7084024 0.6793747 0.65785915 0.6439845 0.63916564 0.63882697 0.63886976 0.6337432]
 [0.8664909 1. 0.95426214 0.89318067 0.85028195 0.81846714 0.79658693 0.78265584 0.77371734 0.7676935 0.758533]
 [0.76577914 0.95426214 1.0000001 0.9776583 0.9462485 0.9191165 0.8973955 0.87943584 0.8646666 0.85318196 0.84160817]
 [0.7084024 0.89318067 0.9776583 0.99999976 0.99200416 0.9780177 0.96386963 0.94970846 0.93637156 0.924906 0.9142369]
 [0.6793747 0.85028195 0.9462485 0.99200416 0.9999999 0.996289 0.98891294 0.9795922 0.9694664 0.95993483 0.9511211]
 [0.65785915 0.81846714 0.9191165 0.9780177 0.996289 1.0000002 0.99788696 0.9926326 0.98546374 0.97794175 0.97088087]
 [0.6439845 0.79658693 0.8973955 0.96386963 0.98891294 0.99788696 0.99999964 0.9982574 0.9938351 0.988309 0.98288244]
 [0.63916564 0.78265584 0.87943584 0.94970846 0.9795922 0.9926326 0.9982574 1. 0.9985611 0.99530953 0.9916431]
 [0.63882697 0.77371734 0.8646666 0.93637156 0.9694664 0.98546374 0.9938351 0.9985611 1.0000001 0.9990207 0.9969927]
 [0.63886976 0.7676935 0.85318196 0.924906 0.95993483 0.97794175 0.988309 0.99530953 0.9990207 1. 0.99938893]
 [0.6337432 0.758533 0.84160817 0.9142369 0.9511211 0.97088087 0.98288244 0.9916431 0.9969927 0.99938893 1.0000002]]
```

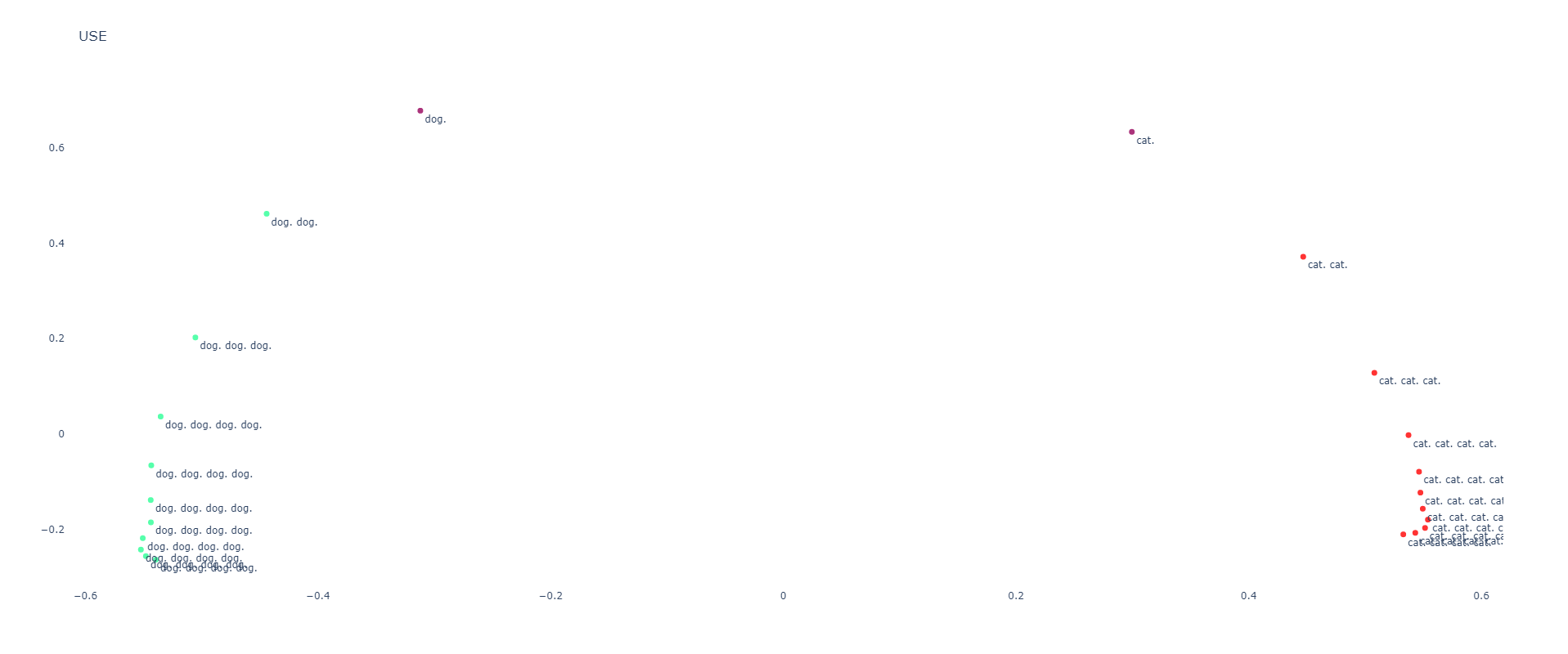

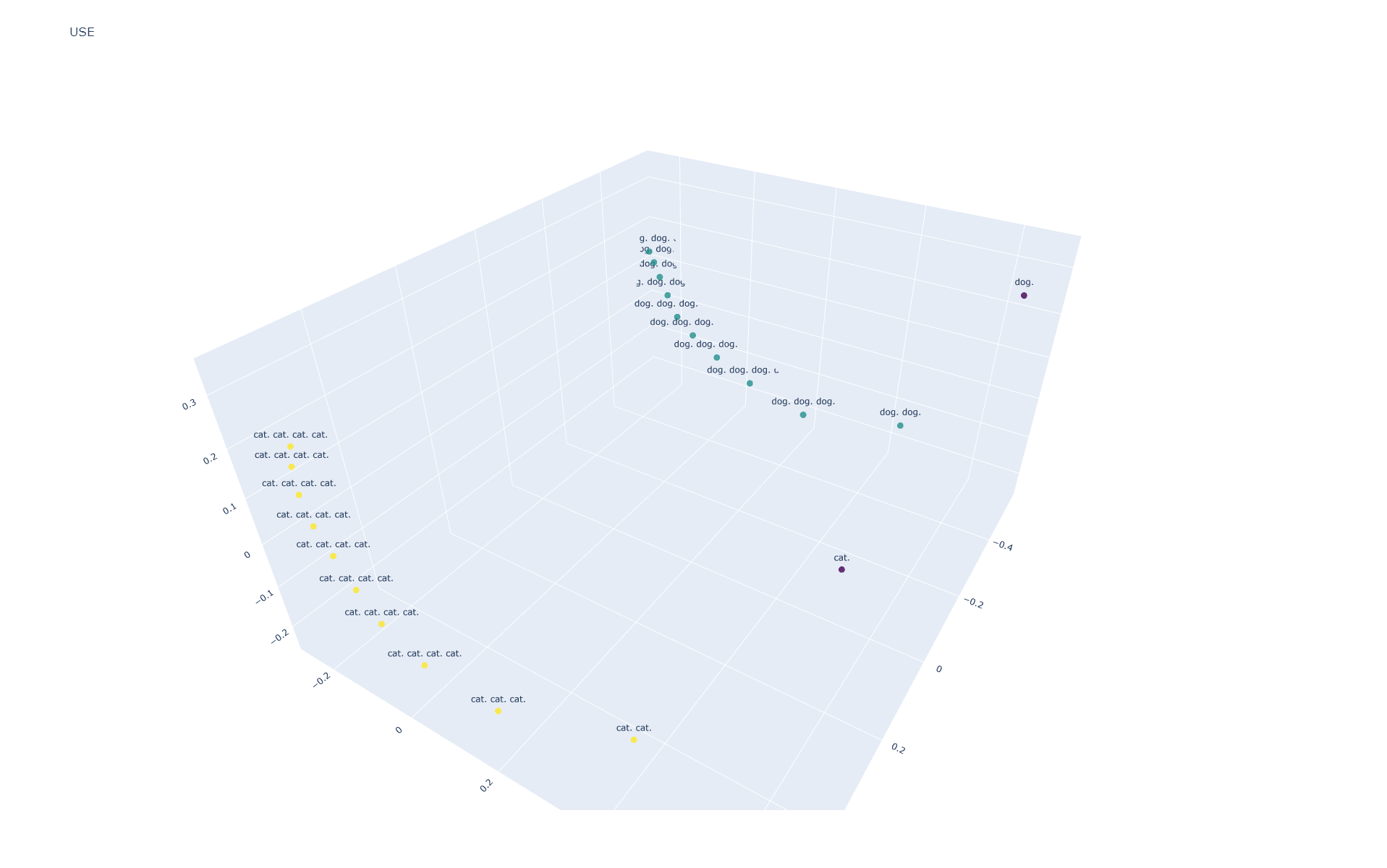

Dissimilar Concepts

If we repeat the process and add the same sequence for "cat":

```python
sentences = [
    "dog.",
    "dog. dog.",
    "dog. dog. dog.",
    "dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog. dog. dog.",
    "dog. dog. dog. dog. dog. dog. dog. dog. dog. dog. dog.",
    "cat.",
    "cat. cat.",
    "cat. cat. cat.",
    "cat. cat. cat. cat.",
    "cat. cat. cat. cat. cat.",
    "cat. cat. cat. cat. cat. cat.",
    "cat. cat. cat. cat. cat. cat. cat.",
    "cat. cat. cat. cat. cat. cat. cat. cat.",
    "cat. cat. cat. cat. cat. cat. cat. cat. cat.",
    "cat. cat. cat. cat. cat. cat. cat. cat. cat. cat.",
    "cat. cat. cat. cat. cat. cat. cat. cat. cat. cat. cat.",
]
```
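The list above can also be generated rather than typed out. The sketch below keeps a `(word, repetitions)` label for every entry, which makes the 22×22 USE matrix easier to read: indices 0 to 10 are the "dog." sentences and 11 to 21 the "cat." sentences. `index_of` is a hypothetical helper for illustration, not part of the template.

```python
# One label per row/column of the similarity matrix: (word, number of repetitions).
labels = [(word, n) for word in ("dog", "cat") for n in range(1, 12)]
# "dog." repeated n times, separated by single spaces, exactly as in the list above.
sentences = [" ".join([f"{word}."] * n) for word, n in labels]

def index_of(word: str, reps: int) -> int:
    """Row/column index in the matrix for `word` repeated `reps` times (hypothetical helper)."""
    return labels.index((word, reps))
```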

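The same length effect appears for both words, and length can even outweigh meaning: under USE, "dog." scores 0.62 against "cat." but only 0.43 against "dog." repeated 11 times. Here are the full USE results, with rows and columns 1 to 11 holding the "dog." sentences and 12 to 22 the "cat." sentences: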

```
[[0.99999976 0.836509 0.69519377 0.6118257 0.5606864 0.5213977 0.49471593 0.47717237 0.46102107 0.44734502 0.43385774 0.62375844 0.42188084 0.2995427 0.2489526 0.2239392 0.21388264 0.20650811 0.19982098 0.19345307 0.18798691 0.18394005]
 [0.836509 0.9999999 0.9457048 0.8645296 0.8030176 0.7529305 0.71828926 0.69061583 0.6671661 0.64578587 0.62502754 0.46960324 0.5013697 0.4105473 0.34599966 0.30868387 0.2877372 0.2710406 0.25788787 0.24595784 0.2367419 0.2310476]
 [0.69519377 0.9457048 0.9999998 0.9741863 0.9368095 0.899614 0.8706771 0.8434928 0.818182 0.792836 0.76780224 0.369168 0.4550584 0.4289844 0.39002663 0.36588383 0.35020107 0.33616513 0.3227144 0.3095587 0.29859114 0.29102653]
 [0.6118257 0.8645296 0.9741863 1.0000002 0.98923475 0.9679816 0.94707745 0.92362285 0.8992691 0.87270725 0.8458509 0.314009 0.39690074 0.40858 0.39344144 0.3823551 0.3729577 0.36266407 0.35043573 0.33795795 0.3267981 0.31850007]
 [0.5606864 0.8030176 0.9368095 0.98923475 1. 0.9935412 0.98101866 0.96305376 0.9416252 0.9163039 0.8899168 0.27703318 0.35779878 0.39173025 0.39109093 0.38843626 0.3840467 0.37678707 0.36621553 0.35504368 0.34447306 0.33599]
 [0.5213977 0.7529305 0.899614 0.9679816 0.9935412 0.9999999 0.99598885 0.9840876 0.9663594 0.9432924 0.9182834 0.24835461 0.32727185 0.37522632 0.38455212 0.38802135 0.38787082 0.38397223 0.37558204 0.3661738 0.35651007 0.3482211]
 [0.49471593 0.71828926 0.8706771 0.94707745 0.98101866 0.99598885 0.99999964 0.9948007 0.9816152 0.9618306 0.93906975 0.22919765 0.30524132 0.3598668 0.37520272 0.3827371 0.38567376 0.38484448 0.37884068 0.37158215 0.36316767 0.35546193]
 [0.47717237 0.69061583 0.8434928 0.92362285 0.96305376 0.9840876 0.9948007 0.9999995 0.9947358 0.9809711 0.96270883 0.21173713 0.27893636 0.33617434 0.35624105 0.3672477 0.37327588 0.375571 0.37136114 0.36607265 0.35915467 0.35220307]
 [0.46102107 0.6671661 0.818182 0.8992691 0.9416252 0.9663594 0.9816152 0.9947358 0.99999976 0.99531645 0.9841784 0.1971588 0.25966263 0.3175702 0.34030318 0.35353678 0.3616891 0.36619446 0.36376154 0.36051077 0.3561116 0.3508696]
 [0.44734502 0.64578587 0.792836 0.87270725 0.9163039 0.9432924 0.9618306 0.9809711 0.99531645 0.9999999 0.99657476 0.18644166 0.24448124 0.3018936 0.32625923 0.3409991 0.3507415 0.35707474 0.35614192 0.35471284 0.35245854 0.34882596]
 [0.43385774 0.62502754 0.76780224 0.8458509 0.8899168 0.9182834 0.93906975 0.96270883 0.9841784 0.99657476 0.9999999 0.17786276 0.2325545 0.28933817 0.31480902 0.3304906 0.34127086 0.34878892 0.3489812 0.34891963 0.3482889 0.34596545]
 [0.62375844 0.46960324 0.369168 0.314009 0.27703318 0.24835461 0.22919765 0.21173713 0.1971588 0.18644166 0.17786276 0.99999976 0.8138282 0.6939877 0.6299654 0.5886438 0.56377375 0.54557765 0.52963656 0.5125126 0.4949625 0.48013783]
 [0.42188084 0.5013697 0.4550584 0.39690074 0.35779878 0.32727185 0.30524132 0.27893636 0.25966263 0.24448124 0.2325545 0.8138282 0.9999997 0.9506396 0.8875934 0.8380843 0.8029097 0.7744355 0.7542992 0.73171645 0.7081555 0.6884301]
 [0.2995427 0.4105473 0.4289844 0.40858 0.39173025 0.37522632 0.3598668 0.33617434 0.3175702 0.3018936 0.28933817 0.6939877 0.9506396 0.99999994 0.9803884 0.9501207 0.9231065 0.8985571 0.8785187 0.8532599 0.825616 0.80142605]
 [0.2489526 0.34599966 0.39002663 0.39344144 0.39109093 0.38455212 0.37520272 0.35624105 0.34030318 0.32625923 0.31480902 0.6299654 0.8875934 0.9803884 1.0000002 0.9912418 0.975399 0.9570545 0.9381983 0.9123611 0.8832873 0.85710776]
 [0.2239392 0.30868387 0.36588383 0.3823551 0.38843626 0.38802135 0.3827371 0.3672477 0.35353678 0.3409991 0.3304906 0.5886438 0.8380843 0.9501207 0.9912418 1. 0.9954494 0.98413026 0.96834254 0.94400847 0.91555846 0.88930553]
 [0.21388264 0.2877372 0.35020107 0.3729577 0.3840467 0.38787082 0.38567376 0.37327588 0.3616891 0.3507415 0.34127086 0.56377375 0.8029097 0.9231065 0.975399 0.9954494 0.99999994 0.9955021 0.9832448 0.9614412 0.934625 0.9091706]
 [0.20650811 0.2710406 0.33616513 0.36266407 0.37678707 0.38397223 0.38484448 0.375571 0.36619446 0.35707474 0.34878892 0.54557765 0.7744355 0.8985571 0.9570545 0.98413026 0.9955021 1. 0.99458045 0.97886354 0.9564743 0.9338423]
 [0.19982098 0.25788787 0.3227144 0.35043573 0.36621553 0.37558204 0.37884068 0.37136114 0.36376154 0.35614192 0.3489812 0.52963656 0.7542992 0.8785187 0.9381983 0.96834254 0.9832448 0.99458045 1.0000002 0.994178 0.97967637 0.9624954]
 [0.19345307 0.24595784 0.3095587 0.33795795 0.35504368 0.3661738 0.37158215 0.36607265 0.36051077 0.35471284 0.34891963 0.5125126 0.73171645 0.8532599 0.9123611 0.94400847 0.9614412 0.97886354 0.994178 1.0000001 0.99518174 0.9846251]
 [0.18798691 0.2367419 0.29859114 0.3267981 0.34447306 0.35651007 0.36316767 0.35915467 0.3561116 0.35245854 0.3482889 0.4949625 0.7081555 0.825616 0.8832873 0.91555846 0.934625 0.9564743 0.97967637 0.99518174 1. 0.99658024]
 [0.18394005 0.2310476 0.29102653 0.31850007 0.33599 0.3482211 0.35546193 0.35220307 0.3508696 0.34882596 0.34596545 0.48013783 0.6884301 0.80142605 0.85710776 0.88930553 0.9091706 0.9338423 0.9624954 0.9846251 0.99658024 0.9999998]]
```
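To see what this means for clustering, the sketch below takes four entries from the USE matrix above: one and eleven repetitions of each word. It runs a small average-linkage agglomerative clustering over them. Average linkage is an illustrative choice here, not necessarily what the visualization template uses.

```python
import numpy as np

# Values copied from the USE matrix above for four of the 22 sentences.
names = ["dog. x1", "dog. x11", "cat. x1", "cat. x11"]
sim = np.array([
    [0.99999976, 0.43385774, 0.62375844, 0.18394005],
    [0.43385774, 0.9999999, 0.17786276, 0.34596545],
    [0.62375844, 0.17786276, 0.99999976, 0.48013783],
    [0.18394005, 0.34596545, 0.48013783, 0.9999998],
])

def average_linkage(sim: np.ndarray, n_clusters: int) -> list[list[int]]:
    """Agglomerative clustering: repeatedly merge the two clusters whose
    members have the highest average pairwise similarity."""
    clusters = [[i] for i in range(len(sim))]
    while len(clusters) > n_clusters:
        best, pair = -np.inf, (0, 1)
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Mean similarity over every cross-cluster pair of members.
                score = sim[np.ix_(clusters[a], clusters[b])].mean()
                if score > best:
                    best, pair = score, (a, b)
        a, b = pair
        clusters[a] = sorted(clusters[a] + clusters[b])
        del clusters[b]
    return clusters

for cluster in average_linkage(sim, n_clusters=2):
    print([names[i] for i in cluster])
```

Asked for two clusters, it groups the sentences by length rather than by word. The single "dog." and "cat." end up together, and so do their 11-repetition counterparts.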