How exactly does one manage and analyze a dataset that totaled more than 3.2 trillion datapoints last July, whose Global Frontpage Graph (GFG) alone is now three quarters of a trillion datapoints, and where a small exploratory dataset might involve a 3.8-billion-edge graph, while a single analysis might process half a petabyte of data or construct a trillion-edge graph? What does the analytic pipeline look like for a project of GDELT’s scale?

Behind the scenes, GDELT relies on a rich landscape of infrastructure, from Google’s BigQuery to open source packages like ElasticSearch to an array of bespoke systems that build upon the incredible compute, storage and networking capabilities of Google’s cloud environment. GDELT’s globally distributed frontier management system, which backs its global crawler fleet across Google Cloud’s worldwide data center footprint, is only possible because of the extraordinary global networking and storage fabric Google has created.

Sometimes infrastructure drives design decisions. The original Television Explorer was based around the measurement unit of sentences not because we believed that was the best methodological unit (in fact we disliked it), but because of a mismatch between ElasticSearch’s document-centric model and the continuous unsegmented speech model of television closed captioning. With a document model, search, matching and relevance calculations are computed at the document level. With a continuous captioning model, all calculations must be performed at the level of each individual match, with the full range of search and relevance operators being available at the word and phrase level. There are ways of approximating such behavior within ElasticSearch, but the majority of such approaches yield matches that are not first-class ElasticSearch citizens from the standpoint of important aggregation and query operators or yield unsustainable computational and IO loading and access patterns.
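To make the distinction concrete, here is a minimal pure-Python sketch of the two counting models. It is illustrative only and is not how Television Explorer or ElasticSearch is implemented. The function names, the sample caption text and the naive punctuation-based sentence splitter are all assumptions made for the example.

```python
import re

def tokenize(text):
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())

def sentence_hits(captions, phrase):
    """Document-style counting: each sentence is one 'document' that
    either contains the phrase or does not, however often it occurs."""
    target = tokenize(phrase)
    n = len(target)
    hits = 0
    # Naive splitter (an assumption): real captioning rarely segments this cleanly.
    for sentence in re.split(r"[.?!]+", captions):
        toks = tokenize(sentence)
        if any(toks[i:i + n] == target for i in range(len(toks) - n + 1)):
            hits += 1
    return hits

def match_hits(captions, phrase, window=2):
    """Match-level counting: every occurrence in the unsegmented token
    stream is its own result, with a small context window around it."""
    toks = tokenize(captions)
    target = tokenize(phrase)
    n = len(target)
    results = []
    for i in range(len(toks) - n + 1):
        if toks[i:i + n] == target:
            context = " ".join(toks[max(0, i - window):i + n + window])
            results.append({"offset": i, "context": context})
    return results

# Hypothetical caption text, invented for this example.
SAMPLE = ("The climate change bill passed. Critics say climate change, "
          "climate change and nothing else. On climate. Change is coming.")

print(sentence_hits(SAMPLE, "climate change"))
print(len(match_hits(SAMPLE, "climate change")))
```

On this sample the sentence model reports 2 matching sentences, while the match-level model reports 4 individual occurrences, including one that straddles a sentence boundary the splitter imposed. That gap is the kind of mismatch the paragraph above describes.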

In the case of ElasticSearch, transitioning to the continuous airtime model we originally envisioned required translating the enormous complexity of ElasticSearch into a continuous text model. This involved not only substantial software development but, more importantly, complex architectural design to handle the myriad edge cases that come from supporting production research-grade systems that are pushed to their limits by researchers exploring the very edges of what can be done today.

In short, building systems of GDELT’s scale isn’t just about writing code or designing technical architectures. It is about reimagining systems for applications their creators never dreamed of.

Today GDELT relies on a core production distributed ElasticSearch infrastructure running on Google’s Compute Engine. It is accessed through a number of abstraction layers that adapt ElasticSearch to specific domains like continuous text, or that use specialized representations to meet the extremely high IO demands of GDELT’s hybrid GIS needs, which combine aggregations (for which ElasticSearch is highly optimized) with large-N document recall (which requires very high IOP capacity). Many of GDELT’s APIs and analytic layers rely on infrastructure layers that essentially use ElasticSearch as an intelligent storage base layer, building on top of it to provide their unique capabilities.

Of course, indexed search comprises only a tiny fraction of GDELT’s daily analytic needs.

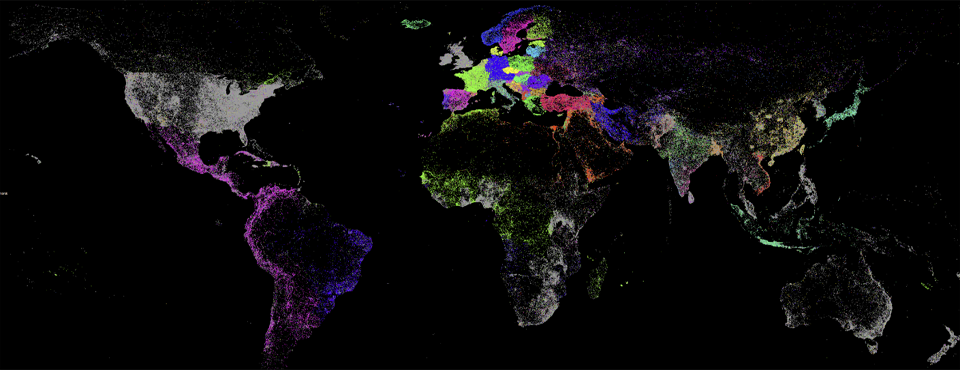

GDELT’s core infrastructure needs are met through a range of bespoke purpose-built systems that rely on Compute Engine’s unique networking and storage capabilities. Applications can quite literally span the entire globe, with elevated latency the only visible difference between communication among VMs in Ohio and LA compared with Ohio and Mumbai. Messaging infrastructure relies on this incredible networking fabric to tie GDELT’s global footprint together, while Google’s Cloud Storage is used as an extraordinary global backing store, allowing any GDELT system anywhere in the world to share a common data fabric with every other GDELT system everywhere else in the world. Thus, a crawler in São Paulo experiencing a strange server response can coordinate with crawlers in London and Tokyo to verify it, and all three can share their results with a diagnostic and research node in Finland, which coordinates with a node in LA to compare the results and investigate root causes.

Such global infrastructure unification lies at the heart of GDELT’s planetary-scale crawling.

This distributed compute and storage fabric also makes it possible for GDELT to bring to bear highly specialized analytic pipelines. Some resource-intensive tools make unusual demands of their host environment, including specific hardware configurations or library versions that make them incompatible with other major tools. Within Google Cloud’s holistic environment, this is no longer an obstacle, since a custom-built VM can simply be launched anywhere and connected to GDELT’s global data pipeline.

For example, a tool that requires the enormous IOP capability of Local SSD can be run on a purpose-built VM with a Local SSD array, while another tool that requires large amounts of RAM but no local disk can be run on a similarly customized VM, with both VMs simply connected into GDELT’s global data fabric from wherever in the world they are run.

Cloud Storage also makes it possible for any node in GDELT’s globally distributed infrastructure to participate in constructing public datasets. Any node anywhere in the world can take responsibility for producing any of GDELT’s realtime public datasets, uploading any given update file to Cloud Storage and BigQuery, allowing us to globally distribute our workload.

Of course, the crown jewel of GDELT’s planetary-scale analytics is Google’s BigQuery platform. Everything from network analysis to GIS analytics to ad hoc sentiment mining and ngram construction to petascale JSON parsing to almost any kind of analysis imaginable happens through BigQuery.

In fact, BigQuery lies at the heart of almost every large-scale analysis we do with GDELT, allowing us to ask any question of GDELT without worrying about scale or tractability.

Behind the scenes, we rely upon BigQuery for many of our large whole-of-data analyses, including powering some of our public-facing analytics tools. When it comes to the ad hoc analyses we do, nearly every one of them relies on BigQuery in some way, often in multiple stages: the primary dataset is filtered, parsed and reshaped in BigQuery, then additional datasets are imported, parsed, shaped and merged in successive passes until the final result is produced. BigQuery is so powerful that even our most complex tasks like graph construction can now be completed entirely in BigQuery, outputting a multi-billion-edge edge list file suitable for importing directly into Gephi for visualization without any further work.
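The shape of such a staged pipeline can be sketched locally at toy scale. The snippet below is a stand-in written in plain Python, not BigQuery SQL and not GDELT's actual queries. It assumes a co-occurrence graph (two entities are linked when they appear in the same record), and the function names, sample records and `min_weight` threshold are all hypothetical. The `Source,Target,Weight` header follows the column names that Gephi's spreadsheet importer conventionally recognizes for edge tables.

```python
import csv
import io
from collections import Counter
from itertools import combinations

def build_edges(records, min_weight=1):
    """Stages 1 and 2: reshape each record into entity pairs, then
    aggregate pair counts into weighted edges and filter by weight."""
    counts = Counter()
    for entities in records:
        # De-duplicate and sort so (a, b) and (b, a) collapse to one edge.
        for a, b in combinations(sorted(set(entities)), 2):
            counts[(a, b)] += 1
    return [(a, b, w) for (a, b), w in sorted(counts.items()) if w >= min_weight]

def to_gephi_csv(edges):
    """Stage 3: serialize the edges as a CSV edge list."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["Source", "Target", "Weight"])
    writer.writerows(edges)
    return buf.getvalue()

# Hypothetical records: each is the list of entities found in one article.
RECORDS = [
    ["Paris", "London", "Tokyo"],
    ["London", "Paris"],
    ["Tokyo"],
]

print(to_gephi_csv(build_edges(RECORDS, min_weight=2)))
```

In a warehouse setting, each stage would typically be a query whose output table feeds the next, with the final table exported as a file. Only the edge-list format carries over unchanged from this toy version.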

Of course, this represents just the briefest glimpse into the global infrastructure that makes GDELT possible, but we hope it gives you ideas of how to fully leverage the incredible capabilities of Google’s global cloud infrastructure in your own applications!