Running a global crawling and processing infrastructure that monitors news outlets in nearly every country in over 65 languages is an immense undertaking with an incredible number of moving parts, and it teaches us a tremendous amount each day about the technical underpinnings of the global news landscape. Few open data projects operate at the scale GDELT does, and we get a lot of interest in the lessons we’ve learned building it. We hope to begin publishing a regular series here on the GDELT Blog summarizing the experiences we’ve found most interesting, the lessons we think will be most useful to others, unusual behavior we’ve observed, trends we’re seeing, why we do things the way we do and other advice that may be useful to the broader community.

As we gear up for the debut of GDELT 3, we thought it would be useful to summarize a few highlights of the lessons learned that have heavily informed its evolution:

- Relentless Logging. Google’s BigQuery platform makes it possible to rapidly interrogate and summarize tens or even hundreds of billions of rows of unstructured data in near-realtime, making it an ideal platform for log analysis. Our global crawling fleet logs a regular heartbeat with a wealth of data about each crawler’s active running state, along with indicators of major state changes. This helps us diagnose emerging trends, triage problems, canary new capabilities and optimize both performance and overall behavior at the local crawler level and at the regional and global fleet levels. We also log every single crawl failure, allowing us to understand global fleet patterns and even route specific domains around a crawler that has launched on a bad IP address, or launch a replacement. Such holistic and detailed logging was one of the recommendations of Kalev’s 2012 IIPC Opening Keynote. Despite considerable pushback from many corners of the web archiving community that such logging would generate impossibly large data files and have minimal utility, GDELT 3’s massive logging infrastructure has proven absolutely crucial to every aspect of its design, tuning and long-term self-healing and automated response capabilities, all while consuming minimal storage resources.

- Domain+IP Routing And Rate Limiting. Historically our URL routing and rate limiting infrastructure has been based on root domain names. Yet, as media around the world have increasingly consolidated, more and more independent domain names bearing no resemblance to each other resolve to the same IP address of their parent company. In some cases, a single media conglomerate may own hundreds of distinct small local outlets that are all hosted on the same set of IP addresses, so crawling each as an independent site results in 429 throttling. In other cases, large numbers of unrelated sites may use the same managed hosting platform that monitors traffic globally across all hosted sites, meaning requests to ten different domain names are treated as requests to a single site and all count towards the same access throttling. To this end, GDELT 3 has switched to Domain+IP routing and rate limiting, in which each URL queued for crawling is routed and scheduled based on both its root domain name and the first IP it resolves to. A few sites use hosting providers that filter at the data center or even global level, but continual changes in their IP ranges make block filtering difficult, and we are working on alternative approaches to handle this relatively small number of edge cases. It is important to note that for domain names that resolve to multiple IP addresses, an increasing number of sites perform traffic analysis across all of their IP addresses as a single block, meaning that sharding requests across the IP addresses will still result in 429s. Thus, URLs must be routed and rate limited by both the root domain name and the complete list of all IP addresses the domain resolves to at that time.

- DNS Resolvers. While most domains resolve fairly simply, either directly to an A record or as a CNAME to an A record, we regularly come across more complex scenarios, such as a CNAME pointing to a CNAME pointing to a CNAME pointing to a CNAME pointing to an A record. These are often sites that have layered multiple external services together through DNS chaining. Not all high-speed parallel DNS resolvers tolerate such deep chaining or other edge behaviors; some fail to resolve or behave strangely. Thus, GDELT 3 uses two different DNS resolution subsystems. The first is a distributed high-speed resolver system that feeds our routing and rate limiting systems and may not properly handle every edge case. Given that routing, in conjunction with aggressive caching, can tolerate a few errors, the relatively small number of errors at this level is acceptable. The second is a standard robust resolver that the crawlers use for actual request issuance; it handles all edge cases and guarantees resolution for extant domains.

- Blacklisted IPs. One of the most powerful aspects of the cloud is the ability to launch a new crawler instantly anywhere in the world. At the same time, each new crawler is assigned an ephemeral IP address that someone else previously used and may have abused, causing it to be blacklisted by some sites and services. This means that one crawler may have no problems accessing a site, while another crawler launches and finds all connections to the site are rejected because of what someone else using that IP address did some time ago. Some sites simply reject all connection attempts, others return a 403 and still others return 5XX errors, which complicates error handling because it is hard to tell whether the result was a genuine server error or merely an ill-conceived IP blacklist response. We’ve been exploring a number of approaches to work around these situations, such as maintaining internal blacklist tables and routing domains around known bad IP addresses assigned to any of our crawlers, or simply exiting automatically and relaunching the new crawler elsewhere. Those running large crawling fleets in the public cloud should be aware of the impact of IP reuse and the unusual behaviors that can result as transient crawlers launch and are assigned IP addresses previously used by other projects. Our fleet’s central realtime logging allows us to observe these situations in realtime and, eventually, to mitigate them automatically without human intervention.

- External Factors. In the past when a crawler received a timeout, a 500 response or other non-rate-limiting error, it would requeue the URL to try it again after a period of time, but if it failed a second time we simply treated the page as dead and moved on. As we’ve explored this issue in more depth, we’ve found a lot of fascinating and complex behavior relating to external factors affecting sites at the same time as we are accessing them. For example, sites may use naïve DDoS protection services that respond to elevated traffic levels from other sources by simply taking the entire site offline, responding to all traffic with a 404 or 500, or replacing all pages with a small block of JavaScript code that sets a temporary authentication cookie and reloads the page. We might be crawling the site at a global rate of one request every 5 minutes and find that for hours at a time the site returns blank JavaScript refresh pages or 404s for all content, while at other times it works just fine. Over time we were able to confirm that these kinds of sites exhibit the same behavior even with no crawling traffic from GDELT, when accessed manually via a standard web browser: the sites would be live for the majority of a day, but disappear for an hour or more at a time randomly throughout the day due to either genuine DDoS attacks or merely ill-configured crawlers run by those without the necessary understanding or experience. These sites are typically found in specific countries or regions and typically use relatively primitive, blunt global DDoS protection services.
  Transient network interruptions, severe weather that impacts power or data centers in an area and even interference by state actors to throttle or disable internet access to specific sites or portions of a country (or even an entire country) during major events can also impact our fleet. That said, more and more local outlets in repressive countries are using centralized regional and international hosting providers, which places their sites beyond the ability of national authorities to throttle or block international access to them during times of crisis.

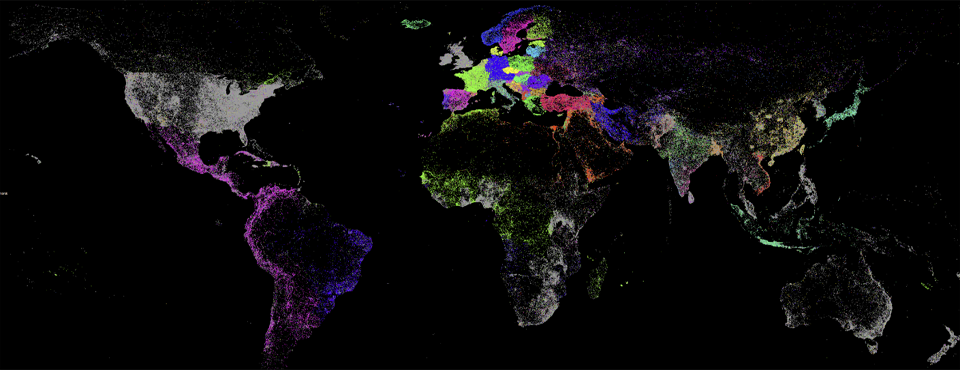
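
The Domain+IP Routing And Rate Limiting item above can be sketched in a few lines. This is a minimal illustration rather than GDELT's actual implementation: the names `route_key`, `DomainIPRateLimiter` and `min_interval_s` are hypothetical, the caller is assumed to supply the already-resolved IP list, and a single fixed interval stands in for whatever per-site policy a real fleet would use. The point it shows is that a request is charged against its root domain and against every IP the domain currently resolves to, so unrelated domains on a shared IP are throttled together.

```python
import time
from typing import Callable, Dict, FrozenSet, Iterable, Tuple

# Hypothetical routing key: root domain plus ALL IPs it resolves to right now.
RouteKey = Tuple[str, FrozenSet[str]]


def route_key(root_domain: str, resolved_ips: Iterable[str]) -> RouteKey:
    """Build a routing key from a root domain and its full resolved IP list."""
    return (root_domain.lower(), frozenset(resolved_ips))


class DomainIPRateLimiter:
    """Allow one request per interval per root domain AND per resolved IP."""

    def __init__(self, min_interval_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.min_interval_s = min_interval_s
        self.clock = clock  # injectable so the limiter can be tested offline
        self._next_ok_domain: Dict[str, float] = {}
        self._next_ok_ip: Dict[str, float] = {}

    def try_acquire(self, key: RouteKey) -> bool:
        """Return True and charge the domain and all its IPs, or False if any is busy."""
        domain, ips = key
        now = self.clock()
        never = float("-inf")
        if self._next_ok_domain.get(domain, never) > now:
            return False
        # A single busy IP blocks the request, since a site may count
        # traffic across all of its addresses as one block.
        if any(self._next_ok_ip.get(ip, never) > now for ip in ips):
            return False
        next_ok = now + self.min_interval_s
        self._next_ok_domain[domain] = next_ok
        for ip in ips:
            self._next_ok_ip[ip] = next_ok
        return True
```

Under these assumptions, a URL whose `try_acquire` call returns `False` would simply be requeued and retried later. Charging every resolved IP, rather than only the first one, reflects the observation that sharding requests across a site's addresses still results in 429s.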

- Geographic Location. In Google Cloud Platform, regardless of which data center globally a crawler runs in, its ephemeral IP address will resolve in most IP geocoding databases to Google’s California headquarters. In theory, this means that the simplistic IP-based geotargeting and geofiltering used by some news outlets should be rendered moot, with all crawlers globally appearing to be from the US and seeing the same geotargeted content. In practice, however, we observe many unusual behaviors when testing differences between fetching the same page from different corners of the globe. For example, fetching the same URL in short succession from data centers in the US, São Paulo, Montreal, London, Belgium, Frankfurt, Finland, the Netherlands, Mumbai, Singapore, Taiwan and Tokyo may yield the exact same page everywhere, subtle differences in the returned page or dramatic differences in what each location receives. Sometimes this is due to DNS round-robin or caching behaviors of the remote site as requests are directed to different CDNs with different copies of the page. Other times we’ve seen isolated cases that are more difficult to explain other than by geographic distance and last mile network latency differences, or by manual IP range targeting by sites that use manually defined blocks of IP addresses instead of IP geodatabases. Understanding the repeatability of requests and how the content returned by news outlets differs per request and requester is an area we are actively exploring, especially its impact on understanding the evolution of the news landscape over time.

- The Power Of Cloud Networking. Google Cloud’s incredible global network infrastructure means that traffic between GDELT’s global crawling fleet and the external services it interacts with, from GDELT’s global orchestration infrastructure to core Google services like GCS, Cloud Vision and Cloud Natural Language, behaves little differently regardless of where the components are located geographically. Beyond the short speed-of-light delay, a crawler running in Tokyo sees the world exactly the same as one in Ohio and can read and write the same GCS files, talk to the same orchestration services, write to the same centralized BigQuery logs, forward content to Cloud AI APIs and even coordinate with other crawlers scattered across the globe. The ability to launch crawlers and other components in any Google data center globally with no modification, have them see exactly the same environment no matter where they are and talk to other modules anywhere in the world across Google’s infrastructure is truly incredible. It stands as a testament to the power of GCP and how tailor-made it is for the kind of massive data-centric global processing that is at the core of GDELT.
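
Returning to the Relentless Logging and Blacklisted IPs items above, the idea of using centralized fetch logs to route domains around a crawler with a bad IP can be sketched as follows. This is an illustrative sketch under stated assumptions, not GDELT's pipeline: the `FetchLog` record shape, the `find_blocked_pairs` and `pick_crawler` helpers and the `min_attempts` threshold are all hypothetical. A crawler is treated as blocked for a domain only when it has failed repeatedly with no successes while some other crawler has succeeded on the same domain, which separates a likely IP blacklist from a site that is simply down for everyone.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Optional, Set, Tuple


class FetchLog(NamedTuple):
    """Hypothetical log row: which crawler fetched which domain, and whether it worked."""
    crawler_id: str
    domain: str
    ok: bool


def find_blocked_pairs(logs: Iterable[FetchLog],
                       min_attempts: int = 3) -> Set[Tuple[str, str]]:
    """Return (crawler, domain) pairs that look like IP blacklisting."""
    # (crawler, domain) -> [success count, failure count]
    counts: Dict[Tuple[str, str], List[int]] = defaultdict(lambda: [0, 0])
    for rec in logs:
        counts[(rec.crawler_id, rec.domain)][0 if rec.ok else 1] += 1

    # Domains that at least one crawler reached successfully.
    reachable = {domain for (_, domain), (ok, _) in counts.items() if ok > 0}

    return {
        (crawler, domain)
        for (crawler, domain), (ok, failed) in counts.items()
        if ok == 0 and failed >= min_attempts and domain in reachable
    }


def pick_crawler(domain: str, crawlers: Iterable[str],
                 blocked: Set[Tuple[str, str]]) -> Optional[str]:
    """Choose the first crawler not flagged as blocked for this domain."""
    for crawler in crawlers:
        if (crawler, domain) not in blocked:
            return crawler
    return None
```

In this sketch a domain that fails from every crawler is never flagged, so its URLs would still follow the ordinary retry path. A crawler flagged for many domains at once would be a natural candidate for exiting and relaunching elsewhere, the other mitigation the text mentions.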

For this inaugural post we listed just a few highlights from the vast body of lessons learned that have driven the networking architecture of GDELT 3’s crawling fleet. As we get this series off the ground, we’ll be posting a lot more, in much more detail, that we hope others will find of great use!