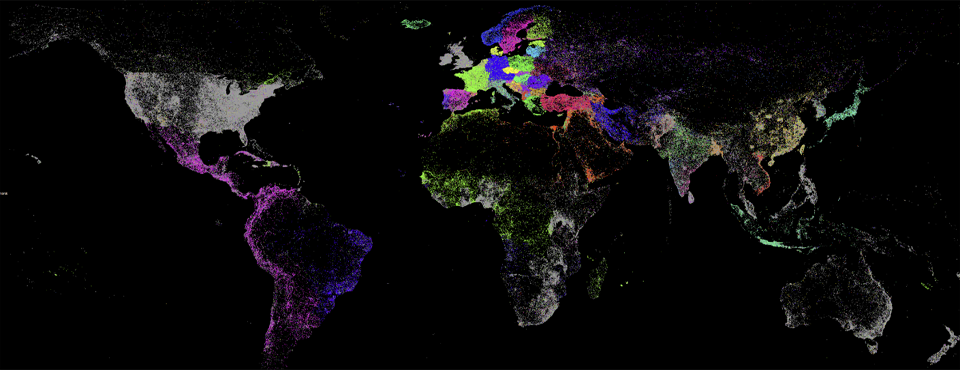

The GDELT Project encompasses an incredible array of datasets spanning the entire planet, reaching across 65 languages and making sense of modalities from text to images to video.

This enormous realtime open firehose of data cataloging planet Earth is available in three forms: as downloadable files in Google Cloud Storage, as JSON APIs for powering web interfaces, and, for those who need to dive into the data at limitless scale, as BigQuery tables that bring the full power of BigQuery to brute-force through trillions of datapoints and seamlessly merge GDELT data with other datasets such as NOAA weather information. Indeed, you’ll notice that nearly every analysis we do with GDELT involves BigQuery somewhere in the analytic pipeline, and most use BigQuery as the entire core pipeline!

In addition to the core GDELT datasets, we also regularly release countless specialty extracts and computed datasets through the GDELT Blog, from mapping media geography, to estimating the geographic affinity of language, to a 9.5-billion-word Arabic ngram dataset, to a 38-year conflict timeline computed in just 3 seconds!

A question we get a lot is “Just how big is GDELT?” The answer? Very big.

Read on to see just how large GDELT’s primary datasets are as of the end of July 2018.

EVENTS

GDELT’s original Events 1.0 dataset has cataloged more than half a billion worldwide events across 200 categories since 1979, totaling 30 billion datapoints. The Events 2.0 dataset, which runs from 2015 to the present, has already cataloged more than 1.1 billion references to 360 million events, totaling 18 billion reference datapoints and 22 billion event datapoints.

In total, the GDELT 1.0 and 2.0 Events and EventMentions datasets contain 83 billion datapoints, making them the smallest of our primary datasets.

FRONT PAGE GRAPH (GFG)

GDELT’s newest dataset, the Front Page Graph (GFG), has recorded more than 35 billion outlinks to more than 240 million unique URLs. After just five months, it is already larger than Facebook's entire internal research archive of every URL posted by at least 20 people and at least once publicly on its platform over more than a year and a half. If you thought social media datasets were large, it's only because there's never been an attempt like GDELT's to catalog the world at these scales.

Despite the dataset’s vast scale, BigQuery takes just 4 minutes to count the total number of unique URLs across those 35 billion rows of data.
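
To make the unique-URL count concrete, here is a minimal in-memory sketch of the same operation in Python. The `Outlink` record and its `from_url`/`link_url` fields are hypothetical simplifications, not the GFG's actual schema; in BigQuery the equivalent is a `COUNT(DISTINCT ...)` over the link column, with the work distributed across many machines.

```python
from typing import Iterable, NamedTuple


class Outlink(NamedTuple):
    """Hypothetical, simplified GFG record (not the real schema):
    the page a link was found on and the URL it points to."""
    from_url: str
    link_url: str


def count_unique_urls(records: Iterable[Outlink]) -> int:
    """Exact count of distinct link targets, streaming over the records once.

    In-memory analogue of SELECT COUNT(DISTINCT link_url) in BigQuery.
    """
    seen: set[str] = set()
    for rec in records:
        seen.add(rec.link_url)
    return len(seen)


# Hypothetical sample: three outlinks, two distinct targets.
sample = [
    Outlink("https://news.example/", "https://news.example/a"),
    Outlink("https://news.example/", "https://news.example/b"),
    Outlink("https://other.example/", "https://news.example/a"),
]
print(count_unique_urls(sample))  # 2
```

An exact set is fine for a toy sample; at 240 million distinct URLs a single-machine set becomes memory-hungry, which is where BigQuery's distributed execution (or its `APPROX_COUNT_DISTINCT` function, if an estimate suffices) earns its keep.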

For each of the Front Page Graph's more than 35 billion records, we store 6 different attributes, meaning the dataset encodes a total of 221 billion datapoints.
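
The datapoint total is simple multiplication: records times attributes per record. Working it in both directions is a useful sanity check. Exactly 35 billion records would give 210 billion datapoints, so the 221 billion figure implies roughly 36.8 billion records, consistent with "more than 35 billion." The helper names below are illustrative only.

```python
BILLION = 1_000_000_000


def total_datapoints(records: float, attributes_per_record: int) -> float:
    """Datapoints = records x attributes stored per record."""
    return records * attributes_per_record


def implied_records(datapoints: float, attributes_per_record: int) -> float:
    """Invert the product to recover the record count behind a datapoint total."""
    return datapoints / attributes_per_record


# Lower bound using the rounded "35 billion" record count.
floor = total_datapoints(35 * BILLION, 6)
# Record count implied by the reported 221 billion datapoints.
implied = implied_records(221 * BILLION, 6)

print(f"{floor / BILLION:.0f}B")    # 210B
print(f"{implied / BILLION:.1f}B")  # 36.8B
```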

IMAGERY

Over two and a half years, GDELT’s Global Visual Knowledge Graph (GVKG) has processed nearly half a billion global news images totaling almost a quarter trillion pixels. From that immense global visual archive, the Google Cloud Vision API computed more than 321 billion datapoints totaling 8.9TB.

As with the Front Page Graph, BigQuery makes working with all this data trivial. The complete 8.9TB JSON archive can be broken into its component 321 billion individual values in just 2.5 minutes!
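
Breaking a JSON archive into its "component individual values" amounts to walking each document and emitting every scalar leaf. The sketch below does this for a single parsed document. The sample record is a hypothetical, heavily simplified stand-in loosely modeled on Cloud Vision label output, not GDELT's actual GVKG schema; BigQuery performs the equivalent extraction in parallel across the full archive.

```python
import json
from typing import Any, Iterator


def leaf_values(node: Any) -> Iterator[Any]:
    """Yield every scalar (non-object, non-array) value in a parsed JSON document."""
    if isinstance(node, dict):
        for value in node.values():
            yield from leaf_values(value)
    elif isinstance(node, list):
        for item in node:
            yield from leaf_values(item)
    else:
        # Strings, numbers, booleans and null are the individual values.
        yield node


# Hypothetical, simplified annotation record (not the real GVKG layout).
doc = json.loads("""
{"labelAnnotations": [{"description": "crowd", "score": 0.93},
                      {"description": "street", "score": 0.81}],
 "safeSearchAnnotation": {"violence": "UNLIKELY"}}
""")
print(sum(1 for _ in leaf_values(doc)))  # 5
```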

GLOBAL KNOWLEDGE GRAPH (GKG)

GDELT’s primary Global Knowledge Graph (GKG) has processed just short of 800 million articles in 65 languages from 2015 to the present, totaling 22 billion data fields. Of course, many GKG fields are actually delimited blobs that pack large numbers of datapoints into a single field. For example, stored within those fields are 6.6 billion geographic references, each of which encodes 8 separate fields, for a total of 52.9 billion actual datapoints. There are also 29 billion thematic references, encoding a total of 58 billion datapoints; 1.9 billion person mentions and 2.3 billion organization mentions, encoding 3.8 billion and 4.7 billion datapoints respectively; and 8.1 billion capitalized phrase mentions, totaling 16.3 billion datapoints.
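
Unpacking one of these delimited blobs is a two-level split: first into individual references, then each reference into its sub-fields. In the sketch below, the `;` reference separator, the `#` field separator, and the sample values are assumptions for illustration, patterned on a location-style field with 8 sub-fields per reference; the GKG codebook defines the real layouts. At 8 fields per reference, 6.6 billion geographic references work out to roughly 52.8 billion datapoints, in line with the figure above.

```python
def parse_blob(blob: str, record_sep: str = ";", field_sep: str = "#") -> list[list[str]]:
    """Split a packed field into references, each a list of sub-fields.

    Separators are assumptions for illustration; empty references
    (e.g. from a trailing separator) are skipped.
    """
    return [ref.split(field_sep) for ref in blob.split(record_sep) if ref]


def count_datapoints(blob: str, record_sep: str = ";", field_sep: str = "#") -> int:
    """Total number of individual sub-field values packed into one blob."""
    return sum(len(ref) for ref in parse_blob(blob, record_sep, field_sep))


# Hypothetical two-reference location blob with 8 sub-fields per reference.
blob = "1#France#FR#FR#46#2#FR#12;4#Paris, France#FR#FR00#48.8667#2.3333#-1456928#340"
refs = parse_blob(blob)
print(len(refs), count_datapoints(blob))  # 2 16
```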

The GKG's GCAM (Global Content Analysis Measures) emotional assessments alone encode 2.3 trillion datapoints. Since only non-zero values are actually written to the GKG files to conserve space, the on-disk GCAM data encodes 717 billion non-zero emotional values spanning 6TB, and BigQuery can parse the delimited format into all 717 billion individual records in just 2.5 minutes!
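
The sparse encoding is what separates the 2.3 trillion logical datapoints from the 717 billion values actually written: only non-zero dimensions are stored, and anything absent is implicitly zero. The sketch below assumes, for illustration, a comma-separated list of `dimension:value` pairs; the dimension keys in the sample are hypothetical. It also computes the implied density, showing that roughly 31% of the GCAM datapoints are non-zero.

```python
def parse_gcam(field: str) -> dict[str, float]:
    """Parse an assumed sparse 'dimension:value,...' list into a dict.

    Dimensions missing from the field are implicitly zero and are not stored.
    """
    values: dict[str, float] = {}
    for pair in field.split(","):
        if not pair:
            continue
        key, _, raw = pair.partition(":")
        values[key] = float(raw)
    return values


def density(nonzero: float, total: float) -> float:
    """Fraction of logical datapoints that are non-zero (and thus written to disk)."""
    return nonzero / total


# Hypothetical sample field with four non-zero dimensions.
gcam = parse_gcam("wc:125,c2.21:4,c10.1:40,v10.1:3.21")
print(len(gcam), gcam["c10.1"])           # 4 40.0
print(f"{density(717e9, 2.3e12):.1%}")    # 31.2%
```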

Of course, these numbers reflect only the primary news GKG, not our special collections: the 21-billion-word Academic Literature GKG, the half-century Human Rights GKG, the million-broadcast Television News GKG, or the 200-year, 3.5-million-book Google Books and Internet Archive Books GKG!

In total, the primary GKG dataset encodes 2.5 trillion datapoints, with 918 billion non-zero datapoints written to the files.

GRAND TOTAL

Putting these numbers together, and looking only at the four primary datasets (ignoring our myriad other specialty datasets, including the GKG special collections, which would expand these numbers even further), we have a combined dataset of more than 3.2 trillion datapoints covering the evolution of global human society over the past 200 years, all of it available as completely open data.

That’s a lot of open data!