Over the past year we've explored multimodal image embedders through the eyes of both OpenAI's CLIP and GCP's Vertex AI Multimodal Embedding model. For those interested in comparing the two models in real-world use cases, we've created a simple Python notebook that you can use with Colab on your own small sample datasets.

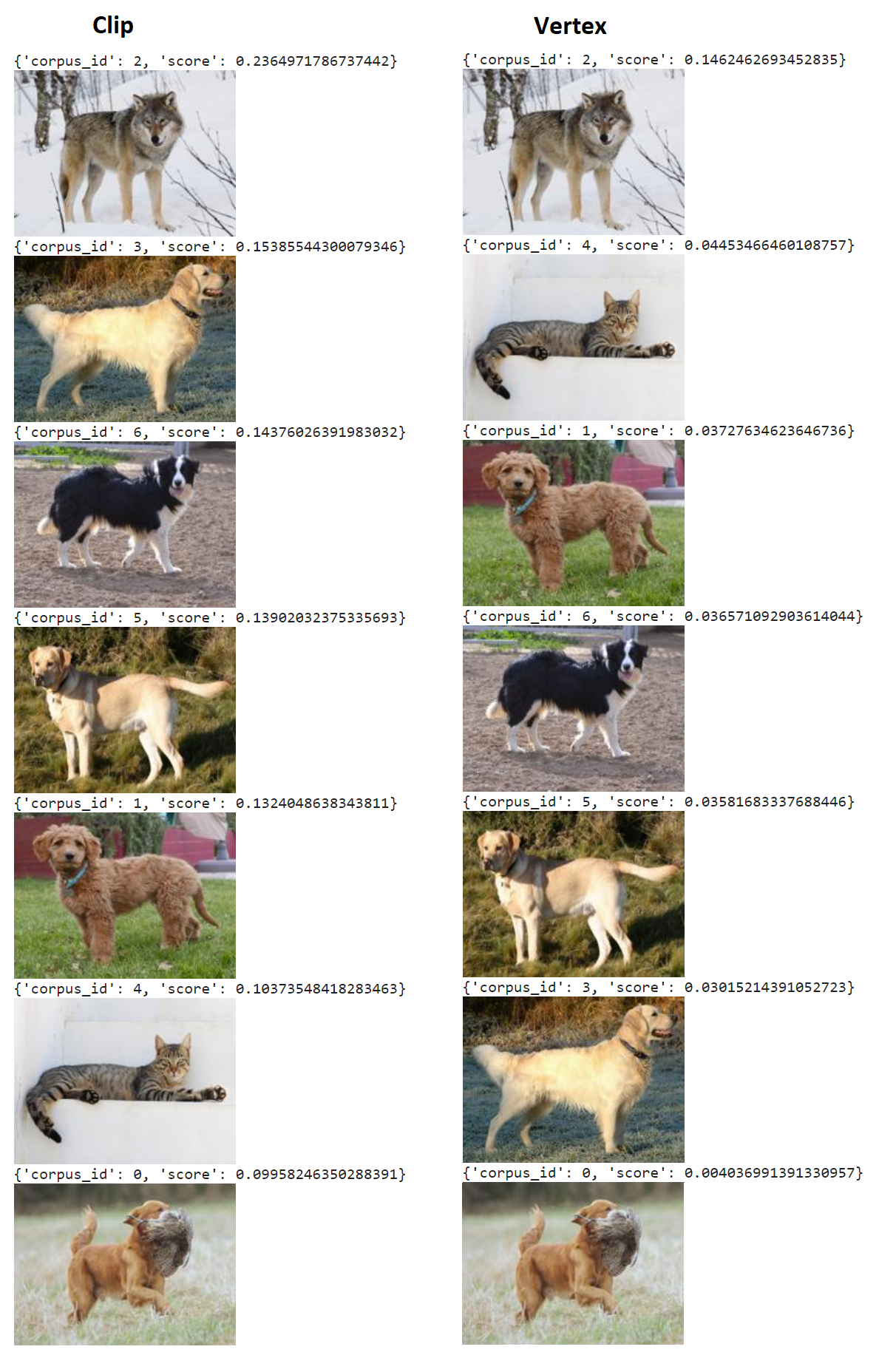

Comparing the two models on a collection of dog, wolf and cat images from Wikipedia, we find that both models yield the same top results for clearly-defined search terms and equally reasonable results for others. Overall, the two models are nearly indistinguishable on overall performance. The main difference is that Vertex tends to offer better score stratification, with strong discriminatory breakpoints between the relevant and irrelevant images, whereas Clip tends to exhibit much smaller divides that make it more difficult to determine the end of the relevant results. For human-assisted visual search, both models yield similarly useable results, while for automated filtering workflows, Vertex's stronger stratification would be beneficial for categorical thresholding.

First install the necessary libraries:

!pip install sentence-transformers

import sentence_transformers

from sentence_transformers import SentenceTransformer, util

from PIL import Image

import glob

import torch

import pickle

import zipfile

from IPython.display import display

from IPython.display import Image as IPImage

import os

from tqdm.autonotebook import tqdm

torch.set_num_threads(4)

import re

#First, we load the respective CLIP model

model = SentenceTransformer('clip-ViT-L-14')

Now download a set of sample images. Replace the code below with the list of images you wish to analyze. You can download up to a few hundred images depending on your Colab VM type, though a larger number of images can take a substantial amount of time. You will want to make sure that you are using a GPU type VM for your Colab notebook (change via the dropdown at top-right of the notebook) or using the "Change runtime type" option under the "Runtime" menu. You can even download a ZIP file and unpack to provide a larger number of images all at once:

!rm -rf images !mkdir images !wget -q "https://upload.wikimedia.org/wikipedia/commons/thumb/b/bd/Golden_Retriever_Dukedestiny01_drvd.jpg/640px-Golden_Retriever_Dukedestiny01_drvd.jpg" -O "images/1.jpg" !wget -q "https://upload.wikimedia.org/wikipedia/commons/thumb/3/34/Labrador_on_Quantock_%282175262184%29.jpg/1200px-Labrador_on_Quantock_%282175262184%29.jpg" -O "images/2.jpg" !wget -q "https://upload.wikimedia.org/wikipedia/commons/a/aa/FTCB181207.jpg" -O "images/3.jpg" !wget -q "https://upload.wikimedia.org/wikipedia/commons/f/f2/Golden_Doodle_Standing_%28HD%29.jpg" -O "images/4.jpg" !wget -q "https://upload.wikimedia.org/wikipedia/commons/5/5f/Border_Collie_Macho_Blanco_y_Negro_%28Batman%2C_los_Baganes_Border_Collie%29.jpg" -O "images/5.jpg" !wget -q "https://upload.wikimedia.org/wikipedia/commons/thumb/6/68/Eurasian_wolf_2.jpg/1200px-Eurasian_wolf_2.jpg" -O "images/6.jpg" !wget -q "https://upload.wikimedia.org/wikipedia/commons/1/15/Cat_August_2010-4.jpg" -O "images/7.jpg"

Now we'll compute the the CLIP embeddings for the images. This does not require any authentication and runs entirely locally within your notebook's VM:

img_names = list(glob.glob("./images" + '/*'))

print("Images:", len(img_names))

img_emb = model.encode([Image.open(filepath) for filepath in img_names], batch_size=128, convert_to_tensor=True, show_progress_bar=True)

Then we'll compute the GCP Vertex AI Multimodal Embedding embeddings. Note that this will require you to authenticate Colab to your Google account (you are only granting Google's Colab access to your account for the duration of the notebook's run). You'll also need to change the "[YOURPROJECTIDHERE]" in the URL. Note that since GCP enforces a maximum base64-encoded image filesize of 2MB we resize each image to 1000 x 1000 pixels:

from google.colab import auth

auth.authenticate_user()

import base64

import json

import numpy as np

import torch

import io

from PIL import Image

#compute the embeddings

img_emb_gcp = []

for i in range(len(img_names)):

!rm cmd.json

!rm results.json

print("Embedding: ", img_names[i])

#this works, but if image is larger than 2MB it fails, so let's resize...

#with open(img_names[i], "rb") as image_file:

#image_data = image_file.read()

with open(img_names[i], "rb") as image_file:

image = Image.open(image_file)

image = image.resize((1000, 1000)) # Resize the image to 1000x1000 pixels

with io.BytesIO() as buffer:

image.save(buffer, format="JPEG") # You can change the format if needed

image_data = buffer.getvalue()

base64_encoded = base64.b64encode(image_data).decode('utf-8')

data = {"instances": [ {"image": { "bytesBase64Encoded": base64_encoded} } ]}

with open("cmd.json", "w") as file: json.dump(data, file)

cmd = 'curl -s -X POST -H "Authorization: Bearer $(gcloud auth print-access-token)" -H "Content-Type: application/json; charset=utf-8" -d @cmd.json "https://us-central1-aiplatform.googleapis.com/v1/projects/[YOURPROJECTIDHERE]/locations/us-central1/publishers/google/models/multimodalembedding:predict" > results.json'

!{cmd}

with open('results.json', 'r') as f: data = json.load(f)

print(data)

img_emb_gcp.append(torch.tensor(data['predictions'][0]['imageEmbedding']))

Now we define our two search functions, one for CLIP and one for GCP:

def searchclip(query, k=20):

# First, we encode the query (which can either be an image or a text string)

query_emb = model.encode([query], convert_to_tensor=True, show_progress_bar=False)

# Then, we use the util.semantic_search function, which computes the cosine-similarity

# between the query embedding and all image embeddings.

# It then returns the top_k highest ranked images, which we output

hits = util.semantic_search(query_emb, img_emb, top_k=k)[0]

print("Query:")

display(query)

for hit in hits:

print(hit)

display(IPImage(os.path.join(img_names[hit['corpus_id']]), width=200))

from IPython.display import Javascript

display(Javascript('''google.colab.output.setIframeHeight(0, true, {maxHeight: 3000})'''))

import torch

def searchgcp(query, k=20):

#get the embedding for the search phrase...

!rm cmd.json

!rm results.json

data = {"instances": [ {"text": query} ]}

with open("cmd.json", "w") as file: json.dump(data, file)

cmd = 'curl -s -X POST -H "Authorization: Bearer $(gcloud auth print-access-token)" -H "Content-Type: application/json; charset=utf-8" -d @cmd.json "https://us-central1-aiplatform.googleapis.com/v1/projects/[YOURPROJECTID]/locations/us-central1/publishers/google/models/multimodalembedding:predict" > results.json'

!{cmd}

with open('results.json', 'r') as f: data = json.load(f)

print(data)

query_emb = torch.tensor(data['predictions'][0]['textEmbedding'])

# Then, we use the util.semantic_search function, which computes the cosine-similarity

# between the query embedding and all image embeddings.

# It then returns the top_k highest ranked images, which we output

hits = util.semantic_search(query_emb, img_emb_gcp, top_k=k)[0]

print("Query:")

display(query)

for hit in hits:

print(hit)

display(IPImage(os.path.join(img_names[hit['corpus_id']]), width=200))

from IPython.display import Javascript

display(Javascript('''google.colab.output.setIframeHeight(0, true, {maxHeight: 3000})'''))

And make resized versions of the images for rapid display in our search results:

#make resized versions of all of the images to speed display below...

img_names = list(glob.glob("./images" + '/*'))

print("Images:", len(img_names))

for img_name in img_names:

img = Image.open(img_name)

img = img.resize((200,200))

output_path = img_name + ".resized.jpg"

img.save(output_path, "JPEG")

Now you can search using:

search = 'dog with bird in its mouth'

print("CLIP RESULTS")

searchclip(search)

print("GCP RESULTS")

searchgcp(search)

You can compare some of the results below:

For "dog with bird in its mouth" the ordering of the results is identical, but Vertex shows much greater score discrimination, with an existential drop between the single relevant image and the following image, while Clip exhibits a much more gradual decrease over the top few images, making it more difficult to distinguish the breakpoint.

For "golden retriever" the top result is the same, but Clip incorrectly ranks the lab second whereas Vertex correctly orders them, though both rank the lab above the golden doodle. Interestingly, Vertex ranks the grey wolf as slightly more "golden retrieverish" than the border collie. Once again, Vertex offers greater score discrimination, with the similarity score dropping sharply before the lab, while Clip maintains a more gradual score decrease and lumps in the golden doodle before dropping sharply before the border collie.

A search for "wolf" yields the same top result, while Clip ranks the cat towards the bottom and Vertex ranks it second-highest, showing its fixation on the coat color and ear structure versus Clip's emphasis on facial and overall body structure. Both Clip and Vertex show a strong drop of roughly the same amount between the top and second-highest results.

Finally, what about just "dog"? Clip and Vertex rank the dogs in different orders, but cluster them with fairly similar scores until their respective breakpoints. Clip drops sharply with the dog carrying the bird, which is ranked roughly equally similar to the cat (with the wolf being grouped with the dogs), while Vertex experiences a drop between the dogs and wolf, but a less pronounced one, and drops, though not existentially, when it reaches the cat.