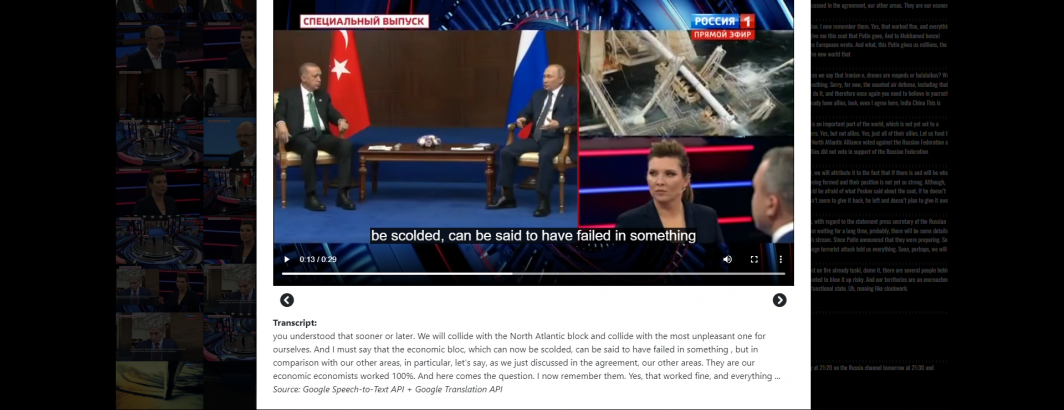

In November we announced the availability of 1.4 million minutes of machine-transcribed Belarusian, Russian and Ukrainian television news broadcasts, in collaboration with the Internet Archive's Television News Archive. Earlier today we unveiled English machine translations of those broadcasts: the complete nine-month archive for Ukrainian channel Espreso and Russian channels Russia 1 and Russia 24, and November and December 2022 for all six channels.

For those interested in applying various kinds of content analysis tools to this archive, from entity extraction and sentiment analysis to more sophisticated narrative analysis like topical clustering and embedding, we've created a simple workflow that makes it easy to bulk download the machine transcripts and their English machine translations.

First, compile the list of inventory files for the dates and channels of interest. Change the start and end dates in the first line to set the date range, and delete the lines for any channels you do not need (the commands assume bash with GNU date, GNU parallel, wget and jq installed):

start=20220325; end=20221231; while [[ ! $start > $end ]]; do echo $start; start=$(date -d "$start + 1 day" "+%Y%m%d"); done > DATES

rm -rf INVENTORIES

mkdir INVENTORIES

time cat DATES | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/ESPRESO.{}.inventory.json -P ./INVENTORIES/'

time cat DATES | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/RUSSIA1.{}.inventory.json -P ./INVENTORIES/'

time cat DATES | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/RUSSIA24.{}.inventory.json -P ./INVENTORIES/'

time cat DATES | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/1TV.{}.inventory.json -P ./INVENTORIES/'

time cat DATES | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/NTV.{}.inventory.json -P ./INVENTORIES/'

time cat DATES | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/BELARUSTV.{}.inventory.json -P ./INVENTORIES/'

rm -f IDS; find ./INVENTORIES/ -depth -name '*.json' | parallel --eta 'jq -r ".shows[].id" {} >> IDS'

wc -l IDS

rm -rf ./INVENTORIES/

This will compile a list of all IDs of transcribed broadcasts from those channels for those dates.
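The six near-identical wget lines above differ only in the channel name, so the list of inventory URLs can also be generated in one place. The following is a minimal sketch, assuming bash and GNU date as in the commands above; `inventory_urls` is a hypothetical helper name used for illustration, not part of any tool:

```shell
#!/usr/bin/env bash
# Base URL copied from the wget commands above.
BASE="https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer"

# inventory_urls START END CHANNEL...   (hypothetical helper)
# Prints one inventory URL per channel per day.
# START and END are YYYYMMDD and the range is inclusive.
inventory_urls() {
  local day="$1" end="$2"
  shift 2
  local channel
  # Same string comparison as the original loop: stop once day > end.
  while [[ ! "$day" > "$end" ]]; do
    for channel in "$@"; do
      printf '%s/%s.%s.inventory.json\n' "$BASE" "$channel" "$day"
    done
    day=$(date -d "$day + 1 day" "+%Y%m%d")   # GNU date syntax
  done
}

# Example: list the URLs for two channels over the last two days of 2022.
inventory_urls 20221230 20221231 ESPRESO RUSSIA1
```

Piping the output into `parallel --eta 'wget -q {} -P ./INVENTORIES/'` should then fetch the same files as the per-channel lines, with the channel list kept in a single place.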

To download their English machine translations (NOTE – this will download 1GB of text if you included the full set of dates and channels above):

mkdir TRANSLATEDTRANSCRIPTS

time cat IDS | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/{}.transcript.en.txt -P ./TRANSLATEDTRANSCRIPTS/'

If you want to analyze the original Russian- and Ukrainian-language transcripts, use (NOTE – this will download 2.3GB of text):

mkdir TRANSCRIPTS

time cat IDS | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/{}.transcript.txt -P ./TRANSCRIPTS/'
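Because `wget -q` suppresses all output, a failed or missing download leaves no trace. Given the availability described at the top of this post, English translations for three of the six channels cover only November and December 2022, so earlier IDs from those channels will presumably have no translated file. The sketch below shows one way to list the IDs that did not arrive; `missing_ids` and the `MISSING` output file are hypothetical names chosen for illustration:

```shell
#!/usr/bin/env bash
# missing_ids ID_FILE DIR SUFFIX   (hypothetical helper)
# Prints every ID from ID_FILE that has no non-empty file DIR/ID.SUFFIX.
missing_ids() {
  local id_file="$1" dir="$2" suffix="$3" id
  while IFS= read -r id; do
    [[ -z "$id" ]] && continue                 # skip blank lines
    # -s is true only if the file exists and is larger than zero bytes.
    [[ -s "$dir/$id.$suffix" ]] || printf '%s\n' "$id"
  done < "$id_file"
}

# Example: report IDs whose English translation is absent or empty.
if [[ -f IDS ]]; then
  missing_ids IDS ./TRANSLATEDTRANSCRIPTS transcript.en.txt > MISSING
  wc -l < MISSING
fi
```

The same function can be pointed at the original transcripts with `missing_ids IDS ./TRANSCRIPTS transcript.txt`, and the resulting list fed back into the wget command in place of `IDS` to retry only what is missing.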

Finally, for advanced content analysis that requires precise subsecond timecodes for each word and potential alternative transcriptions, you can also download the complete raw JSON output of the Speech-to-Text API for each broadcast (NOTE – this will download 37GB of JSON):

mkdir STTJSON

time cat IDS | parallel --eta 'wget -q https://storage.googleapis.com/data.gdeltproject.org/gdeltv3/iatv/visualexplorer/{}.stt.latest_long.json -P ./STTJSON/'

We hope this seeds new ideas for at-scale computational content analysis of this collection. Stay tuned for a series of "getting started" examples and tutorials!