Last month Google's Laurent Picard published this fascinating demo using Google's Video AI API to detect all of the camera changes ("shots" in the API's parlance) in a video and construct a brief summary clip showing a representative frame of each shot. What might it look like to apply this to an evening television news broadcast?

In other words, what would it look like to go through an evening news broadcast, identify each time the visual framing substantially changes, and construct a summary clip from the first frame of each of these major changes? If there are 10 seconds of airtime featuring Lester Holt speaking to the camera, followed by 20 seconds of a fixed camera on an on-location reporter, followed by 5 seconds of another angle, followed by another 10 seconds back in the studio, the end result would be a clip of four frames, one for each of these four shots. The 10 seconds of Lester Holt thus become a single frame, indicating that the imagery did not change during those 10 seconds. This ability to collapse long spans of visual narrative into a single frame per shot makes it possible to rapidly summarize the core visual clips that make up a television news broadcast.

The Visual Global Entity Graph 2.0 securely and non-consumptively analyzes each television news broadcast through the Video AI API, generating a count of how many distinct "shots" there are in each second of airtime. A value of 1 means the camera remains unchanged from the previous second of airtime, while a value greater than 1 indicates a camera change.
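To make the per-second counts concrete, here is a minimal Python sketch of the filtering rule described above. The `SecondRow` type, its field names and the example URLs are hypothetical stand-ins for rows of the dataset, not its actual schema; only the rule itself (keep seconds whose shot count is greater than 1) comes from the description.

```python
from typing import List, NamedTuple


class SecondRow(NamedTuple):
    """One second of airtime (hypothetical stand-in for a dataset row)."""
    timestamp: str          # sortable timestamp string, e.g. "2020-06-18 00:30:02"
    num_shot_changes: int   # number of distinct shots seen in this second
    thumbnail_url: str      # preview frame for this second


def shot_change_thumbnails(rows: List[SecondRow]) -> List[str]:
    """Return thumbnail URLs for seconds containing a camera change, in airtime order."""
    # A count of 1 means the camera did not change; anything greater is a change.
    changed = [row for row in rows if row.num_shot_changes > 1]
    changed.sort(key=lambda row: row.timestamp)
    return [row.thumbnail_url for row in changed]


# Made-up rows, deliberately out of order to show the sort.
rows = [
    SecondRow("2020-06-18 00:30:12", 2, "https://example.org/frame-12.jpg"),
    SecondRow("2020-06-18 00:30:02", 1, "https://example.org/frame-02.jpg"),
    SecondRow("2020-06-18 00:30:03", 2, "https://example.org/frame-03.jpg"),
    SecondRow("2020-06-18 00:30:04", 1, "https://example.org/frame-04.jpg"),
]
selected = shot_change_thumbnails(rows)
print(selected)
```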

Thus, compiling a list of all of the seconds in a given news broadcast where there is a shot change is as simple as:

SELECT iaThumbnailUrl FROM `gdelt-bq.gdeltv2.vgegv2_iatv` WHERE DATE(date) = "2020-06-18" AND iaShowId = 'KNTV_20200618_003000_NBC_Nightly_News_With_Lester_Holt' AND numShotChanges > 1 ORDER BY date ASC

Exporting the results yields a CSV file with one thumbnail image URL per row, one for each second of airtime in which there was a shot change.

Save this into a file called THUMBS.TXT.
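Depending on how the results are exported, the CSV may begin with a header row containing the column name, and values may be wrapped in quotes. Since every line of THUMBS.TXT is later treated as a URL to download, it can help to strip anything that is not a URL first. The following is a minimal Python sketch under that assumption; `clean_url_lines` is a hypothetical helper name, and the sample lines are invented.

```python
from typing import Iterable, List


def clean_url_lines(lines: Iterable[str]) -> List[str]:
    """Keep only lines that look like URLs, dropping headers, blanks and quotes."""
    urls = []
    for line in lines:
        # Remove surrounding whitespace, then any CSV quoting around the value.
        candidate = line.strip().strip('"')
        if candidate.startswith(("http://", "https://")):
            urls.append(candidate)
    return urls


# Invented sample resembling an export with a header row and a blank line.
sample = [
    "iaThumbnailUrl\n",
    "https://example.org/a.jpg\n",
    "\n",
    '"https://example.org/b.jpg"\n',
]
cleaned = clean_url_lines(sample)
print("\n".join(cleaned))
```

To apply this to the real file, read THUMBS.TXT with `open("THUMBS.TXT")`, pass the file object to `clean_url_lines`, and write the returned URLs back out one per line.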

Then use the following GNU parallel command to download those frames to local disk (the ./CACHE directory must already exist). Since the frames are handed to ffmpeg in the next step, they must be renamed as sequentially numbered images. The advanced "--rpl" option of parallel defines a replacement string that zero-pads the sequence number, which preserves the correct ordering and matches the filename format ffmpeg expects. (Note that there is an error in parallel's documentation in that "seq()" actually needs to be "$job->seq()").

time cat THUMBS.TXT | parallel --eta --rpl '{0#} $f=1+int("".(log(total_jobs())/log(10))); $_=sprintf("%0${f}d",$job->seq())' 'curl -s {} -o ./CACHE/{0#}.jpg'
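The padding logic in the command above is compact, so here is the same idea as a minimal Python sketch: the width is the number of decimal digits in the total job count, and each frame is named by its zero-padded sequence number starting at 1. The function names are hypothetical. The width is taken from the length of the decimal string rather than from a logarithm, which gives the same value as 1+int(log10(n)) while sidestepping floating-point rounding at exact powers of ten.

```python
from typing import List


def pad_width(total_jobs: int) -> int:
    """Number of digits needed to print the largest sequence number."""
    return len(str(total_jobs))


def frame_filenames(total_jobs: int) -> List[str]:
    """Zero-padded filenames 1..total_jobs, so lexical order equals numeric order."""
    width = pad_width(total_jobs)
    return [f"{seq:0{width}d}.jpg" for seq in range(1, total_jobs + 1)]


def ffmpeg_pattern(total_jobs: int) -> str:
    """printf-style input pattern matching the filenames above."""
    return f"%0{pad_width(total_jobs)}d.jpg"


names = frame_filenames(12)
print(names[0], names[-1])   # first and last filename for a 12-frame run
print(ffmpeg_pattern(250))   # pattern to pass to ffmpeg for a 250-frame run
```

The pattern matters because ffmpeg's numbered-image input must use the same width as the files on disk: a broadcast with fewer than 100 or more than 999 shot changes would produce filenames that a fixed three-digit pattern does not match.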

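We can then convert these frames into the final preview movie using ffmpeg, at four frames per second. The "%03d" pattern assumes three-digit filenames, which is what the padding above produces for 100 to 999 frames; adjust it if the broadcast yields a different count: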

time ffmpeg -r 4 -i ./CACHE/%03d.jpg -vcodec libx264 -y -vf "pad=ceil(iw/2)*2:ceil(ih/2)*2,scale=iw*2:ih*2" -an video.mp4
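The arithmetic in the filter chain can be checked by hand. The pad step rounds each dimension up to the next even number (libx264 with its usual pixel formats needs even dimensions), and the scale step then doubles the padded size. With "-r 4" the clip plays four frames per second. Below is a minimal Python sketch of those calculations; the function names and the example frame size are illustrative assumptions, not values taken from the broadcast.

```python
import math
from typing import Tuple


def padded_even(n: int) -> int:
    """Mirror of ceil(n/2)*2: round up to the nearest even number."""
    return math.ceil(n / 2) * 2


def output_size(width: int, height: int, factor: int = 2) -> Tuple[int, int]:
    """Frame size after padding to even dimensions and then scaling."""
    # The scale filter sees the padded frame, so it multiplies the padded size.
    return padded_even(width) * factor, padded_even(height) * factor


def clip_seconds(num_frames: int, fps: int = 4) -> float:
    """Duration of the summary clip at the given frame rate."""
    return num_frames / fps


size = output_size(321, 181)   # hypothetical odd-sized thumbnail
duration = clip_seconds(120)   # hypothetical count of shot-change frames
print(size, duration)
```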

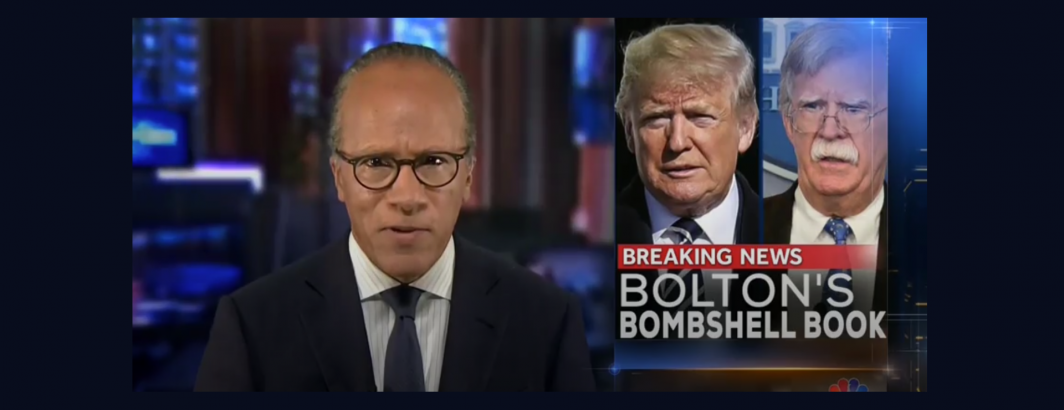

The final summary clip (compare with the actual news broadcast to see how it correctly identifies each of the visual shot changes):