Using our new embedding visualization template, let's revisit our multilingual embedding experiment and visualize how each embedding model clusters our sample sentences. We use the same set of models as before: the English-only USEv4, the larger English-only USEv5-Large, USEv3-Multilingual and the larger USEv3-Multilingual-Large (both supporting 16 languages: Arabic, Chinese-simplified, Chinese-traditional, English, French, German, Italian, Japanese, Korean, Dutch, Polish, Portuguese, Spanish, Thai, Turkish, Russian), the 100-language LaBSEv2 model optimized for translation-pair scoring, and the Vertex AI Embeddings for Text API.

sentences = [

"A major Texas hospital system has reported its first case of the lambda COVID-19 variant, as the state reels from the rampant delta variant Houston Methodist Hospital, which operates eight hospitals in its network, said the first lambda case was confirmed Monday Houston Methodist had a little over 100 COVID-19 patients across the hospital system last week. That number rose to 185 Monday, with a majority of those infected being unvaccinated, according to a statement released by the hospital Monday",

"得克萨斯州一家主要医院系统报告了第一例 lambda COVID-19 变种病例,因为该州从猖獗的三角洲变种中解脱出来 休斯顿卫理公会医院在其网络中运营着八家医院,并表示周一确认了第一例 lambda 病例 休斯顿卫理公会 上周整个医院系统有 100 多名 COVID-19 患者。 根据医院周一发布的一份声明,该数字周一上升至 185 人,其中大多数感染者未接种疫苗",

"أبلغ أحد أنظمة المستشفيات الكبرى في تكساس عن أول حالة إصابة بفيروس لامدا COVID-19 ، حيث قالت الولاية من مستشفى هيوستن ميثوديست المتغير المنتشر في دلتا ، والتي تشغل ثمانية مستشفيات في شبكتها ، إن أول حالة لامدا تأكدت يوم الاثنين من هيوستن ميثوديست. ما يزيد قليلاً عن 100 مريض COVID-19 عبر نظام المستشفى الأسبوع الماضي. وارتفع هذا العدد إلى 185 يوم الاثنين ، مع عدم تلقيح غالبية المصابين ، بحسب بيان صادر عن المستشفى يوم الاثنين.",

"ကြီးမားသောတက္ကဆက်ဆေးရုံစနစ်တစ်ခုသည် ၎င်း၏ကွန်ရက်ရှိဆေးရုံရှစ်ခုကိုလည်ပတ်နေသည့် lambda ရောဂါကို တနင်္လာနေ့တွင် Houston Methodist ဆေးရုံမှပြန်လည်စုစည်းထားသောကြောင့် ပြည်နယ်သည် lambda COVID-19 မျိုးကွဲ၏ပထမဆုံးဖြစ်ရပ်ကို အစီရင်ခံတင်ပြလိုက်ပါသည်။ ပြီးခဲ့သည့်အပတ်က ဆေးရုံစနစ်တွင် COVID-19 လူနာ ၁၀၀ ကျော် အနည်းငယ်ရှိသည်။ အဆိုပါ အရေအတွက်သည် တနင်္လာနေ့တွင် ၁၈၅ ဦးအထိ မြင့်တက်လာခဲ့ပြီး ရောဂါပိုးကူးစက်ခံရသူ အများစုမှာ ကာကွယ်ဆေးမထိုးရသေးကြောင်း တနင်္လာနေ့က ဆေးရုံမှ ထုတ်ပြန်သော ထုတ်ပြန်ချက်တစ်ခုအရ သိရသည်။",

"【#他撑伞蹲了很久让女孩知道世界很美好#】近日,湖南长沙,一女子因感情问题一时想不开,独自坐在楼顶哭泣。消防员赶赴,温柔蹲在女孩身旁安抚情绪,夜色沉沉,天空下起小雨,救援人员为其撑伞暖心守护,经过近一小时的劝导,将女孩平安带下15楼天台。ps:答应我们,好好爱自己,世间还有很多美好~#女孩感情受挫消防员撑伞守护# 中国消防的微博视频",

"【#He Held an Umbrella and Squatted for a Long Time to Let the Girl Know that the World is Beautiful#】Recently, in Changsha, Hunan province, a woman, overwhelmed by relationship problems, sat alone on the rooftop crying. Firefighters rushed to the scene, gently squatting beside the girl to comfort her. The night was dark, and drizzling rain started to fall from the sky. The rescue team held an umbrella to protect and warm her heart. After nearly an hour of persuasion, they safely brought the girl down from the 15th-floor rooftop. PS: Promise us, love yourself well, there is still much beauty in the world ~ #Girl's Emotions Distressed, Firefighters Holding an Umbrella and Guarding# Video footage from the Chinese Fire Brigade's Weibo account.",

"一堂童画课,用色彩传递温暖!送困境儿童 美育盒子 ,送自闭症儿童艺术疗愈课程。让我们与@延参法师 一堂童画课公益发起人,在这个充满爱与温暖的日子里,一起感受艺术的魅力,用童心绘制美好,为公益事业贡献一份力量!@一堂童画课 北京市行远公益基金会的微博视频",

"A children's painting class, conveying warmth through colors! Sending Art Education Boxes to children in difficult situations, and providing art therapy courses for children with autism. Let us, together with Master Yan Can, the initiator of the public welfare project A Children's Painting Class, experience the charm of art on this loving and warm day. Let's use childlike hearts to create beauty and contribute to the cause of public welfare! @A Children's Painting Class, a video on the official Weibo account of Beijing Xingyuan Public Welfare Foundation."

"Art can be a very powerful tool for children's therapy.",

"艺术可以成为儿童治疗的一个非常强大的工具。"

]
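The matrices in the rest of this section have diagonals of roughly 1.0, which is consistent with pairwise cosine similarity between sentence embeddings. As a minimal sketch of how such a matrix could be computed (the function names and the toy vectors are illustrative assumptions, not the code used in the original experiment):

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Assumption: neither vector is all zeros (would divide by zero).
    return dot / (norm_a * norm_b)

def similarity_matrix(embeddings):
    """Pairwise cosine similarity for a list of embedding vectors."""
    return [[cosine_similarity(a, b) for b in embeddings] for a in embeddings]

# Toy 3-dimensional vectors standing in for real model embeddings.
toy_embeddings = [
    [1.0, 0.0, 0.0],
    [2.0, 0.0, 0.0],  # same direction as the first vector
    [0.0, 3.0, 0.0],  # orthogonal to the first two
]
toy_matrix = similarity_matrix(toy_embeddings)
for row in toy_matrix:
    print([round(value, 3) for value in row])
```

With real models, each inner list would be the embedding a model returns for one entry of sentences, so a nine-sentence input yields a 9x9 matrix like those below.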

Vertex AI

Despite supporting only English at this time, Vertex generally positioned the Covid-related articles away from the others, though it still left them highly stratified (their pairwise similarities range from roughly 0.71 to 0.88). The most likely reason is that each of the machine translations retained English words (such as "COVID-19") that overlap with the English version. Vertex improperly clustered the remaining English and Chinese articles by language rather than by topic, lending credence to the Covid clustering being merely an artifact of the surviving English words:

[[0.99999639 0.75403089 0.70781558 0.84557946 0.56751408 0.5815553 0.51876257 0.56680003 0.5870215 ]
 [0.75403089 0.99999719 0.88302315 0.80451682 0.66224435 0.57870722 0.61908054 0.58978685 0.62672988]
 [0.70781558 0.88302315 0.99998457 0.72829008 0.68110709 0.58630535 0.63151544 0.58216433 0.62582492]
 [0.84557946 0.80451682 0.72829008 0.99999463 0.62038087 0.57855157 0.64729217 0.58224791 0.63639022]
 [0.56751408 0.66224435 0.68110709 0.62038087 0.99999595 0.60274483 0.76304865 0.59012055 0.72215898]
 [0.5815553 0.57870722 0.58630535 0.57855157 0.60274483 0.99999624 0.56453708 0.66506404 0.62457632]
 [0.51876257 0.61908054 0.63151544 0.64729217 0.76304865 0.56453708 0.99999786 0.57872791 0.75460535]
 [0.56680003 0.58978685 0.58216433 0.58224791 0.59012055 0.66506404 0.57872791 0.9999968 0.57894322]
 [0.5870215 0.62672988 0.62582492 0.63639022 0.72215898 0.62457632 0.75460535 0.57894322 0.99999986]]
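The clustering-by-language effect can be checked directly from four entries of the Vertex matrix above. The sketch below assumes that rows 4 through 7 correspond, in order, to the Chinese and English versions of the first Weibo post followed by the Chinese and English versions of the second; the variable and function names are illustrative only:

```python
# Scores copied from the Vertex AI matrix above, keyed by (row, column).
vertex_scores = {
    (4, 5): 0.60274483,  # Chinese post 1 vs. its English version
    (6, 7): 0.57872791,  # Chinese post 2 vs. its English version
    (4, 6): 0.76304865,  # Chinese post 1 vs. Chinese post 2
    (5, 7): 0.66506404,  # English post 1 vs. English post 2
}

translation_pairs = [(4, 5), (6, 7)]    # same content, different language
same_language_pairs = [(4, 6), (5, 7)]  # different content, same language

def mean_score(scores, pairs):
    """Average similarity over the given (row, column) pairs."""
    return sum(scores[pair] for pair in pairs) / len(pairs)

translation_mean = mean_score(vertex_scores, translation_pairs)
same_language_mean = mean_score(vertex_scores, same_language_pairs)

print(f"translation pairs:   {translation_mean:.3f}")
print(f"same-language pairs: {same_language_mean:.3f}")
```

Under that row assignment, the unrelated same-language posts score higher on average than the translation pairs, which is the pattern described above.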

Universal Sentence Encoder

USE's English-only embedding largely scatters the sentences in a meaningless jumble:

[[ 9.99999642e-01 1.35287493e-01 6.16897345e-02 2.78530627e-01 1.16169766e-01 1.64749399e-01 -2.30342187e-02 6.09423071e-02 1.92657392e-02]
 [ 1.35287493e-01 9.99999821e-01 1.50608957e-01 6.06229663e-01 4.02009338e-02 8.14152062e-02 9.18879360e-02 -4.55342233e-04 9.51746702e-02]
 [ 6.16897345e-02 1.50608957e-01 1.00000000e+00 3.82079959e-01 -2.54516676e-02 2.38140687e-01 1.20395131e-01 9.63330045e-02 -6.63711037e-03]
 [ 2.78530627e-01 6.06229663e-01 3.82079959e-01 9.99999881e-01 7.70147517e-02 1.18573606e-01 1.17261171e-01 4.14442755e-02 7.67353028e-02]
 [ 1.16169766e-01 4.02009338e-02 -2.54516676e-02 7.70147517e-02 9.99999881e-01 9.50272754e-03 1.21947825e-01 -3.84482443e-02 3.55258048e-01]
 [ 1.64749399e-01 8.14152062e-02 2.38140687e-01 1.18573606e-01 9.50272754e-03 9.99999762e-01 -5.42870834e-02 3.02406967e-01 6.13755360e-02]
 [-2.30342187e-02 9.18879360e-02 1.20395131e-01 1.17261171e-01 1.21947825e-01 -5.42870834e-02 9.99999881e-01 7.06044361e-02 -5.75780310e-03]
 [ 6.09423071e-02 -4.55342233e-04 9.63330045e-02 4.14442755e-02 -3.84482443e-02 3.02406967e-01 7.06044361e-02 9.99999881e-01 3.12432982e-02]
 [ 1.92657392e-02 9.51746702e-02 -6.63711037e-03 7.67353028e-02 3.55258048e-01 6.13755360e-02 -5.75780310e-03 3.12432982e-02 1.00000024e+00]]

Universal Sentence Encoder Large

USE Large yields similar results:

[[ 0.9999998 0.28019005 0.02825021 0.48163718 -0.01194308 0.0520324 -0.02493934 0.12363835 -0.08127209]
 [ 0.28019005 0.9999999 0.26236042 0.4949661 0.22146006 0.02746031 0.33639836 -0.03582553 0.05889804]
 [ 0.02825021 0.26236042 0.9999996 0.2676396 0.07686249 0.12225236 0.23801571 0.01799372 0.07812245]
 [ 0.48163718 0.4949661 0.2676396 0.9999996 0.09714167 0.13955805 0.3252697 0.09954128 0.06763986]
 [-0.01194308 0.22146006 0.07686249 0.09714167 0.9999999 0.05129759 0.3400574 -0.06489341 0.27619457]
 [ 0.0520324 0.02746031 0.12225236 0.13955805 0.05129759 0.9999998 0.13055068 0.36411536 -0.04815776]
 [-0.02493934 0.33639836 0.23801571 0.3252697 0.3400574 0.13055068 0.99999964 0.06037875 0.16036873]
 [ 0.12363835 -0.03582553 0.01799372 0.09954128 -0.06489341 0.36411536 0.06037875 1.0000002 -0.0660987 ]
 [-0.08127209 0.05889804 0.07812245 0.06763986 0.27619457 -0.04815776 0.16036873 -0.0660987 1.0000002 ]]

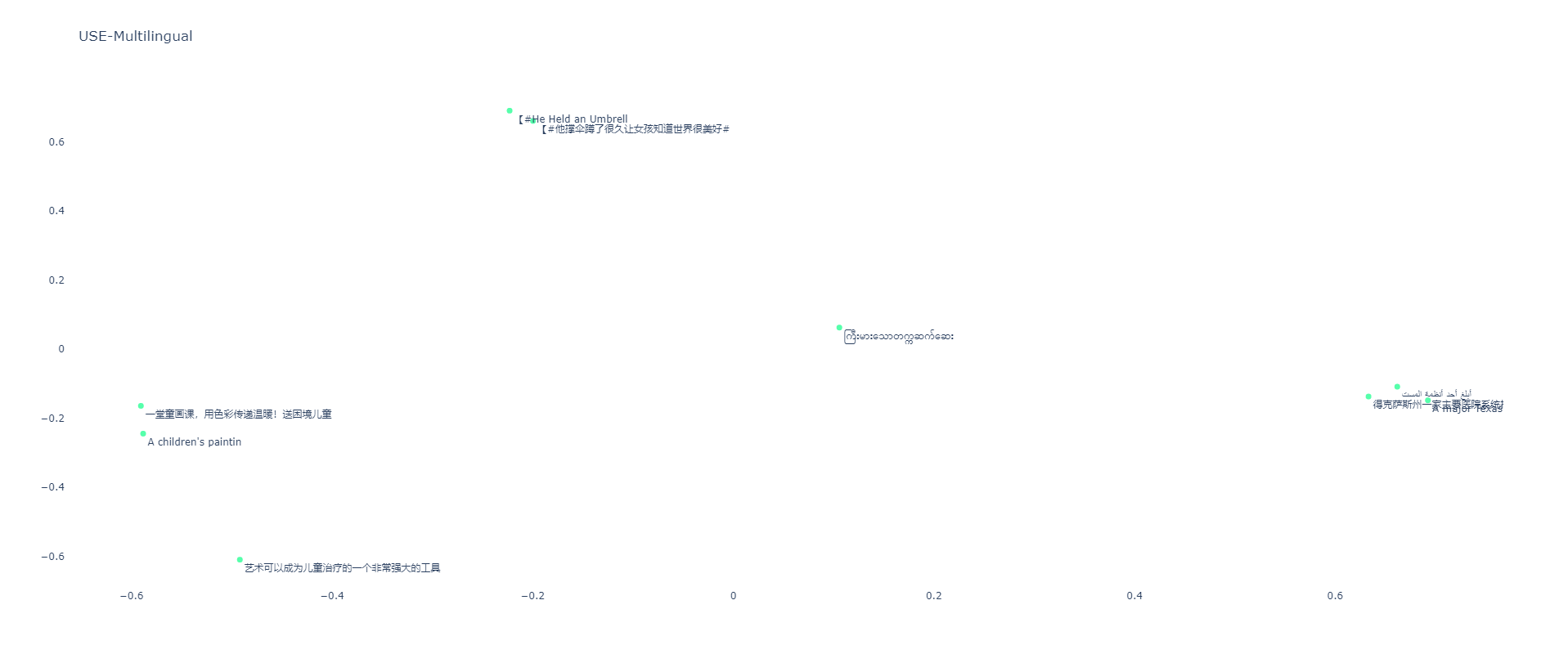

Universal Sentence Encoder Multilingual

In contrast, USE Multilingual correctly clusters the Covid-related articles, with the exception of the Burmese version (a language it does not support), and does a reasonable job of clustering the English and Chinese versions of the translated texts together:

[[ 1. 0.7992381 0.85700786 0.22502097 0.14996758 0.11595267 -0.01415733 0.04852479 -0.02586996]
 [ 0.7992381 1. 0.70707387 0.25284973 0.16496974 0.09333448 0.05413792 0.05633178 -0.04936308]
 [ 0.85700786 0.70707387 0.9999996 0.19829042 0.1672956 0.14345802 -0.00956287 0.05424859 -0.04476817]
 [ 0.22502097 0.25284973 0.19829042 1. 0.20754763 0.12753576 0.13643257 0.09576015 0.00449403]
 [ 0.14996758 0.16496974 0.1672956 0.20754763 0.99999976 0.7369143 0.31683004 0.29483852 -0.01595309]
 [ 0.11595267 0.09333448 0.14345802 0.12753576 0.7369143 0.9999999 0.2562063 0.29808474 -0.03728893]
 [-0.01415733 0.05413792 -0.00956287 0.13643257 0.31683004 0.2562063 1. 0.754457 0.35469553]
 [ 0.04852479 0.05633178 0.05424859 0.09576015 0.29483852 0.29808474 0.754457 0.9999999 0.5032175 ]
 [-0.02586996 -0.04936308 -0.04476817 0.00449403 -0.01595309 -0.03728893 0.35469553 0.5032175 1.0000002 ]]

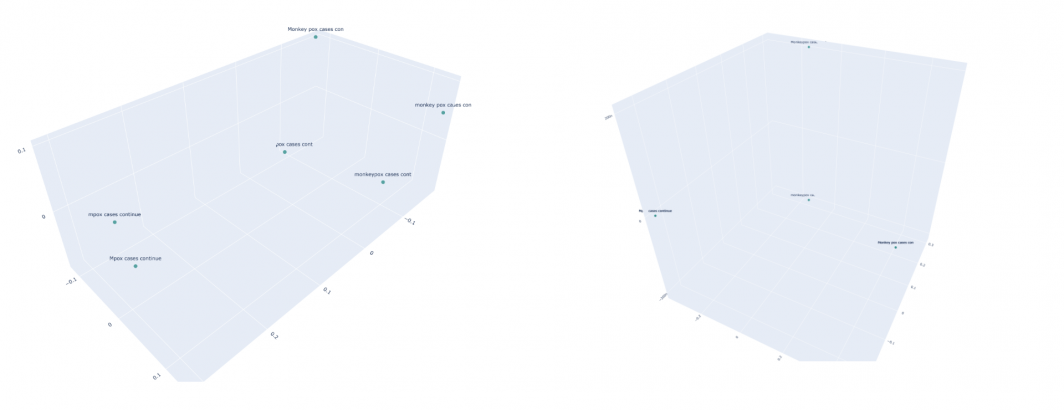

Universal Sentence Encoder Multilingual Large

The Large edition actually performs worse than its smaller counterpart:

[[1. 0.81812036 0.7089486 0.45337695 0.1748184 0.08517185 0.04017882 0.06708082 0.02093087]
 [0.81812036 0.99999994 0.71610725 0.42675513 0.15524334 0.07584821 0.05492434 0.04840575 0.04943867]
 [0.7089486 0.71610725 0.99999994 0.33209145 0.1479119 0.07657017 0.06227684 0.05057132 0.01748172]
 [0.45337695 0.42675513 0.33209145 0.9999995 0.07582554 0.0379662 0.06045617 0.04798054 0.04103216]
 [0.1748184 0.15524334 0.1479119 0.07582554 1. 0.7732414 0.25287962 0.3157473 0.07165246]
 [0.08517185 0.07584821 0.07657017 0.0379662 0.7732414 0.99999976 0.19092305 0.36365843 0.05748819]
 [0.04017882 0.05492434 0.06227684 0.06045617 0.25287962 0.19092305 0.99999976 0.72703046 0.3920198 ]
 [0.06708082 0.04840575 0.05057132 0.04798054 0.3157473 0.36365843 0.72703046 0.99999976 0.40681928]
 [0.02093087 0.04943867 0.01748172 0.04103216 0.07165246 0.05748819 0.3920198 0.40681928 0.9999999 ]]

LaBSE

Given that LaBSE is optimized for translation pairs and supports Burmese, it does the best at grouping bitexts. Notably, however, it scores the topically similar (but not exactly translated) "艺术可以成为儿童治疗的一个非常强大的工具。" as largely unrelated to the rest of the art-related cluster. This shows the influence of task optimization: in accordance with its design, a bitext-optimized model scores translation pairs as strongly similar, but scores texts that overlap topically without being translations of each other as far less similar:

[[1. 0.9405081 0.9275924 0.8841646 0.246873 0.2763008 0.17410922 0.2444013 0.12730592]
 [0.9405081 1.0000001 0.9076841 0.85752714 0.26318815 0.29913837 0.20596941 0.27494198 0.11301407]
 [0.9275924 0.9076841 0.9999999 0.9011461 0.23458755 0.27642542 0.17776132 0.26297644 0.19807708]
 [0.8841646 0.85752714 0.9011461 0.9999999 0.2509857 0.29914016 0.1656245 0.28355375 0.26415676]
 [0.246873 0.26318815 0.23458755 0.2509857 1. 0.9058601 0.4357208 0.48990172 0.14693527]
 [0.2763008 0.29913837 0.27642542 0.29914016 0.9058601 1.0000001 0.41394848 0.5543987 0.18645877]
 [0.17410922 0.20596941 0.17776132 0.1656245 0.4357208 0.41394848 1.0000004 0.8389604 0.30014998]
 [0.2444013 0.27494198 0.26297644 0.28355375 0.48990172 0.5543987 0.8389604 1.0000001 0.437561 ]
 [0.12730592 0.11301407 0.19807708 0.26415676 0.14693527 0.18645877 0.30014998 0.437561 1. ]]
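To see where the final sentence lands relative to everything else, the last row of the LaBSE matrix above can be sorted into a nearest-neighbor ranking. This is a small sketch with a hypothetical helper name; it assumes index 8 is the sentence itself and that the other indices follow the row order of the matrix:

```python
# Last row of the LaBSE matrix above: similarity of the final sentence
# to each of the nine inputs (index 8 is the sentence itself).
labse_last_row = [
    0.12730592, 0.11301407, 0.19807708, 0.26415676,
    0.14693527, 0.18645877, 0.30014998, 0.437561, 1.0,
]

def rank_neighbors(row, self_index):
    """Return (index, score) pairs sorted from most to least similar,
    skipping the entry that compares the sentence with itself."""
    candidates = [(score, i) for i, score in enumerate(row) if i != self_index]
    return [(i, score) for score, i in sorted(candidates, reverse=True)]

ranking = rank_neighbors(labse_last_row, self_index=8)
for index, score in ranking:
    print(index, round(score, 3))
```

The two art-class rows (6 and 7) still rank first, but with scores well below the 0.84 to 0.94 range LaBSE assigns to the translation pairs, which fits the task-optimization explanation above.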