At GDELT's scale, even the smallest processing pipelines can span the globe. One challenge with geographically distributed workflows spread over large numbers of VMs and physical machines is that, while the underlying VM clocks are synchronized globally at the platform level within the precision bounds of these workflows, data-parallel processes are by their nature highly independent in their execution schedules. Because these processes are only loosely scheduled, single-node or regional cluster overpressure, variable latency, multi-node loss, spawning latency and other error factors can cause them to run slightly out of sync during periods of high stress. Execution queues transparently mediate the workflow aspects of these issues, but one area that doesn't always receive sufficient attention is the role that cache mismatches can play in creating challenges during such times.

For example, imagine a distributed workflow in which data is streamed into GCS via a set of ingest nodes and then distributed out globally for processing. Each processing node maintains a variety of caches to reduce computation and network requirements. These caches are independent, but some require synchronization due to downstream dependencies in serialized pipelines. During normal operation this isn't an issue, but during periods of extreme load, as fleet management systems cope with massive, unexpected, sudden-onset surges, the synchronization of these caches can falter, leading nodes to perform redundant work that in turn slows the system further.

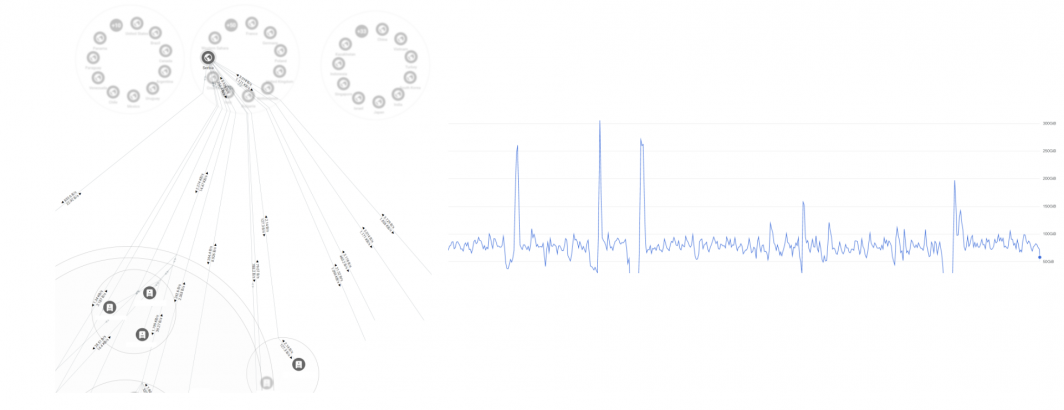

To address these challenges, we are upgrading the first wave of our GEN5 video processing infrastructure to a new distributed cache architecture that uses cascading out-of-band (OOB) metadata attached to each cache that flows from the ingest nodes downstream through the entire processing pipeline globally. The ingest nodes, as the ultimate arbiters of what is available to process, attach their cache status information to the initial queuing stream. This is then passed transparently downstream, with each successive stage using this central "cache clock" to manage its local caches.
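
To make the idea concrete, here is a minimal Python sketch of a cascading cache clock. It is purely illustrative and is not GDELT's implementation: the `Task`, `IngestNode` and `StageNode` names, the integer clock, and the "clear the whole cache when the clock advances" policy are all assumptions chosen to keep the example small.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict


@dataclass(frozen=True)
class Task:
    payload: str
    cache_clock: int  # hypothetical OOB metadata; only the ingest node sets it


class IngestNode:
    """Hypothetical ingest node: the sole authority on the cache clock."""

    def __init__(self) -> None:
        self.clock = 0

    def publish(self, payload: str, refresh: bool = False) -> Task:
        # Advance the clock whenever downstream caches should be rebuilt.
        if refresh:
            self.clock += 1
        return Task(payload, self.clock)


class StageNode:
    """Hypothetical processing stage with a local cache keyed by payload."""

    def __init__(self, work: Callable[[str], str]) -> None:
        self.work = work
        self.local_clock = -1
        self.cache: Dict[str, str] = {}
        self.computed = 0  # counts cache misses, i.e. real work performed

    def process(self, task: Task) -> Task:
        # A newer clock on the incoming task means the local cache is stale.
        if task.cache_clock > self.local_clock:
            self.cache.clear()
            self.local_clock = task.cache_clock
        if task.payload not in self.cache:
            self.cache[task.payload] = self.work(task.payload)
            self.computed += 1
        # Pass the clock downstream unchanged; only the payload is replaced.
        return replace(task, payload=self.cache[task.payload])


ingest = IngestNode()
decode = StageNode(str.upper)
index = StageNode(lambda s: s + "!")

tasks = [
    ingest.publish("clip"),
    ingest.publish("clip"),                # served from cache at each stage
    ingest.publish("clip", refresh=True),  # clock advances, caches rebuild
]
results = [index.process(decode.process(t)) for t in tasks]
```

In this sketch no stage ever consults a peer or a wall clock to decide whether its cache is current. The decision rides along with the work itself, so a node that falls behind under load simply catches up when the next task arrives.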

This new architecture has already completely eliminated our synchronization issues, even under extreme load, reflecting the outsized impact even small changes can have in massively parallel distributed architectures.