Last week we showed how Laurent Picard's visual shot summary demo could be applied to the shot change catalog of an evening news broadcast to generate a brief visual summary video. To recap, it uses the shot change detection of Google's Video AI API to flag each time the visual "shot" changes, selects the first frame of each shot, and strings those frames together into a summary movie of the "scenes" that make up a given broadcast. The result offers a rapid way to triage the core stories of a broadcast.

Repeating the same code as before, we selected the February 1, 2020 broadcast of ABC World News Tonight With David Muir (its show ID and timestamps are in UTC, so it falls on 2020-02-02 in the query):

SELECT iaThumbnailUrl FROM `gdelt-bq.gdeltv2.vgegv2_iatv` WHERE DATE(date) = "2020-02-02" AND iaShowId = 'KGO_20200202_000000_ABC_World_News_Tonight_With_David_Muir' AND numShotChanges > 1 ORDER BY date ASC
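The post does not say how the query results were exported to a file. One minimal sketch, assuming the Google Cloud SDK's bq command-line tool is installed and authenticated, is a hypothetical export_thumbs function that runs the same query with named parameters and strips the CSV header row. The --max_rows flag matters because bq query returns only 100 rows by default, which would silently truncate a broadcast with more shots than that.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): export the thumbnail URLs
# for one broadcast to a text file, one URL per line, using the bq CLI.
set -o pipefail

# export_thumbs DAY SHOW_ID OUTFILE
export_thumbs() {
  local day="$1" show_id="$2" out="$3"
  # --format=csv prints a header row first; tail -n +2 drops it.
  # --max_rows raises bq's default limit of 100 result rows.
  bq --quiet --format=csv query --use_legacy_sql=false --max_rows=100000 \
    --parameter="day:DATE:${day}" \
    --parameter="show_id:STRING:${show_id}" \
    'SELECT iaThumbnailUrl FROM `gdelt-bq.gdeltv2.vgegv2_iatv`
     WHERE DATE(date) = @day AND iaShowId = @show_id AND numShotChanges > 1
     ORDER BY date ASC' \
    | tail -n +2 > "$out"
}
```

For example, `export_thumbs 2020-02-02 KGO_20200202_000000_ABC_World_News_Tonight_With_David_Muir THUMBS.TXT` would write the list of URLs in the form the download command expects. Query parameters are used instead of string interpolation so the show ID never has to be quoted inside the SQL.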

We saved the resulting thumbnail URLs to a file called THUMBS.TXT, one per line, and created a subdirectory called "CACHE". We then downloaded all of the frames in parallel, naming each file by its zero-padded position in the list:

time cat THUMBS.TXT | parallel --eta --rpl '{0#} $f=1+int("".(log(total_jobs())/log(10))); $_=sprintf("%0${f}d",$job->seq())' 'curl -s {} -o ./CACHE/{0#}.jpg'
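The `--rpl` option above defines `{0#}` as the job's sequence number, zero-padded to the number of digits in the total job count; the `"".(...)` string conversion presumably guards against floating-point results such as 2.9999999999999996 for log(1000)/log(10). A minimal bash sketch of the same padding rule, using a hypothetical `pad_seq` helper that takes the digit count directly from the length of the total:

```shell
#!/usr/bin/env bash
# pad_seq SEQ TOTAL
# Prints SEQ zero-padded to as many digits as TOTAL has, which is what the
# '{0#}' replacement string computes as 1 + int(log10(total_jobs)).
pad_seq() {
  local seq="$1" total="$2"
  local width=${#total}   # number of digits in TOTAL
  printf "%0${width}d\n" "$seq"
}
```

With this rule, a list of 42 thumbnails yields files 01.jpg through 42.jpg and a list of 250 yields 001.jpg through 250.jpg, so the padding width depends on the length of THUMBS.TXT.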

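Finally, we strung them together into the summary video at four frames per second, padding each frame to even dimensions and doubling its size. The %03d input pattern assumes three-digit file names, which the download step produces only when THUMBS.TXT holds between 100 and 999 URLs: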

time ffmpeg -r 4 -i ./CACHE/%03d.jpg -vcodec libx264 -y -vf "pad=ceil(iw/2)*2:ceil(ih/2)*2,scale=iw*2:ih*2" -an video.mp4
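To avoid hard-coding the %03d padding width in the command above, a minimal sketch, assuming THUMBS.TXT holds exactly one newline-terminated URL per line with no blank lines (so its line count equals GNU parallel's total job count), derives the pattern from the list with a hypothetical frame_pattern helper and wraps the original ffmpeg command in a hypothetical make_summary function.

```shell
#!/usr/bin/env bash
# frame_pattern LISTFILE
# Prints the image2 input pattern matching the names produced by the download
# step, e.g. %02d.jpg for 42 URLs or %03d.jpg for 250 URLs.
frame_pattern() {
  local list="$1" total
  total=$(( $(wc -l < "$list") ))   # arithmetic expansion drops wc's padding
  printf '%%0%dd.jpg\n' "${#total}"
}

# make_summary LISTFILE CACHEDIR OUTFILE
# The original ffmpeg command, with the input pattern derived from the list.
make_summary() {
  local list="$1" dir="$2" out="$3"
  ffmpeg -r 4 -i "${dir}/$(frame_pattern "$list")" -vcodec libx264 -y \
    -vf "pad=ceil(iw/2)*2:ceil(ih/2)*2,scale=iw*2:ih*2" -an "$out"
}
```

For this broadcast, `make_summary THUMBS.TXT ./CACHE video.mp4` would run the same encode as the command above, while still working for shorter or longer lists.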

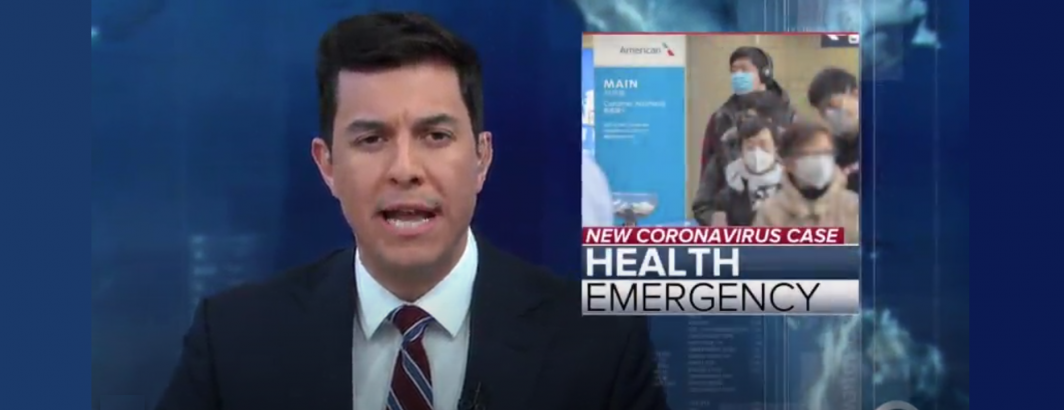

The final summary clip (compare it with the actual news broadcast to see how it correctly identifies each of the visual shot changes):