Just over six and a half years ago, in collaboration with the Internet Archive's TV News Archive, we launched the Television Explorer 2.0, which transformed how journalists and scholars use the Archive to understand how television news channels are covering the key issues of the moment. The original 2018 Explorer focused on keyword search of closed captioning, allowing researchers to trace how much attention specific words and topics received across channels and the contexts in which they appeared. This was followed in 2020 by the launch of the TV AI Explorer, which for the first time made it possible to visually search a subset of the Archive's channels. Almost as an afterthought, we also added keyword search of onscreen text using OCR. We originally expected the TV AI Explorer's visual search features to prove the most popular, since they made it possible to search for specific objects and activities over time, such as the density of military or police imagery, masks, natural disasters and the like.

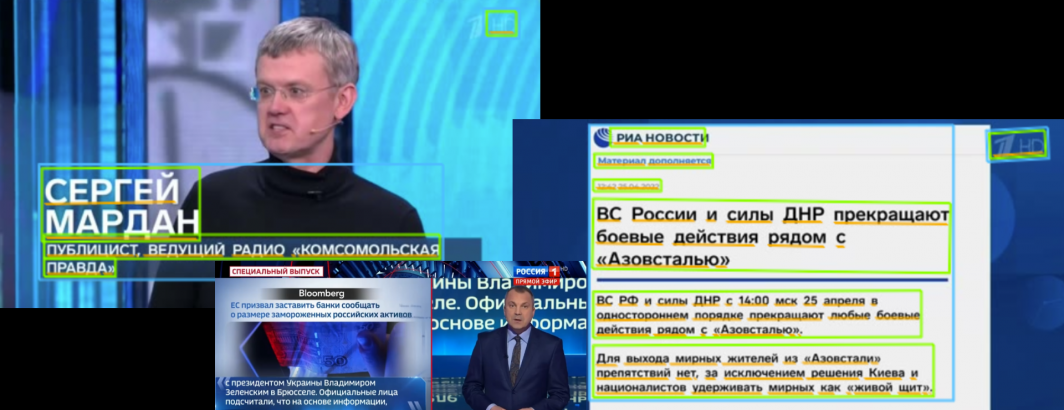

In reality, journalists and scholars turned out to be far more interested in onscreen text search as a way of searching the editorialized summaries in the "lower third" chyrons that have become a fixture of television news in many countries. As just one example of why this information matters, a live presidential speech from the White House might mention the president's name and the White House only at the start of the broadcast, while the chyron will typically repeat these key details throughout the entire broadcast.

In contrast, visual search in the era before multimodal embeddings and large multimodal models (LMMs) relied on a predefined dictionary of objects and activities, which made it difficult to shoehorn a given search into the roughly 30,000 objects and activities that computer vision models typically recognized. Researchers also struggled to think in terms of visual search when so much of their disciplines' heritage centered on computerized textual analysis.

At the same time, OCRing the complete 3-billion-frame Visual Explorer archive, sampled at 1/4fps, would cost more than $4.5M, rising to more than $18M at the TV AI Explorer's current 1fps temporal resolution. That makes OCR far more expensive than the automated speech transcription we applied to the entire 2.5-million-hour uncaptioned portion of the Archive spanning 150 languages. As we continue our work on image montages and algorithmically assisted visual summarization, one driving force is developing new ways to optimize our use of state-of-the-art OCR systems so that OCRing the complete TV News Archive finally becomes tractable. Stay tuned for a series of experiments on this front!
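The cost figures above scale linearly with frame count: the post's own numbers imply roughly $0.0015 per frame (a lower bound, since the totals are "more than"), and moving from 1/4fps to 1fps quadruples the frame count from about 3 billion to about 12 billion. The minimal Python sketch below reproduces that arithmetic. It assumes billing is strictly per OCR request; the `frames_per_request` parameter and the `ocr_cost_usd` helper are hypothetical illustrations of how tiling several frames into one montage image could reduce billed requests, not a description of any actual pipeline.

```python
# Figures taken from the post: more than $4.5M to OCR ~3 billion frames
# sampled at 1/4 fps. The derived per-frame rate is therefore a lower bound.
ARCHIVE_FRAMES_QUARTER_FPS = 3_000_000_000
COST_QUARTER_FPS_USD = 4_500_000


def ocr_cost_usd(frames: int, frames_per_request: int = 1) -> float:
    """Estimate OCR cost in USD, assuming billing is strictly per request.

    frames_per_request is a HYPOTHETICAL montage factor: k frames tiled
    into one image are assumed to be billed as a single OCR request.
    """
    if frames_per_request < 1:
        raise ValueError("frames_per_request must be >= 1")
    requests = -(-frames // frames_per_request)  # ceiling division
    # Multiply before dividing so the integer math stays exact until the end.
    return requests * COST_QUARTER_FPS_USD / ARCHIVE_FRAMES_QUARTER_FPS


frames_1fps = ARCHIVE_FRAMES_QUARTER_FPS * 4  # 1 fps samples 4x as many frames
print(f"1/4 fps: ${ocr_cost_usd(ARCHIVE_FRAMES_QUARTER_FPS):,.0f}")  # $4,500,000
print(f"1 fps:   ${ocr_cost_usd(frames_1fps):,.0f}")  # $18,000,000
```

Under those same assumptions, `ocr_cost_usd(12_000_000_000, frames_per_request=16)` would come to about $1.1M, though any real savings would depend on whether OCR accuracy holds up on smaller, tiled frames.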