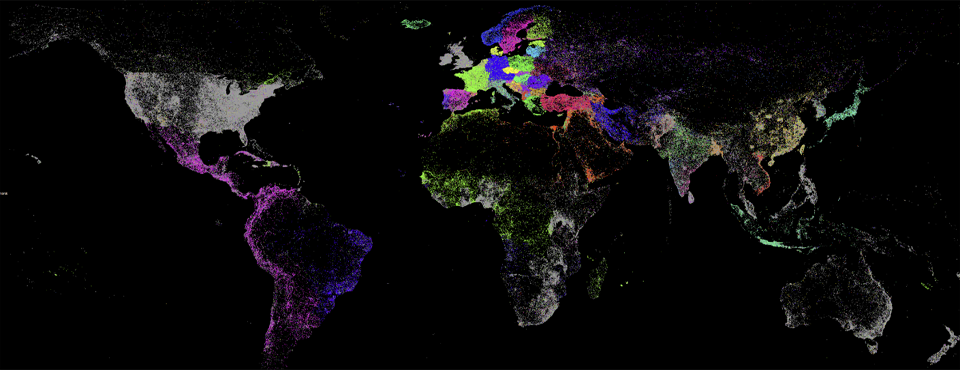

What would it look like to use massive computing power to see the world through others’ eyes, to break down language and access barriers, facilitate conversation between societies, and empower local populations with the information and insights they need to live safe and productive lives? By quantitatively codifying human society’s events, dreams and fears, can we map happiness and conflict, provide insight to vulnerable populations, and even potentially forecast global conflict in ways that allow us as a society to come together to deescalate tensions, counter extremism, and break down cultural barriers? That’s the vision of the GDELT Project.

Creating this vision starts with data. At the most fundamental level, GDELT is a realtime open data index over global human society, inventorying the world’s events, emotions, images and narratives as they happen through mass live data mining of the world’s public information streams. GDELT builds one of the world’s largest social sciences datasets, spanning news, television, images, books, academic literature and even the open web itself. It mass machine translates all of this material from 65 languages, codifies millions of themes and thousands of emotions, and applies algorithms ranging from simple keyword matches to massive statistical models to deep learning approaches. The result is a computational view of the world that makes it possible to understand what’s happening across the planet.

Cataloging local events requires using local sources in their original languages. GDELT today operates what is believed to be the world’s largest open program to catalog, monitor and translate the media of the non-Western world, ensuring that it is able to peer deeply into the most remote regions of the earth and help those voices to be heard. This is in stark contrast to the majority of all other monitoring initiatives today, which rely primarily on Western sources and very small samples of translated local content.

Taken together, the various collections that make up GDELT represent one of the largest open archives of codified human society today. The dataset’s unique scale, scope, and coverage mean that working with GDELT requires special attention to analytic methodology, given the number of data modalities it contains: spatial, temporal and network attributes, text and imagery, narratives and emotions, active and contextual dimensions, content and citation graphs, and so on. The dataset’s global focus means it captures the chaotic and conflicting nature of the real world, from false and conflicting information to shifting narratives and baselines to a constant exponential increase in monitoring volume. This requires analytic constructs that are capable not only of coping with this environment, but ideally of conveying the inherent uncertainty to end users.

There is a need not only for polished print-ready visualizations optimized for consumption by policymakers and the general public that summarize macro-level patterns, but also for interactive displays that allow hypothesis testing and live “speed of thought” exploration of massive multi-modality data. For example, could one build a dashboard that displays macro-level “bursts” of unrest using the news data, brings to bear the human rights data to highlight those pockets of unrest occurring in areas prone to human rights abuses, leverages the academic literature to identify the underlying influencing factors and top academic experts on that area based on the citation graph, and finally uses the television data to show how American domestic television is portraying the evolving situation? Visualizations that combine the spatial, temporal, and network dimensions are of especial interest, as are those that examine the data in innovative ways that help uncover new and nonobvious underlying patterns. Visualizations that could ultimately be scripted or automated so that they update on a daily or realtime basis are also of particular interest, especially global dashboards.

Below you'll find each of the major datasets available through GDELT today.

GDELT 2.0 EVENT DATABASE

- Type: Event Data

- Time Range: February 2015 – Present (Will extend back to 1979 by Fall 2016)

- Update Interval: Every 15 minutes

- Source: Worldwide news coverage in 100 languages, of which 65 are live machine translated at 100% volume

- Download / Documentation: http://blog.gdeltproject.org/gdelt-2-0-our-global-world-in-realtime/

The GDELT 2.0 Event Database is a global catalog of worldwide activities (“events”) in over 300 categories from protests and military attacks to peace appeals and diplomatic exchanges. Each event record details 58 fields capturing many different attributes of the event. The GDELT 2.0 Event Database currently runs from February 2015 to present, is updated every 15 minutes, and comprised 326 million mentions of 103 million distinct events as of February 19, 2016. Those needing the highest possible resolution local coverage should use GDELT 2.0, as it leverages GDELT Translingual to provide 100% machine translation coverage of all monitored content in 65 core languages, with a sample of an additional 35 languages hand translated. It also expands upon GDELT 1.0 by providing a separate MENTIONS table that records every mention of each event, along with the offset, context and confidence of each of those mentions.
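To illustrate how the MENTIONS table relates to events, the following is a minimal Python sketch that counts mentions per event and optionally ignores low-confidence mentions. The row layout, the sample values, the 0 to 100 confidence scale and the `count_mentions` helper are all assumptions made for illustration; consult the documentation linked above for the actual field names and formats.

```python
from collections import defaultdict

# Hypothetical, simplified rows: (event_id, source, confidence).
# The real MENTIONS table carries more fields; this layout is illustrative only.
mentions = [
    (1001, "example-a.com", 100),
    (1001, "example-b.com", 40),
    (1002, "example-a.com", 20),
    (1001, "example-c.com", 80),
]


def count_mentions(rows, min_confidence=0):
    """Count mentions per event, keeping rows at or above min_confidence."""
    counts = defaultdict(int)
    for event_id, _source, confidence in rows:
        if confidence >= min_confidence:
            counts[event_id] += 1
    return dict(counts)


all_counts = count_mentions(mentions)
confident_counts = count_mentions(mentions, min_confidence=50)
print(all_counts)        # {1001: 3, 1002: 1}
print(confident_counts)  # {1001: 2}
```

Filtering on confidence before counting is one way to trade recall for precision when deciding how widely an event was covered.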

GDELT 2.0 GLOBAL KNOWLEDGE GRAPH

- Type: Global Knowledge Graph

- Time Range: February 2015 – Present (Will extend back to 1979 by Fall 2016)

- Update Interval: Every 15 minutes

- Source: Worldwide news outlets in 100 languages, of which 65 are live machine translated at 100% volume

- Download / Documentation: http://blog.gdeltproject.org/gdelt-2-0-our-global-world-in-realtime/

As a complement to the GDELT 2.0 Event Database, the GDELT 2.0 Global Knowledge Graph extracts each person name, organization, company, disambiguated location, millions of themes and thousands of emotions from each article, resulting in an annotated metadata graph over the world's news each day. Totaling over 200 million records and growing at a rate of half a million to a million articles a day, the GKG 2.0 is perhaps the world’s largest open data graph over global human society. Similar to the GDELT 2.0 Event Database, the GKG 2.0 also leverages GDELT Translingual to provide 100% machine translation coverage of all monitored content in 65 core languages, with a sample of an additional 35 languages hand translated. The GKG can be used as a stand-alone dataset to visualize and explore global narrative and emotional underpinnings, or coupled with the Event Database to place events of interest within their surrounding context and reactions.

VISUAL GLOBAL KNOWLEDGE GRAPH

- Type: Visual Global Knowledge Graph

- Time Range: December 2015 – Present

- Update Interval: Every 15 minutes

- Source: Worldwide news imagery, with all annotations performed by Google Cloud Vision API

- Download / Documentation: http://blog.gdeltproject.org/gdelt-visual-knowledge-graph-vgkg-v1-0-available/

A growing fraction of all imagery found in global news coverage monitored by GDELT is fed every 15 minutes through the Google Cloud Vision API, where it is annotated by Google’s state-of-the-art deep learning neural network algorithms. Annotations include objects/activities, OCR (accurate enough to read handwritten Arabic on protest signs in the midst of a street demonstration), content-based geolocation, logo detection, facial sentiment and landmark detection, and Google SafeSearch annotations are applied to all images. A delimited codified version of all fields is available, while the raw JSON output of the Cloud Vision API, including a range of extended fields, is available for the most recent imagery. A boxed set of all image metadata for the 14.6 million images appearing from December 2015 to February 21, 2016 is available for easy download. UPDATE: a boxed set of 37 million images through April 2016 is now available.

GDELT 1.0

- Type: Event Data

- Time Range: January 1979 – Present

- Update Interval: Daily

- Source: Worldwide news outlets in 100 languages, selected sample of foreign language content

- Download / Documentation: https://cloudplatform.googleblog.com/2014/05/worlds-largest-event-dataset-now-publicly-available-in-google-bigquery.html

The GDELT 1.0 event dataset comprises over 3.5 billion mentions of over 364 million distinct events from almost every corner of the earth spanning January 1979 to present and updated daily. GDELT 1.0 includes only hand translated foreign language content – for those wanting access to the full-volume machine translation feed, GDELT 2.0 should be used instead. You can download the complete collection as a series of CSV files or query it through Google BigQuery. The file format documentation describes the various fields and their structure. You can also download column headers for the 1979 – March 2013 Back File and April 1, 2013 – Present Front File, as well as the Column IDs.

The GDELT Event Database uses the CAMEO event taxonomy, which is a collection of more than 300 types of events organized into a hierarchical taxonomy and recorded in the files as a numeric code. You can learn more about the schema itself in the CAMEO Code Reference. In addition, tab-delimited lookup files are available that contain the human-friendly textual labels for each of those codes to make it easier to work with the data for those who have not previously worked with CAMEO. Lookups are available for both Event Codes and the Goldstein Scale. In addition, details recording characteristics of the actors involved in each event are stored as a sequence of 3 character codes. Lookups are available for Event Country Codes, Type Codes, Known Group Codes, Ethnic Codes and Religion Codes.
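As a rough illustration of working with these codes, the Python sketch below loads a tab-delimited lookup into a dictionary, walks up a hierarchical event code by prefix, and splits an actor code into its 3 character parts. The header row, the sample rows and labels, the prefix-based hierarchy and all function names are assumptions for illustration and are not copied from the official lookup files.

```python
import csv
import io

# Illustrative tab-delimited lookup content. The real lookup files are much
# longer, and this header row and these labels are assumptions.
LOOKUP_TSV = (
    "CODE\tLABEL\n"
    "14\tProtest\n"
    "141\tDemonstrate or rally\n"
)


def load_lookup(tsv_text):
    """Parse a two-column tab-delimited lookup into {code: label}."""
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t")
    next(reader)  # skip the header row
    # Codes stay strings so any leading zeros survive.
    return {code: label for code, label in reader}


def code_ancestors(code):
    """List a code's hierarchy from its 2-digit root down to the code itself,
    assuming each level of the taxonomy extends its parent by one digit."""
    return [code[:n] for n in range(2, len(code) + 1)]


def split_actor_code(actor_code):
    """Split an actor code into its consecutive 3 character components."""
    return [actor_code[i:i + 3] for i in range(0, len(actor_code), 3)]


labels = load_lookup(LOOKUP_TSV)
print(labels["141"])               # Demonstrate or rally
print(code_ancestors("1411"))      # ['14', '141', '1411']
print(split_actor_code("USAGOV"))  # ['USA', 'GOV']
```

Keeping the codes as strings rather than integers matters here, since converting to a number would drop leading zeros and break the prefix relationship between levels.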

There are also a number of normalization files available. These comma-delimited (CSV) files are updated daily and record the total number of events in the GDELT 1.0 Event Database across all event types broken down by time and country. This is important for normalization tasks, to compensate for the exponential increase in the availability of global news material over time. Available normalization files include Daily, Daily by Country, Monthly, Monthly by Country, Yearly and Yearly by Country.
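The normalization idea can be sketched in a few lines of Python: divide the count of events of interest in each period by the total number of events recorded in that period. All numbers below are invented for illustration, and the `normalize` helper and dictionary layout are assumptions rather than the format of the actual normalization files.

```python
# Hypothetical yearly counts for one country (invented numbers).
protest_events = {"1990": 120, "2000": 480, "2010": 1900}
total_events = {"1990": 40_000, "2000": 200_000, "2010": 1_000_000}


def normalize(counts, totals, per=1000):
    """Return events of interest per `per` total events for each period.

    Periods with a missing or zero total are skipped to avoid division by zero.
    """
    return {
        period: per * count / totals[period]
        for period, count in counts.items()
        if totals.get(period)
    }


rates = normalize(protest_events, total_events)
for period, rate in rates.items():
    print(period, protest_events[period], round(rate, 2))
```

In this invented example the raw protest counts rise more than fifteenfold, yet protests per 1,000 events fall from 3.0 to 1.9, which is the kind of distortion that normalizing against total volume is meant to expose.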

AMERICAN TELEVISION GKG

- Type: Global Knowledge Graph

- Time Range: July 2009 – Present

- Update Interval: Daily with 48 hour embargo

- Source: American English language television news stations monitored by the Internet Archive’s Television News Archive

- Download / Documentation: http://blog.gdeltproject.org/announcing-the-american-television-global-knowledge-graph-tv-gkg/

In collaboration with the Internet Archive, this special collection processes the Archive’s Television News Archive, which today monitors over 100 television stations across the United States, in order to understand what Americans see when they turn on their televisions. The dataset is updated each morning with a 48 hour rolling embargo, meaning the dataset released on a given morning covers television coverage that aired two days prior. Each GKG record represents a single television show, which can range from a brief 30 minute segment to a single “show” that spans many hours, depending on how a given network organizes its programming. At this time only English-language programming is being processed. Processing is performed on the raw closed captioning stream aired by each network, the quality of which can vary from network to network. Closed captioning streams do not include case information, meaning all text is uppercase and some streams do not include punctuation or other delimiters. Captioning tends to include a high level of error, so this GKG tends to have less accuracy than the primary GKG. Some GKG fields may not be available for all shows or stations depending on whether a given algorithm determines it is able to generate high-quality output on a given show based on the level of captioning error it is seeing and the overall quality of a particular broadcast’s captioning.
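Two practical consequences of the description above are that a release date maps to an air date two days earlier, and that keyword matching against captions should ignore case. The short Python sketch below shows both under those assumptions; the function names and the sample caption text are hypothetical.

```python
from datetime import date, timedelta

# The 48 hour rolling embargo described above.
EMBARGO = timedelta(hours=48)


def air_date_for_release(release_day):
    """Return the broadcast date covered by the dataset released on release_day."""
    return release_day - EMBARGO


def caption_mentions(caption_text, keyword):
    """Case-insensitive keyword check, since captions carry no case information."""
    return keyword.upper() in caption_text.upper()


covered = air_date_for_release(date(2016, 3, 1))
print(covered)  # 2016-02-28

# Invented caption text for illustration.
sample_caption = "PROTESTERS GATHERED DOWNTOWN THIS MORNING"
print(caption_mentions(sample_caption, "protesters"))  # True
```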

AFRICA AND THE MIDDLE EAST ACADEMIC LITERATURE GKG

- Type: Global Knowledge Graph

- Time Range: 1950-2012 (some material dating back to 1906 and newer than 2012 may be present)

- Update Interval: None at this time

- Source: More than 21 billion words of academic literature covering Africa and the Middle East, including all JSTOR holdings mentioning locations in the region 1950-2007, all identifiable academic literature found online since 1996 and still accessible in 2014 in the Internet Archive’s Wayback Machine holdings of 1.6 billion PDFs, all unclassified/declassified Defense Technical Information Center (DTIC) holdings 1906-2012, all unclassified/declassified CIA holdings 1946 to 2012, all CORE fulltext research collection holdings 1940 to 2012 and CiteSeerX holdings 1947 to 2011.

- Download / Documentation: http://blog.gdeltproject.org/announcing-the-gdelt-2-0-release-of-the-africa-and-middle-east-global-knowledge-graph-ame-gkg/

The Africa and Middle East Global Knowledge Graph encodes a massive array of socio-cultural information, including ethnic and religious group mentions, and the underlying citation graph over more than 21 billion words of academic literature comprising the majority of output of the humanities and social sciences literature over Africa and the Middle East since 1945 (JSTOR+DTIC+CORE+CiteSeerX+CIA+Internet Archive). This dataset uniquely includes the full extracted list of all citations from each journal article, making it possible to explore and visualize the entire citation graph over this literature. Defense Technical Information Center (DTIC) articles additionally include publication metadata, including human-assigned subject tags provided by DTIC, in the “EXTRASXML” field. The entire collection was reprocessed into the final GDELT GKG 2.0 format in March 2016, at which time some additional content was processed beyond the materials found in the original September 2014 release.

HUMAN RIGHTS KNOWLEDGE GRAPH

- Type: Global Knowledge Graph

- Time Range: 1960-2014

- Update Interval: None at this time

- Source: More than 110,000 documents from Amnesty International, FIDH, Human Rights Watch, ICC, ICG, US Department of State and the United Nations dating back to 1960 documenting human rights abuses across the world

- Download / Documentation: http://blog.gdeltproject.org/announcing-the-new-human-rights-global-knowledge-graph-hr-gkg/

The Human Rights Global Knowledge Graph encodes more than 110,000 documents from Amnesty International, FIDH, Human Rights Watch, ICC, ICG, US Department of State, and the United Nations dating back to 1960 documenting human rights abuses across the world. The collection was processed in September 2014, so it is available in an alpha version of the GKG 2.0 format that is slightly different from the final GKG 2.0 format used today; see the documentation page above for details. This was a prototype project exploring the creation of topic-specific deep dive collections that provide high resolution contextual background to contemporary events such as human rights concerns.

HISTORICAL AMERICAN BOOKS ARCHIVE

- Type: Global Knowledge Graph

- Time Range: 1800-2015

- Update Interval: None at this time

- Source: Complete set of English-language public domain books digitized by the Internet Archive and HathiTrust from 1800 to Fall 2015 totaling 3.5 million volumes

- Download / Documentation: http://blog.gdeltproject.org/3-5-million-books-1800-2015-gdelt-processes-internet-archive-and-hathitrust-book-archives-and-available-in-google-bigquery/

World history doesn’t begin with the birth of mass computerized born-digital news distribution in the late 1970s. Understanding current events often requires looking back at how the world has evolved over the centuries. To this end, the GDELT Historical American Books Global Knowledge Graph processed more than 3.5 million volumes comprising the entirety of the Internet Archive and HathiTrust English-language public domain collections through the GDELT GKG 2.0 system.