Earlier this week we unveiled our new work on high-precision alignment of English translations of Russian and Ukrainian television news broadcasts. Aligning translations between languages with very different word orders is extremely difficult: the words that begin a sentence in the source language may end it in the target language, and rather than shifting by a single position, as they do between languages with closely aligned word order, words may be scattered almost entirely throughout the target sentence.

The most common approaches to high-resolution machine translation of spoken-word transcripts either aggregate them to the sentence level or translate them one captioning line at a time.

The problem with aggregating machine-generated transcripts to the sentence level is that machine-generated punctuation tends to merge them into long run-on sentences that can span 30 seconds or more of airtime (sometimes up to a minute). That makes the approach ill-suited to following the multi-speaker exchanges common on television news.

Alternatively, translating transcripts one captioning line at a time yields highly disfluent, stilted translations that in some cases change the meaning of the text entirely.

Instead, we need to translate the text at the paragraph level (to maximize the context available to coreference resolution and other processes that carry information across sentences) while preserving the internal timing of each block of spoken words.

In our internal GDELT Translingual infrastructure, the translation model for each language is designed to pass through word-level alignment data, yielding a lookup table for each translation that precisely maps each source word (or group of words) to its corresponding target word(s). This is what allows us to perform word-level precision mapping. With external tools like Google Translate, how can we best achieve a similar mapping within the constraints of a model architecture that was not designed to provide such high-precision alignment information?
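To illustrate what such a lookup table makes possible, here is a minimal sketch that projects source-word timestamps onto target words. It assumes a hypothetical alignment format of `(source_index, target_index)` pairs and assigns each target word the start time of the earliest source word aligned to it; this is not the actual GDELT Translingual data format, just one plausible way to use word-level alignments.

```python
from typing import Dict, List, Optional, Tuple


def project_timings(
    source_times: List[float],
    alignment: List[Tuple[int, int]],
    target_len: int,
) -> List[Optional[float]]:
    """Give each target word the start time of the earliest aligned source word.

    source_times[i] is the start time (seconds) of source word i.
    alignment is a hypothetical list of (source_index, target_index) pairs.
    Target words with no aligned source word get None.
    """
    best: Dict[int, float] = {}
    for src, tgt in alignment:
        t = source_times[src]
        # Keep the earliest time when several source words map to one target word.
        if tgt not in best or t < best[tgt]:
            best[tgt] = t
    return [best.get(j) for j in range(target_len)]


# Three source words whose order is fully permuted in the target, plus one
# unaligned target word.
print(project_timings([0.0, 0.4, 0.9], [(0, 2), (1, 0), (2, 1)], 4))
```

Taking the earliest time is only one choice; a many-to-one alignment could just as reasonably use the latest or the mean.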

In our demo earlier this week we showed how this can be achieved simply by wrapping each SRT line in a `<SPAN>` block that is transparent to Google Translate's model, while using `<P>` tags to keep each chunk beneath its maximum size. While this works for the vast majority of each transcript, a measurable percentage of each transcript is still not handled properly by this workflow because of extreme differences in the internal word ordering of the source and target languages.
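A minimal sketch of that packing step might look like the following. The `max_chars` default is a hypothetical placeholder rather than Google Translate's documented limit, and the size accounting ignores the small `<p>` wrapper overhead, so treat it as approximate.

```python
import html
from typing import List, Tuple


def build_translation_html(
    lines: List[Tuple[int, str]],
    max_chars: int = 5000,  # hypothetical per-request limit; check your API's quota
) -> List[str]:
    """Wrap each caption line in a <span id=...> and pack spans into <p> chunks.

    lines: (caption_id, text) pairs in broadcast order.
    Returns one HTML string per translation request.
    """
    chunks: List[str] = []
    current: List[str] = []
    size = 0
    for caption_id, text in lines:
        # Escape the caption text so stray '<' or '&' cannot break the markup.
        span = f'<span id="{caption_id}">{html.escape(text)}</span>'
        # Start a new <p> chunk if this span would push us past the limit.
        if current and size + len(span) + 1 > max_chars:
            chunks.append("<p>" + " ".join(current) + "</p>")
            current, size = [], 0
        current.append(span)
        size += len(span) + 1  # +1 for the joining space
    if current:
        chunks.append("<p>" + " ".join(current) + "</p>")
    return chunks


print(build_translation_html([(1, "a"), (2, "b")]))
```

Each returned chunk would then be sent as one HTML-format translation request, with the span ids carried through to the output.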

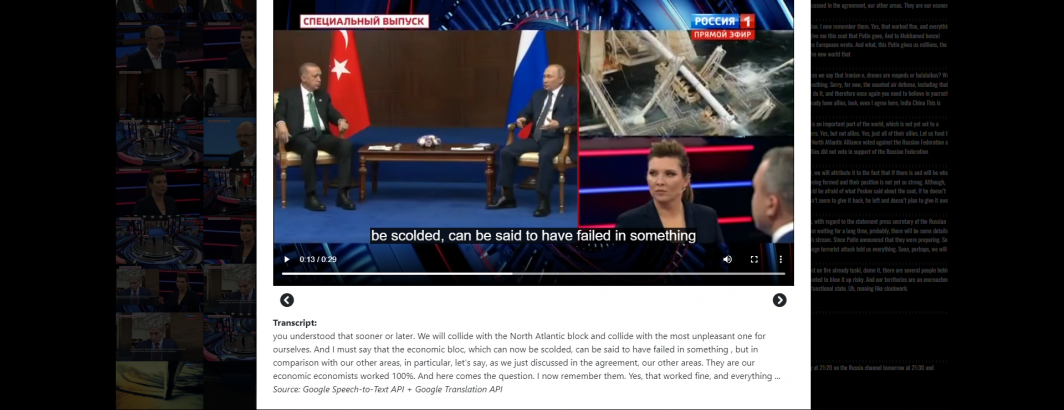

For example, take the following translation of two Russian sentences spoken over 32 seconds:

<p> <s id=120>This</s> <s id=121>year, the leaders met almost on a monthly</s> <s id=122>basis. The presidents spoke on the phone 13 times,</s> <s id=126>when</s> <s id=124>the</s> <s id=125>delegations</s> <s id=123>left the hall</s> <s id=127>Putin and Erdogan talked in private for a long time.</s> </p>

Note how the span IDs fall out of order from the fourth chunk onward, reflecting the highly dissimilar word ordering of the Russian and English versions of that sentence. Our original workflow did not handle these cases properly, but it still yielded an extremely usable transcript, with vastly greater accuracy than the per-line translation of traditional approaches.
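One way to spot these cases automatically is to scan the translated markup and flag any id that appears after a larger one. The sketch below assumes the `<s id=N>` tag form shown in the example above; the regex and function name are illustrative, not part of any published tool.

```python
import re
from typing import List

# Matches the opening tag form used in the example translation, e.g. <s id=126>.
SPAN_RE = re.compile(r"<s id=(\d+)>")


def out_of_order_ids(translated_html: str) -> List[int]:
    """Return span ids that appear after a larger id, i.e. that broke source order."""
    flagged: List[int] = []
    highest = -1
    for match in SPAN_RE.findall(translated_html):
        span_id = int(match)
        if span_id < highest:
            flagged.append(span_id)
        else:
            highest = span_id
    return flagged


example = (
    "<p> <s id=120>This</s> <s id=121>year, the leaders met almost on a monthly</s> "
    "<s id=122>basis. The presidents spoke on the phone 13 times,</s> <s id=126>when</s> "
    "<s id=124>the</s> <s id=125>delegations</s> <s id=123>left the hall</s> "
    "<s id=127>Putin and Erdogan talked in private for a long time.</s> </p>"
)
print(out_of_order_ids(example))
```

On the example above this flags ids 124, 125 and 123, all of which follow id 126.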

Our improved workflow drops the idea of grouping lines into spans and instead treats the tags merely as time markers, requiring timecodes to advance monotonically (any timecode that moves backward is dropped on reassembly). This resolves these reassembly challenges!
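A minimal sketch of monotonic reassembly, under two assumptions of ours: the translated output has already been parsed into `(timecode, text)` pairs in translated order, and the text that follows a dropped marker is folded into the segment before it rather than discarded.

```python
from typing import List, Tuple


def reassemble(pieces: List[Tuple[float, str]]) -> List[Tuple[float, str]]:
    """Merge translated text into segments whose timecodes only move forward.

    pieces: (timecode, text) pairs in the order they appear in the translation.
    A marker whose timecode is earlier than the last kept one is dropped,
    and its text is appended to the current segment (an assumption).
    """
    segments: List[Tuple[float, List[str]]] = []
    for timecode, text in pieces:
        if segments and timecode < segments[-1][0]:
            # Timecode moved backward: drop the marker but keep its text.
            segments[-1][1].append(text)
        else:
            segments.append((timecode, [text]))
    return [(t, " ".join(parts)) for t, parts in segments]


# The span ids from the example stand in for timecodes here.
pieces = [
    (120, "This"),
    (121, "year, the leaders met almost on a monthly"),
    (122, "basis. The presidents spoke on the phone 13 times,"),
    (126, "when"),
    (124, "the"),
    (125, "delegations"),
    (123, "left the hall"),
    (127, "Putin and Erdogan talked in private for a long time."),
]
for timecode, text in reassemble(pieces):
    print(timecode, text)
```

With this rule the reordered clause collapses into a single segment, "when the delegations left the hall", anchored at the first timecode that kept the sequence moving forward.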