It is remarkable that in an era in which petabytes have become commoditized, petascale analyses still strike fear into the hearts of data analysts. The modern cloud removes the complexity of storing and managing data and implicitly colocates computation with data, making working with petabytes trivial. As far back as four years ago, BigQuery could perform a full table scan of a petabyte in under 3.7 minutes, making it possible to run ad hoc brute-force analyses at unlimited scale.
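To put the BigQuery figure in perspective, here is the implied throughput as a small Python calculation. It assumes decimal units (1 PB = 10^15 bytes) and treats "under 3.7 minutes" as exactly 3.7 minutes. The true scan was faster than that, so the result is a lower bound on the rate. The function name is illustrative only.

```python
PETABYTE_BYTES = 10**15  # decimal petabyte (assumption)
TERABYTE_BYTES = 10**12  # decimal terabyte

def scan_rate_tb_per_s(bytes_scanned: float, seconds: float) -> float:
    """Average scan throughput in decimal terabytes per second."""
    return bytes_scanned / seconds / TERABYTE_BYTES

# "Under 3.7 minutes" treated as exactly 3.7 minutes, so this is a lower bound.
rate = scan_rate_tb_per_s(PETABYTE_BYTES, 3.7 * 60)
print(f"{rate:.2f} TB/s")
```

Under these assumptions, the scan averaged at least about 4.5 TB/s.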

The size of modern datasets means that even relatively simple analyses can consume hundreds of terabytes or even petabytes. Yet between Google Cloud Storage (GCS) and the high inbound bandwidth of commodity VMs, it is possible to consume petabytes from GCS or external sources with nearly linear scaling across a set of VMs.

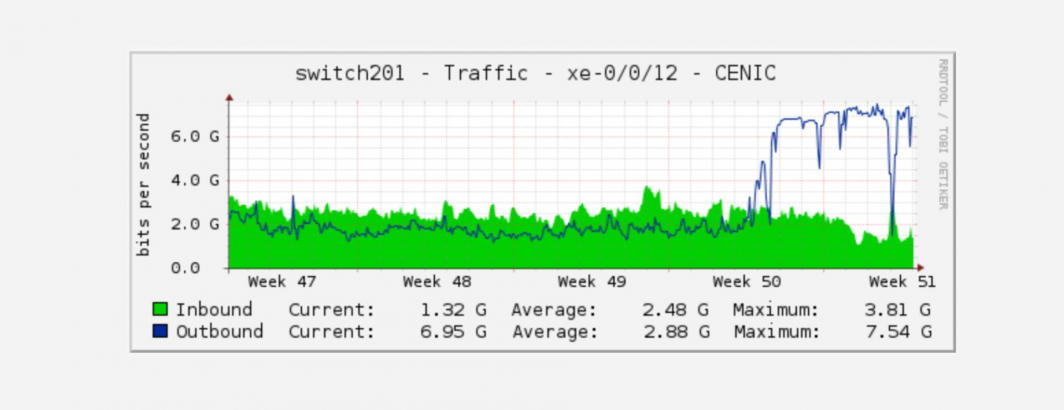

In one recent analysis, we processed more than 2.2 petabytes of video, ingesting it from a remote data source and streaming it through a small cluster of just 24 single-core VMs. The limiting factor was the external data source's 10GbE link, not the VMs. Each VM was simply a 1-core machine with 20GB of RAM and a 10GB boot disk that streamed a set of videos in, processed them in flight through "ccextractor" and streamed the results to GCS, with nothing touching local disk. In fact, the processing consisted of nothing more than a single script that requested the URL of a video file and handed it to wget, piped into ccextractor, piped into gsutil. That's literally it!
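The post does not say how long the job ran, but because the 10GbE link was the bottleneck, it sets a floor on the ingest time. The following back-of-envelope sketch assumes decimal units (1 PB = 10^15 bytes), a link running at its full nominal 10 Gbit/s with no protocol overhead, and load spread evenly across the 24 VMs. Real-world throughput would be lower, so the actual time would be longer.

```python
LINK_BITS_PER_S = 10 * 10**9  # nominal 10GbE line rate (assumption: no overhead)
DATA_BYTES = 2.2 * 10**15     # 2.2 PB in decimal bytes (assumption)
NUM_VMS = 24
SECONDS_PER_DAY = 86_400

def min_transfer_days(data_bytes: float, link_bits_per_s: float) -> float:
    """Lower bound on wall-clock time when the link is the only bottleneck."""
    return data_bytes * 8 / link_bits_per_s / SECONDS_PER_DAY

def per_vm_mbit_per_s(link_bits_per_s: float, num_vms: int) -> float:
    """Share of the link each VM must sustain if load is spread evenly."""
    return link_bits_per_s / num_vms / 10**6

days = min_transfer_days(DATA_BYTES, LINK_BITS_PER_S)
share = per_vm_mbit_per_s(LINK_BITS_PER_S, NUM_VMS)
print(f"minimum ingest time: {days:.1f} days; per-VM share: {share:.0f} Mbit/s")
```

Under these idealized assumptions, moving 2.2 PB over the link takes at least about 20.4 days, and each VM only needs to sustain roughly 417 Mbit/s of the shared link.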

Put simply, processing 2.2 petabytes of video through ccextractor required nothing more than 24 single-core VMs, each running a simple Perl script that streamed the video in via wget, used standard Unix pipes to pass it through ccextractor, and then piped the output on to GCS.
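The Perl script itself is not reproduced in this post. Below is a minimal Python sketch of the same wget-to-ccextractor-to-gsutil chain. It assumes Python 3 and that `wget`, `ccextractor` and `gsutil` are on the PATH. Each stage's stdout is connected to the next stage's stdin, so no intermediate file is written. The ccextractor flags `-stdin` and `-stdout` are an assumption and should be checked against `ccextractor --help` for your version. The function names `build_pipeline` and `run_pipeline` are hypothetical, and how each video URL is obtained is left to the caller.

```python
import subprocess
from typing import List, Optional

# ASSUMPTION: flags for reading stdin / writing stdout; verify for your version.
CCEXTRACTOR_CMD = ["ccextractor", "-stdin", "-stdout"]

def build_pipeline(video_url: str, gcs_uri: str) -> List[List[str]]:
    """Commands for: wget (stream in) | ccextractor | gsutil (stream out)."""
    return [
        ["wget", "-q", "-O", "-", video_url],  # write the download to stdout
        list(CCEXTRACTOR_CMD),                 # extract captions in flight
        ["gsutil", "cp", "-", gcs_uri],        # upload stdin straight to GCS
    ]

def run_pipeline(cmds: List[List[str]]) -> List[int]:
    """Chain commands like a shell pipe and return each stage's exit code."""
    procs: List[subprocess.Popen] = []
    upstream: Optional[object] = None
    for i, cmd in enumerate(cmds):
        is_last = i == len(cmds) - 1
        proc = subprocess.Popen(
            cmd,
            stdin=upstream,
            # Intermediate stages write into a pipe; the last inherits stdout.
            stdout=None if is_last else subprocess.PIPE,
        )
        if upstream is not None:
            # Drop the parent's copy so an early exit downstream sends
            # SIGPIPE upstream instead of leaving it blocked on a full pipe.
            upstream.close()
        upstream = proc.stdout
        procs.append(proc)
    return [p.wait() for p in procs]
```

For example, `run_pipeline(build_pipeline(url, "gs://BUCKET/OBJECT"))` (placeholder bucket and object) returns one exit code per stage. A nonzero code from any stage indicates the video should be retried. Closing the parent's copy of each intermediate pipe mirrors what a Unix shell does when it wires up `a | b | c`.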

A handful of VMs and a simple Perl script connecting a few utilities via Unix pipes is all it took to conduct a petascale analysis. That's the power of the modern cloud!