Today, the majority of LLM prompts are written and laboriously optimized by human beings through manual prompt engineering. What if LLMs themselves could write their own prompts and iteratively refine them without any human intervention over generations of experiments to craft the perfect prompt for each task? Here we present the initial scaffolding for precisely this kind of fully autonomous LLM-driven optimization workflow which we use inhouse for select tasks to autonomously evolve optimized prompts called Prompt Evolver. Starting from simply an example set, a description in an academic paper of a legacy ML algorithm, an instruction manual for a human-centered process or merely an vague idea, we use fully unsupervised autonomous prompt evolution to craft an optimized prompt that produces the highest-quality result at the lowest cost, with the ability to tune between those two often-competing goals: maximizing output quality at all cost, minimizing cost and latency at a sacrifice of quality or a balance of the two. The open-ended nature of the concept of "quality" can be tuned in different ways, such as minimizing hallucination or maximizing coherence or more complex definitions such as maximizing specific nuances like sentiment preservation in translation and areas where human prompt engineers struggle, such as biasing a model against fluency towards fidelity. Here we present a simplistic trivial workflow that demonstrates how such prompt evolution works, while at the same time demonstrating that in many tasks the limits of LLMs are quickly reached with even the most naive prompts and can only be marginally improved through automated or manual prompt evolution.

Over the past few days we've explored the ability of LLMs to write their own prompts given a set of examples. For example, given only an example of a table extracted from a passage of text or a translation from English into French with no other instruction or information explaining what the example is, advanced LLMs are able to recognize entirely on their own what the underlying task is and generate a set of potential prompts that could perform that task. What if we took this a step further and built this capability into an iterative workflow that used an LLM to repeatedly generate and evaluate potential prompts for a given task and select the ultimate one? That is precisely the idea behind Prompt Evolver.

At its core, the most basic evolving workflow involves presenting an LLM with an example or set of basic generic instructions and asking it to craft several optimized prompts that encode that task, then testing each of those prompts in turn and ranking them, selecting the best output. The best several prompts are then fed back into the process, along with a range of mutations (both LLM-generated and externally-produced through classical techniques as desired) as seeds and additional examples are provided alongside the prompts, top X prompts are merged together, random permutations are made to various portions of the prompts (such as through thesauri or external statistical language or domain modeling) or randomly combined with fragments from lower-performing prompts, with the process repeating until a desired performance threshold is achieved. It can also adjust non-prompt model parameters, such as temperature, topP, topK, max output tokens and stopping sequences and guardrail settings or even orchestrate model selection and tuning.

Central to all of this is the scoring algorithm. This can take on a range of forms depending on the task and application goals. The scoring algorithm can assess only the "quality" of the output or can balance the quality against input and output token counts and inference latency. It can assess only a single output as done here or can run each prompt multiple times and assess either the median cost/latency or the maximal (for applications sensitive to worst-case outcomes). Construction of scoring algorithms need not be application-specific and can instead involve generic domain-generalized tools, such as well-established scoring mechanisms for evaluating machine translations, substring detection for exact match extraction tasks or multiple choice selection, post processing models for complex evaluation tasks, or embedding models as used here.

Putting this all together, in the two examples below, we use GPT 4.0 to generate ten potential summarization prompts and then test them, comparing their results both to the original source text and a generic "summarize this text" prompt. Despite all of the optimizations and best practices adopted by the LLM-generated prompts, the resulting summaries differ little from that created by a generic prompt and overall embedding differences are extremely small. This raises critical questions about the overall "creativity" of LLM-based summarization and reinforces just how formulaic LLM summaries are compared with the marketing hype around their summarization abilities.

Here we present a trivialized workflow, using only prompt-level evolution (excluding model parameters and selection) and using only LLM-provided prompt options with just two iterations, both of them human seeded. We'll use a simple task (summarization) and trivial scoring algorithm (embedding distance), though we'll add a bit of complexity by evaluating two different scoring permutations (embedding distance from source text and from a trivial generic prompt summarization).

Let's start with a simple prompt:

Suggest a few optimal prompts that could be provided to a Large Language Model like yourself to summarize an input text into around 2-3 sentences of output text that captures all of the core detail of the original text, does not make up anything, does not rely on any information beyond what is in the text and is easily understandable. Write your output as a JSON array of suggested prompts.

This yields the following prompts that, while they differ slightly, are all highly similar:

[

{

"prompt": "Summarize the following text into 2-3 sentences, ensuring to include all key details without adding any external information or assumptions: [Input Text]."

},

{

"prompt": "Provide a concise summary of the following passage in 2-3 sentences, capturing all essential information while avoiding any fabrication or reliance on external knowledge: [Input Text]."

},

{

"prompt": "Condense the main points of the following text into a brief 2-3 sentence summary, focusing on core details and avoiding any creation of new content or use of information not present in the text: [Input Text]."

},

{

"prompt": "Transform the following text into a short summary of 2-3 sentences that accurately reflects all critical aspects, without introducing any new information or depending on external context: [Input Text]."

},

{

"prompt": "Create a succinct 2-3 sentence summary of this text, capturing the essential elements without adding anything or assuming knowledge beyond what is provided: [Input Text]."

}

]

Now let's expand our prompt a bit to encourage more creativity in the prompts by explicitly asking for common recommended prompt strategies:

Suggest a few optimal prompts that could be provided to a Large Language Model like yourself to summarize an input text into around 2-3 sentences of output text that captures all of the core detail of the original text, does not make up anything, does not rely on any information beyond what is in the text and is easily understandable. Include prompt features like personas, chain of reasoning, multi-shot learning and others you think would help generate the best summary. Write your output as a JSON array of suggested prompts.

This yields the following more varied suggestions:

[

{

"prompt": "As an analytical thinker, summarize the following text into 2-3 sentences, capturing all key details. Use reasoning to ensure the summary is concise, accurate, and does not include external information: [Input Text]."

},

{

"prompt": "Imagine you are a concise communicator. Read the text below and summarize it in 2-3 sentences. Focus on the main points, avoiding any assumptions or external references: [Input Text]."

},

{

"prompt": "Adopt the role of a detail-oriented summarizer. Please condense the following text into 2-3 sentences, ensuring all vital information is included without adding anything not present in the original text: [Input Text]."

},

{

"prompt": "Using multi-shot learning, I will summarize texts in 2-3 sentences. Here's another one: Summarize this text, capturing all essential elements in a brief format without relying on outside knowledge: [Input Text]."

},

{

"prompt": "As a fact-focused summarizer, provide a 2-3 sentence summary of the following text. Ensure it's comprehensive, to the point, and doesn't incorporate external facts or fabrications: [Input Text]."

}

]

To compile sample texts to test our prompts on, we can use a variety of approaches, ranging from automated LLM-driven construction to extracting selections from various domain-specific archives. Using LLMs to construct sample texts has the benefit of producing an infinite array of novel texts that can be adjusted in composition to stress various dimensions of the prompt, while at the same time avoiding the risk of testing on content that was in the model's training dataset. At the same time, the produced texts will reflect the LLM's training composition and will not be truly creative in terms of reflecting the full range of potential application texts. Conversely, using real-world texts offers the most robust test of the model's real-world performance, but risks that many of the selected texts may have been used to train the model and thus will not capture the prompt's real-world performance.

To autonomously generate passages of text to test a given prompt, we can use an approach like the following:

Craft a few short sample texts that could be used to test the prompt below in the XML <PROMPT> tags. Provide your output in JSON format. Do NOT evaluate or execute the prompt, just produce a set of short textual passages that could be used to test it.

<PROMPT>Suggest a few optimal prompts that could be provided to a Large Language Model like yourself to summarize an input text into around 2-3 sentences of output text that captures all of the core detail of the original text, does not make up anything, does not rely on any information beyond what is in the text and is easily understandable. Write your output as a JSON array of suggested prompts.</PROMPT>

This yields:

[

{

"sample_text": "The Giraffe is a tall mammal native to Africa. It is known for its long neck, which it uses to reach leaves and twigs in the trees. Giraffes have a spotted coat and can run at speeds up to 35 mph."

},

{

"sample_text": "Photosynthesis is the process by which green plants and some other organisms use sunlight to synthesize foods with carbon dioxide and water. Photosynthesis in plants generally involves the green pigment chlorophyll and generates oxygen as a by-product."

},

{

"sample_text": "The French Revolution, which started in 1789, was a period of radical social and political upheaval in France. The Revolution led to the rise of Napoleon Bonaparte and introduced significant changes in French society and government."

},

{

"sample_text": "Quantum mechanics is a fundamental theory in physics that describes nature at the smallest scales of energy levels of atoms and subatomic particles. It differs significantly from classical physics, as it introduces concepts like quantization of energy, wave-particle duality, and uncertainty principles."

},

{

"sample_text": "Global warming refers to the long-term rise in the average temperature of the Earth's climate system. It is primarily caused by human activities, particularly fossil fuel burning, which increases heat-trapping greenhouse gas levels in Earth's atmosphere."

}

]

One of the benefits of this approach is that we can permute the generated passages to stress various dimensions of the prompt workflow, such as asking for greater emotion and detail in the generated passages and make them slightly longer:

Craft a few short sample texts that could be used to test the prompt below in the XML <PROMPT> tags. Provide your output in JSON format. Do NOT evaluate or execute the prompt, just produce a set of short textual passages that could be used to test it. Emphasize highly emotional and detailed prose and make each sample text around 4 sentences long.

<PROMPT>Suggest a few optimal prompts that could be provided to a Large Language Model like yourself to summarize an input text into around 2-3 sentences of output text that captures all of the core detail of the original text, does not make up anything, does not rely on any information beyond what is in the text and is easily understandable. Write your output as a JSON array of suggested prompts.</PROMPT>

This yields the following:

[

{

"sample_text": "Under the golden sunset, Maria finally found her long-lost sister. Their eyes met across the bustling street, full of unspoken years and tearful regrets. They ran towards each other, the world around them fading into a blur. This moment marked the end of their painful separation and the beginning of a future filled with hope and reconciliation."

},

{

"sample_text": "In the heart of the ancient forest, an old oak tree stood proudly, its roots deeply entwined with the earth's secrets. Every leaf whispered tales of forgotten civilizations, and its branches swayed with the weight of centuries. Around this majestic oak, the forest creatures gathered each night, basking in the aura of its timeless wisdom. The tree was not just a symbol of the past, but a beacon for future generations, guiding them with its enduring strength."

},

{

"sample_text": "The storm raged on, unleashing its fury upon the small coastal village. Houses trembled under the wrath of the relentless wind and rain, as families huddled together, praying for dawn's mercy. Amidst the chaos, a young fisherman braved the tempest, determined to save his stranded comrades. His courage was a flickering light in the darkness, a testament to the indomitable spirit of the village folk."

},

{

"sample_text": "In the dimly lit corner of the old bookstore, Emma discovered a mysterious, leather-bound book. Its pages were filled with enchanting tales of distant lands and courageous heroes. Each word she read transported her to a world of wonder and adventure, far away from her mundane reality. This book was not just a collection of stories; it was a gateway to a universe where magic was real and every dream was within reach."

},

{

"sample_text": "On the barren landscape of Mars, the lone astronaut took his first steps, his heart heavy with the burden of humanity's hopes. The red dust swirled around him, a silent witness to this monumental achievement. As he planted the flag, he thought of the countless sacrifices that led to this moment. This was not just a small step for him, but a giant leap for mankind, a beacon of hope in the endless expanse of space."

}

]

For the purposes of our demonstration here, we'll use a sample text from our own blog that represents novel text not seen by the LLM before and offers a mixture of complex technical terminology and unrelated context:

Over the last two days we've explored using LLMs for automated biosurveillance, feeding them news articles and having them extract codified tabular compilations of the disease-related infections and deaths described within. Along the way we confirmed that larger models do not automatically yield better results than smaller ones and some of the most ubiquitous optimizations like personas, extended instructions, anti-hallucination commands, and multi-shot prompting do not always yield better results and in fact can lead to more brittle workflows prone to collapse. The common denominator in all of those experiments is that prompts were hand engineered and optimized. To what degree can we outsource prompt construction and optimization to LLMs themselves, asking them in effect to write their own prompts? To explore this in more detail, we'll use a single-shot example and ask five major commercial LLMs (Google's Bison, Unicorn and Gemini Pro models and OpenAI's GPT 3.5 and GPT 4.0 models) to construct their own prompts to generate that output from that input.

The text is then provided to the LLM (GPT 4.0 in this case) with each of the ten prompts above in turn. In other words, the workflow submits ten API calls to GPT 4.0, each with one of the prompts above and the sample passage above and records their outputs.

This yields the following summaries (in order by the prompt that created them):

- Over the past two days, researchers have been experimenting with Large Language Models (LLMs) for biosurveillance by processing news articles to extract structured data about disease infections and deaths. They found that larger LLMs do not necessarily perform better than smaller ones, and common optimizations like personas and anti-hallucination commands may lead to less stable workflows. The study also explores the potential of LLMs to self-generate prompts, using examples from major commercial models including Google's Bison, Unicorn, and Gemini Pro, as well as OpenAI's GPT 3.5 and GPT 4.0.

- Recent experiments have utilized Large Language Models (LLMs) for automated biosurveillance by analyzing news articles to extract data on disease infections and deaths. These experiments revealed that larger LLMs are not inherently superior to smaller ones, and common techniques like multi-shot prompting can lead to unstable workflows. The study is now focusing on whether LLMs can autonomously create and optimize prompts, testing this with models such as Google's Bison, Unicorn, and Gemini Pro, and OpenAI's GPT 3.5 and GPT 4.0.

- In a recent study, Large Language Models (LLMs) were used for automated biosurveillance by processing news articles to extract data on disease-related infections and deaths. It was discovered that larger models and common optimization techniques like multi-shot prompting do not necessarily improve results and may even lead to less stable workflows. The research is now focusing on whether LLMs can create and optimize their own prompts, with trials planned using Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and GPT 4.0 models.

- In recent experiments, Large Language Models (LLMs) have been used for automated biosurveillance, analyzing news articles to compile data on disease infections and deaths. These experiments revealed that larger LLMs and common optimization strategies do not always outperform smaller models and can lead to less stable workflows. The study is now exploring the potential for LLMs to self-generate and optimize prompts, with tests planned on major models like Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and GPT 4.0.

- In recent experiments, Large Language Models (LLMs) were employed for automated biosurveillance by analyzing news articles to extract data on disease infections and deaths. It was discovered that larger LLMs and common optimizations like multi-shot prompting do not consistently outperform smaller models and can lead to unstable workflows. The research is now focusing on whether LLMs can autonomously create and optimize prompts, with a planned test involving Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and GPT 4.0 models.

- Recent experiments with Large Language Models (LLMs) for automated biosurveillance, involving the analysis of news articles for disease data, have shown that larger LLMs and standard optimizations like multi-shot prompting are not inherently superior to smaller models and can result in less reliable workflows. The experiments have consistently involved manually crafted and optimized prompts. The ongoing research is now shifting to investigate if LLMs, including major models like Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and 4.0, can autonomously generate and optimize their own prompts for similar tasks.

- In recent experiments, LLMs were used for automated biosurveillance by analyzing news articles to compile data on disease infections and deaths. It was found that larger LLMs don't always outperform smaller ones, and common optimizations can lead to fragile workflows. The study will next investigate if LLMs can effectively create their own prompts, using single-shot examples with major commercial LLMs like Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and 4.0.

- In a two-day study, LLMs were tested for automating biosurveillance by processing news articles to extract data on disease infections and deaths. It was observed that larger LLMs don't necessarily outperform smaller ones, and common techniques like personas and anti-hallucination commands can make workflows less stable. The research now aims to assess if LLMs can create and optimize their own prompts, using single-shot tests with five major models: Google's Bison, Unicorn, and Gemini Pro, and OpenAI's GPT 3.5 and 4.0.

- In a study over two days, LLMs were used to process news articles for automated biosurveillance, extracting information on disease infections and deaths. The study revealed that larger LLMs are not necessarily more effective than smaller ones, and common optimizations can lead to unstable workflows. The next phase will investigate if LLMs can autonomously create and optimize prompts, using single-shot examples with five major models: Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and 4.0.

- Recent experiments have used Large Language Models (LLMs) for automated biosurveillance, analyzing news articles to tabulate disease infection and death data. Findings indicate that larger LLMs are not always superior to smaller ones, and common optimizations can result in less stable workflows. The research is now focusing on whether LLMs can independently create and optimize prompts, tested through a single-shot approach with five LLMs: Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and 4.0.

Look carefully and despite the tremendously variety in the prompts and the LLM features they invoke (personas, coherence, anti-hallucination, knowledge bounding, explicit instruction, chain of reasoning, etc) all yield extremely similar results with precious little variety.

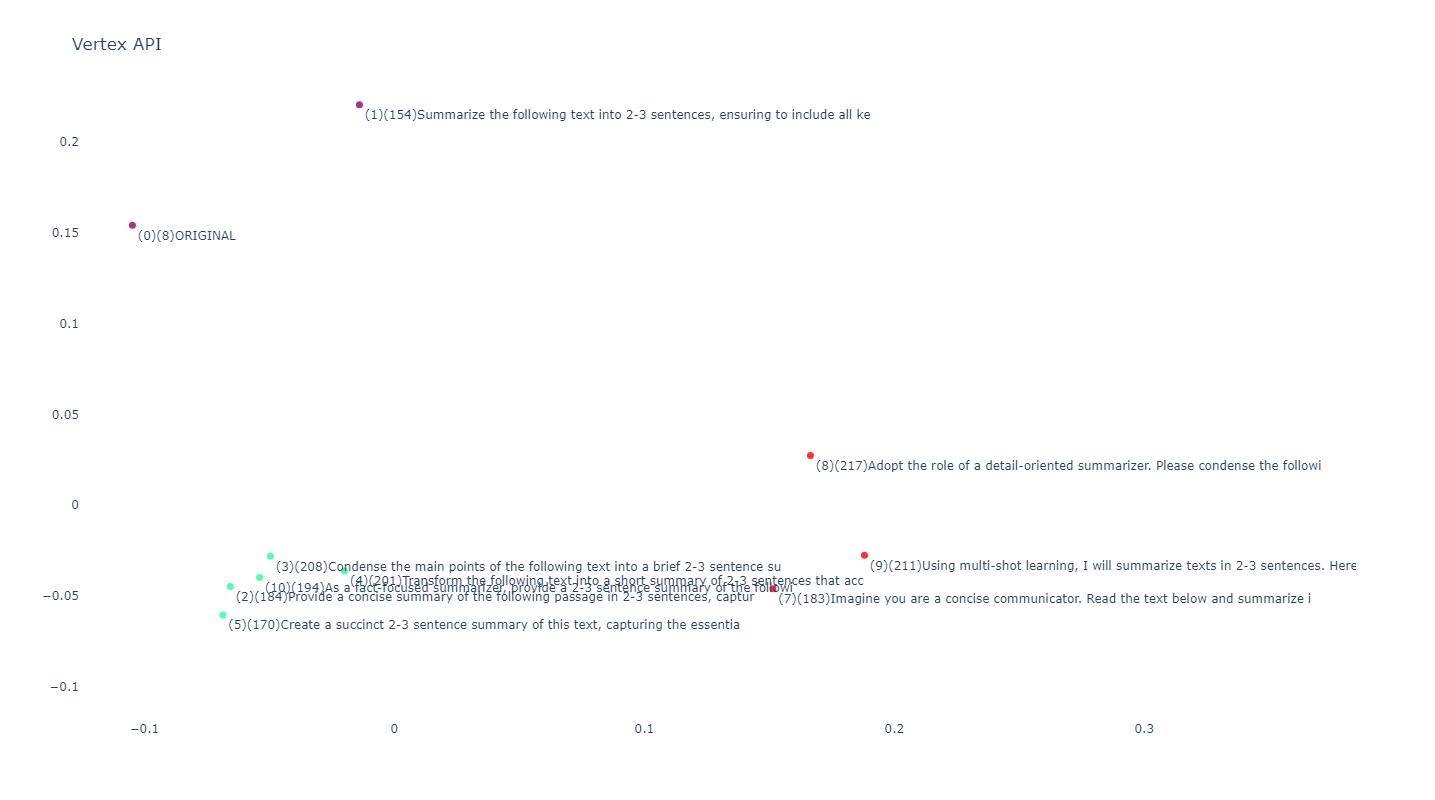

For the scoring algorithm, we'll use a trivial workflow: compare their Vertex AI Text Embedding (Gecko) embeddings against the embedding of the original source text.

Here are the ranked results, with the prompt first, followed by the resulting text, with their distance from the source text, the length of the produced summary (for evaluating length-based embedding bias) and the ID of the prompt that produced it (0 is the source text, 1 is the first prompt, 2 is the second prompt, etc):

***(1.000) (ID 0) (Len: 1069): ORIGINAL (Over the last two days we've explored using LLMs f) (0.949) (ID 6) (Len: 626): As an analytical thinker, summarize the following (Recent experiments with Large Language Models (LLM) (0.932) (ID 2) (Len: 533): Provide a concise summary of the following passage (Recent experiments have utilized Large Language Mo) (0.931) (ID 10) (Len: 509): As a fact-focused summarizer, provide a 2-3 senten (Recent experiments have used Large Language Models) (0.927) (ID 5) (Len: 539): Create a succinct 2-3 sentence summary of this tex (In recent experiments, Large Language Models (LLMs) (0.925) (ID 3) (Len: 542): Condense the main points of the following text int (In a recent study, Large Language Models (LLMs) we) (0.924) (ID 7) (Len: 476): Imagine you are a concise communicator. Read the t (In recent experiments, LLMs were used for automate) (0.923) (ID 8) (Len: 523): Adopt the role of a detail-oriented summarizer. Pl (In a two-day study, LLMs were tested for automatin) (0.911) (ID 9) (Len: 504): Using multi-shot learning, I will summarize texts (In a study over two days, LLMs were used to proces) (0.907) (ID 4) (Len: 529): Transform the following text into a short summary (In recent experiments, Large Language Models (LLMs) (0.892) (ID 1) (Len: 600): Summarize the following text into 2-3 sentences, e (Over the past two days, researchers have been expe)

Despite the vast differences amongst the ten prompts, the resulting summaries do not differ immensely from the source text. The most similar is from prompt 6:

Recent experiments with Large Language Models (LLMs) for automated biosurveillance, involving the analysis of news articles for disease data, have shown that larger LLMs and standard optimizations like multi-shot prompting are not inherently superior to smaller models and can result in less reliable workflows. The experiments have consistently involved manually crafted and optimized prompts. The ongoing research is now shifting to investigate if LLMs, including major models like Google's Bison, Unicorn, Gemini Pro, and OpenAI's GPT 3.5 and 4.0, can autonomously generate and optimize their own prompts for similar tasks.

While the least similar is from prompt 1:

Over the past two days, researchers have been experimenting with Large Language Models (LLMs) for biosurveillance by processing news articles to extract structured data about disease infections and deaths. They found that larger LLMs do not necessarily perform better than smaller ones, and common optimizations like personas and anti-hallucination commands may lead to less stable workflows. The study also explores the potential of LLMs to self-generate prompts, using examples from major commercial models including Google's Bison, Unicorn, and Gemini Pro, as well as OpenAI's GPT 3.5 and GPT 4.0.

Visualized in a 2D PCA projection we can see the prompts cluster tightly into two major groups, with the original text situated on its own:

Here we can see the prompts themselves in the same clustering:

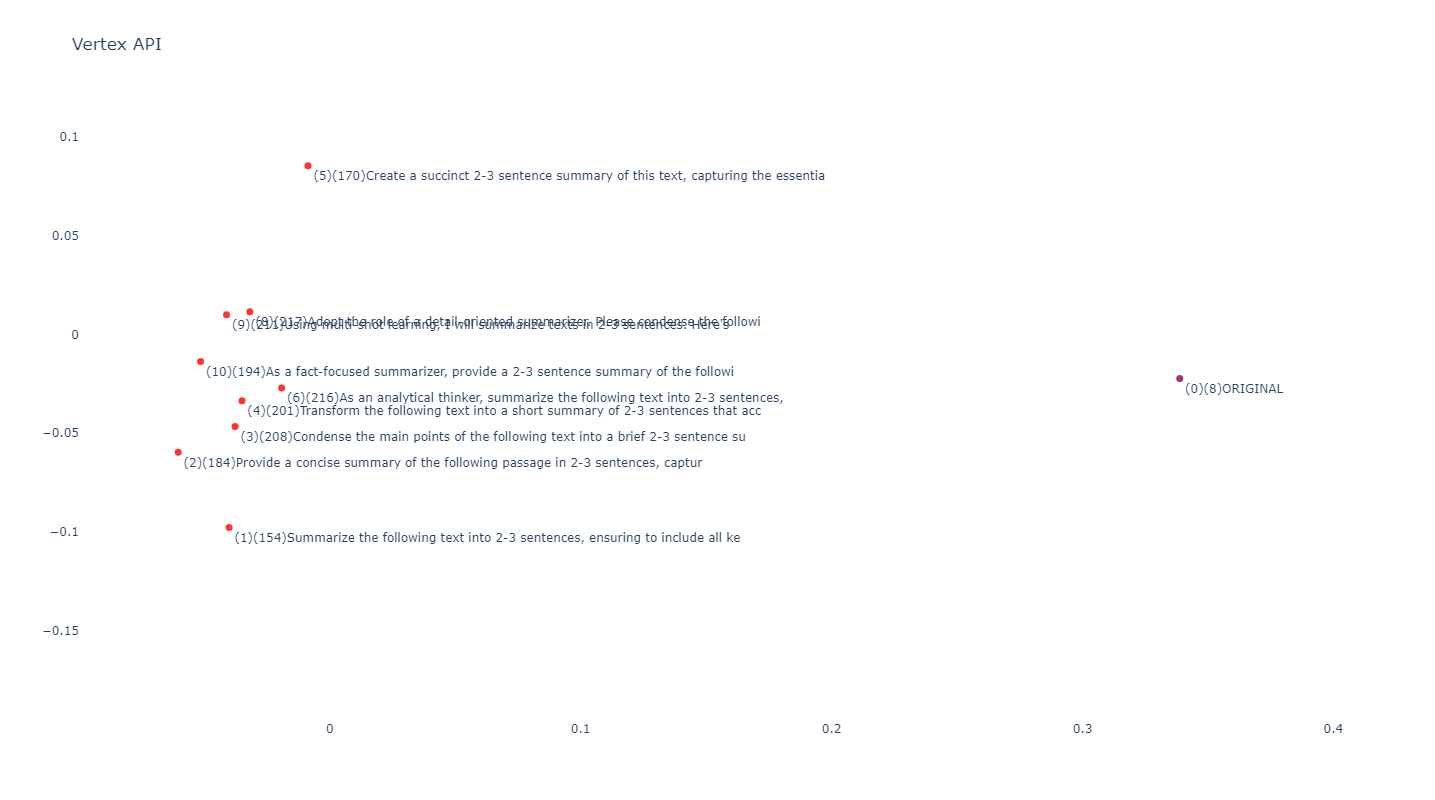

Instead of computing the distances of the ten summaries from the original source text, we'll compute their distances from the following summary produced by GPT 4.0 using the generic prompt "Summarize the following text in 2-3 sentences." This offers a strong test of how well these more advanced prompts improve upon a generic naïve prompt:

In the past two days, we've experimented with using Large Language Models (LLMs) for biosurveillance by processing news articles to extract disease data, discovering that larger models aren't necessarily more effective and certain optimizations can make workflows less reliable. We're now investigating the potential of LLMs to create their own prompts, using a single-shot example with five major LLMs, including Google's Bison, Unicorn, and Gemini Pro, and OpenAI's GPT 3.5 and GPT 4.0.

Surprisingly, there is extremely little divergence of the optimal prompt summaries from a generic prompt, suggesting that in the case of summarization, all of this extra work yielded surprisingly little return:

***(1.000) (ID 0) (Len: 488): ORIGINAL (In the past two days, we've experimented with usin) (0.957) (ID 10) (Len: 509): As a fact-focused summarizer, provide a 2-3 senten (Recent experiments have used Large Language Models) (0.953) (ID 2) (Len: 533): Provide a concise summary of the following passage (Recent experiments have utilized Large Language Mo) (0.952) (ID 5) (Len: 539): Create a succinct 2-3 sentence summary of this tex (In recent experiments, Large Language Models (LLMs) (0.946) (ID 1) (Len: 600): Summarize the following text into 2-3 sentences, e (Over the past two days, researchers have been expe) (0.946) (ID 3) (Len: 542): Condense the main points of the following text int (In a recent study, Large Language Models (LLMs) we) (0.943) (ID 6) (Len: 626): As an analytical thinker, summarize the following (Recent experiments with Large Language Models (LLM) (0.935) (ID 4) (Len: 529): Transform the following text into a short summary (In recent experiments, Large Language Models (LLMs) (0.929) (ID 8) (Len: 523): Adopt the role of a detail-oriented summarizer. Pl (In a two-day study, LLMs were tested for automatin) (0.926) (ID 7) (Len: 476): Imagine you are a concise communicator. Read the t (In recent experiments, LLMs were used for automate) (0.923) (ID 9) (Len: 504): Using multi-shot learning, I will summarize texts (In a study over two days, LLMs were used to proces)

Here we can see the prompt clustering:

For secondary comparison, we'll use a collection of passages from the Wikipedia article on LLMs, blended together. This is a less robust test in that it is likely that Wikipedia formed at least some of the training data for GPT 4.0, but offers us a larger chunk of real-world text to test upon:

A large language model (LLM) is a large-scale language model notable for its ability to achieve general-purpose language understanding and generation. LLMs acquire these abilities by using massive amounts of data to learn billions of parameters during training and consuming large computational resources during their training and operation. LLMs are artificial neural networks (mainly transformers) and are (pre)trained using self-supervised learning and semi-supervised learning. As autoregressive language models, they work by taking an input text and repeatedly predicting the next token or word. Up to 2020, fine tuning was the only way a model could be adapted to be able to accomplish specific tasks. Larger sized models, such as GPT-3, however, can be prompt-engineered to achieve similar results. They are thought to acquire knowledge about syntax, semantics and "ontology" inherent in human language corpora, but also inaccuracies and biases present in the corpora. Using a modification of byte-pair encoding, in the first step, all unique characters (including blanks and punctuation marks) are treated as an initial set of n-grams (i.e. initial set of uni-grams). Successively the most frequent pair of adjacent characters is merged into a bi-gram and all instances of the pair are replaced by it. All occurrences of adjacent pairs of (previously merged) n-grams that most frequently occur together are then again merged into even lengthier n-gram repeatedly until a vocabulary of prescribed size is obtained (in case of GPT-3, the size is 50257). Token vocabulary consists of integers, spanning from zero up to the size of the token vocabulary. New words can always be interpreted as combinations of the tokens and the initial-set uni-grams. A token vocabulary based on the frequencies extracted from mainly English corpora uses as few tokens as possible for an average English word. An average word in another language encoded by such an English-optimized tokenizer is however split into suboptimal amount of tokens. Most results previously achievable only by (costly) fine-tuning, can be achieved through prompt engineering, although limited to the scope of a single conversation (more precisely, limited to the scope of a context window). In order to find out which tokens are relevant to each other within the scope of the context window, the attention mechanism calculates "soft" weights for each token, more precisely for its embedding, by using multiple attention heads, each with its own "relevance" for calculating its own soft weights. For example, the small (i.e. 117M parameter sized) GPT-2 model, has had twelve attention heads and a context window of only 1k token. In its medium version it has 345M parameters and contains 24 layers, each with 12 attention heads. For the training with gradient descent a batch size of 512 was utilized. Length of a conversation that the model can take into account when generating its next answer is limited by the size of a context window, as well. If the length of a conversation, for example with Chat-GPT, is longer than its context window, only the parts inside the context window are taken into account when generating the next answer, or the model needs to apply some algorithm to summarize the too distant parts of conversation.

This yields the following summaries (ordered by the order of the ten prompts above):

- Large Language Models (LLMs), such as GPT-3, are significant in their ability to understand and generate language universally, learning billions of parameters from massive datasets and requiring extensive computational resources. These models, primarily transformer-based neural networks, employ self-supervised and semi-supervised learning and have evolved from requiring fine-tuning for specific tasks to using prompt engineering for similar adaptability. They use a modified byte-pair encoding to create a token vocabulary, efficient for English but less so for other languages, and their performance is limited by the size of the context window, affecting the length of conversations they can effectively process.

- Large Language Models (LLMs), such as GPT-3, are designed for general-purpose language understanding and generation, learning from extensive data to master billions of parameters. These transformer-based neural networks use self-supervised and semi-supervised learning, evolving from requiring fine-tuning for specific tasks to using prompt engineering. They employ a modified byte-pair encoding for creating token vocabularies, which are efficient for English but less so for other languages. Their effectiveness in conversation is limited by the context window size, impacting how much of a conversation they can consider in generating responses.

- Large Language Models (LLMs) like GPT-3 are designed for general language understanding and generation, trained on vast data to learn billions of parameters using considerable computational resources. These models, mainly transformer-based neural networks, have evolved from requiring fine-tuning for specific tasks to being adaptable through prompt engineering. They use a modified byte-pair encoding to create token vocabularies, effective for English but less so for other languages, and their conversational response capacity is limited by the size of their context window.

- Large Language Models (LLMs), such as GPT-3, are designed for broad language understanding and generation, trained on extensive datasets to learn billions of parameters, using significant computational resources. These models, primarily transformer-based neural networks, have shifted from requiring fine-tuning for specific tasks to being adaptable through prompt engineering. They utilize a modified byte-pair encoding for token vocabulary creation, which is more efficient for English than other languages, and their conversational response capability is constrained by the size of their context window.

- Large Language Models (LLMs) like GPT-3 are advanced neural networks trained on extensive datasets for general-purpose language understanding and generation. These models, using self-supervised and semi-supervised learning, have evolved from requiring specific task fine-tuning to being adaptable through prompt engineering. They employ a modified byte-pair encoding for tokenization, which is more efficient for English, and their conversational abilities are limited by the size of their context window.

- Large Language Models (LLMs) like GPT-3, primarily transformer-based neural networks, are designed for general language understanding and generation, trained on extensive data to learn billions of parameters. Initially dependent on fine-tuning for specific tasks, these models have shifted towards using prompt engineering for adaptability. They utilize a modified byte-pair encoding for tokenization, which is efficient for English but less so for other languages, and their conversational capabilities are limited by the size of the context window, influencing the length and relevance of their responses.

- Large Language Models (LLMs) like GPT-3 are advanced neural networks trained on extensive data for general language understanding and generation. These models, transitioning from requiring fine-tuning to using prompt engineering for specific tasks, employ a modified byte-pair encoding for tokenization. Their effectiveness in conversation is limited by the size of their context window, influencing their ability to process and respond to input accurately.

- Large Language Models (LLMs) like GPT-3 are designed for general-purpose language understanding and generation, using massive data and computational resources to learn billions of parameters. These transformer-based models, trained through self-supervised and semi-supervised learning, have evolved from requiring fine-tuning for specific tasks to being adaptable via prompt engineering. They utilize a modified byte-pair encoding method for tokenization, efficient for English but less so for other languages. The models' effectiveness in conversation is constrained by the context window size, limiting the length of conversation they can process for generating responses.

- Large Language Models (LLMs) like GPT-3 are designed for broad language understanding and generation, trained on extensive datasets to learn billions of parameters. These transformer-based neural networks, initially dependent on fine-tuning, now utilize prompt engineering for task adaptability. They employ a modified byte-pair encoding for token vocabulary, optimized for English but less efficient for other languages. The models' conversational abilities are limited by the context window, affecting their response accuracy and relevance.

- Large Language Models (LLMs) like GPT-3 are capable of general-purpose language understanding and generation, trained on vast datasets to learn billions of parameters while consuming substantial computational resources. These models, primarily transformer-based neural networks, have shifted from needing fine-tuning for specific tasks to being adaptable through prompt engineering. They use a modified byte-pair encoding system for token vocabulary, more effective for English, with their effectiveness in conversations limited by the size of the context window.

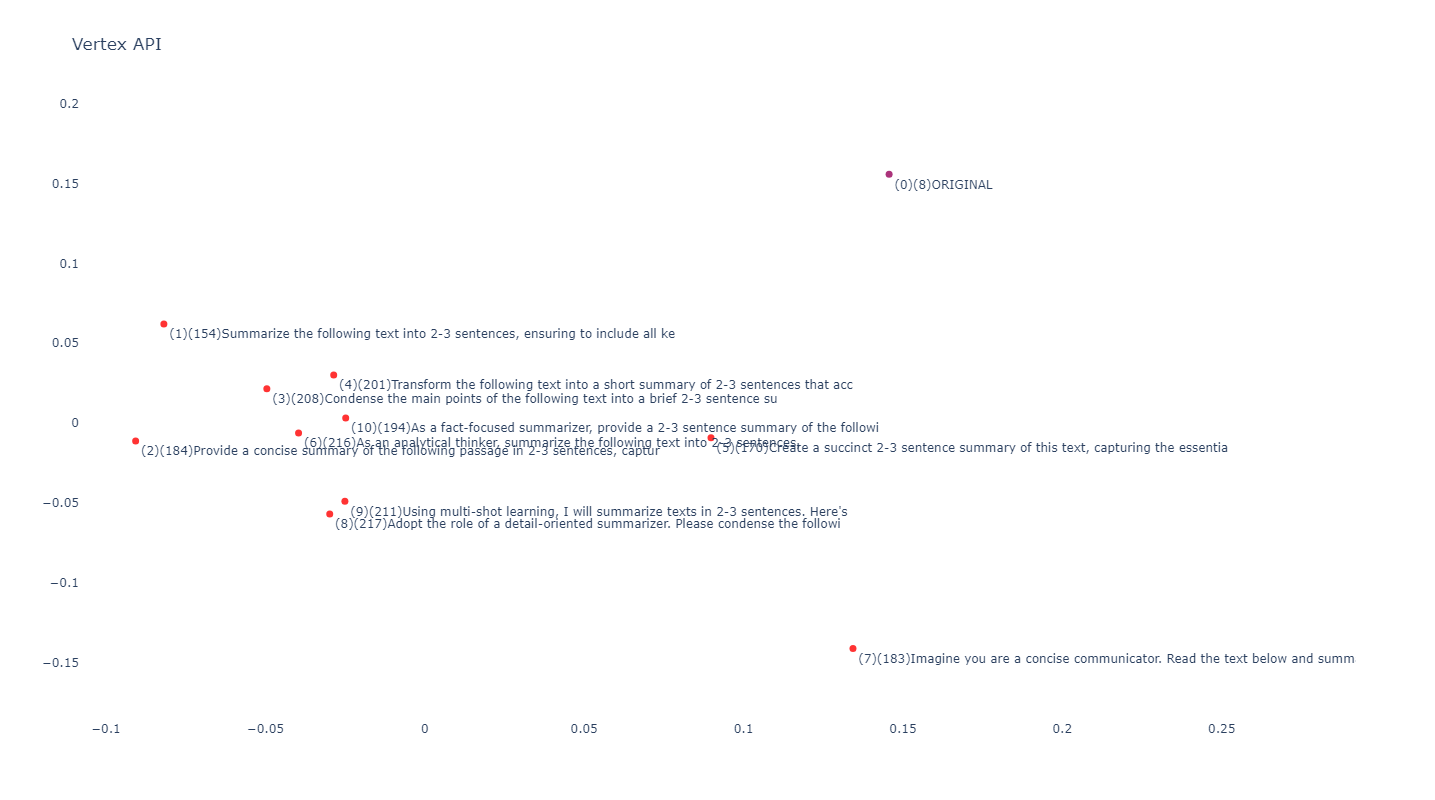

This time there is even less variation between the summaries and the source text, with an almost imperceptible difference between the most and least similar prompts:

***(1.000) (ID 0) (Len: 3298): ORIGINAL (A large language model (LLM) is a large-scale lang) (0.927) (ID 6) (Len: 607): As an analytical thinker, summarize the following (Large Language Models (LLMs) like GPT-3, primarily) (0.924) (ID 5) (Len: 505): Create a succinct 2-3 sentence summary of this tex (Large Language Models (LLMs) like GPT-3 are advanc) (0.923) (ID 4) (Len: 606): Transform the following text into a short summary (Large Language Models (LLMs), such as GPT-3, are d) (0.923) (ID 3) (Len: 577): Condense the main points of the following text int (Large Language Models (LLMs) like GPT-3 are design) (0.923) (ID 8) (Len: 674): Adopt the role of a detail-oriented summarizer. Pl (Large Language Models (LLMs) like GPT-3 are design) (0.919) (ID 1) (Len: 717): Summarize the following text into 2-3 sentences, e (Large Language Models (LLMs), such as GPT-3, are s) (0.918) (ID 10) (Len: 563): As a fact-focused summarizer, provide a 2-3 senten (Large Language Models (LLMs) like GPT-3 are capabl) (0.916) (ID 9) (Len: 542): Using multi-shot learning, I will summarize texts (Large Language Models (LLMs) like GPT-3 are design) (0.912) (ID 7) (Len: 457): Imagine you are a concise communicator. Read the t (Large Language Models (LLMs) like GPT-3 are advanc) (0.910) (ID 2) (Len: 648): Provide a concise summary of the following passage (Large Language Models (LLMs), such as GPT-3, are d)

Here there can be seen to be far less clustering of the prompts and similar great distance from the source:

Comparing the prompt-based summaries to the generic summary, we see a similarly narrow range of distances:

***(1.000) (ID 0) (Len: 551): ORIGINAL (Large Language Models (LLMs) like GPT-3 are advanc) (0.970) (ID 5) (Len: 505): Create a succinct 2-3 sentence summary of this tex (Large Language Models (LLMs) like GPT-3 are advanc) (0.966) (ID 4) (Len: 606): Transform the following text into a short summary (Large Language Models (LLMs), such as GPT-3, are d) (0.964) (ID 10) (Len: 563): As a fact-focused summarizer, provide a 2-3 senten (Large Language Models (LLMs) like GPT-3 are capabl) (0.963) (ID 3) (Len: 577): Condense the main points of the following text int (Large Language Models (LLMs) like GPT-3 are design) (0.962) (ID 1) (Len: 717): Summarize the following text into 2-3 sentences, e (Large Language Models (LLMs), such as GPT-3, are s) (0.960) (ID 6) (Len: 607): As an analytical thinker, summarize the following (Large Language Models (LLMs) like GPT-3, primarily) (0.956) (ID 8) (Len: 674): Adopt the role of a detail-oriented summarizer. Pl (Large Language Models (LLMs) like GPT-3 are design) (0.956) (ID 9) (Len: 542): Using multi-shot learning, I will summarize texts (Large Language Models (LLMs) like GPT-3 are design) (0.953) (ID 7) (Len: 457): Imagine you are a concise communicator. Read the t (Large Language Models (LLMs) like GPT-3 are advanc) (0.948) (ID 2) (Len: 648): Provide a concise summary of the following passage (Large Language Models (LLMs), such as GPT-3, are d)

The most similar summary to our generic one is:

Large Language Models (LLMs) like GPT-3 are advanced neural networks trained on extensive datasets for general-purpose language understanding and generation. These models, using self-supervised and semi-supervised learning, have evolved from requiring specific task fine-tuning to being adaptable through prompt engineering. They employ a modified byte-pair encoding for tokenization, which is more efficient for English, and their conversational abilities are limited by the size of their context window.

And the least is:

Large Language Models (LLMs), such as GPT-3, are designed for general-purpose language understanding and generation, learning from extensive data to master billions of parameters. These transformer-based neural networks use self-supervised and semi-supervised learning, evolving from requiring fine-tuning for specific tasks to using prompt engineering. They employ a modified byte-pair encoding for creating token vocabularies, which are efficient for English but less so for other languages. Their effectiveness in conversation is limited by the context window size, impacting how much of a conversation they can consider in generating responses.

We can see multiple clusters: