Earlier this week we touched on some of the incredible and unparalleled new classes of research questions that are now explorable through the scale and scope of GDELT's planetary-scale realtime multimodal knowledge graphs. Standing in the way of many of these questions are the technical limitations of exploring graphs of this magnitude. Some of the questions we'd love to see explored through GDELT's massive graphs:

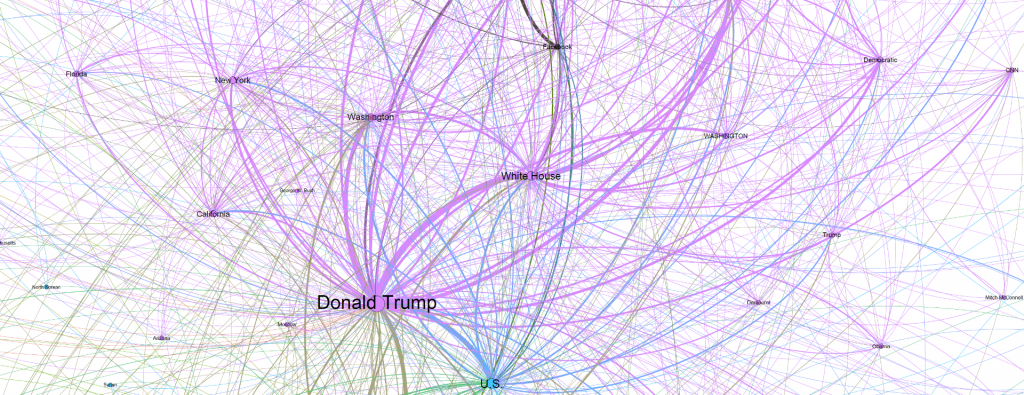

- Visualization of massive graphs at scale. Visualizing the largest graphs typically entails either zooming to just one tiny slice (and missing the macro-level trends that are often the most important) or extreme sampling that can decimate critical structure, leading to incorrect assessments of graph connectivity and structure. How can layout and visualization algorithms be adapted to robustly and interactively visualize terascale, petascale and even exascale graphs?

- Clustering graphs to extract structure. Last year we showed how t-SNE, UMAP and other dimensionality reduction algorithms can be used with the TensorFlow Embedding Projector to lay out large embedding graphs so that their underlying cluster structure becomes visible. Each algorithm represents a specific set of tradeoffs and limitations. Which algorithms work best on GDELT-scale graphs, and which produce the most useful clusterings for different kinds of applications when applied to the news?

- Similarity scanning. Approaches like Approximate Nearest Neighbor (ANN) offer scalable similarity scanning across large graphs, but, like clustering, represent a series of tradeoffs and still require substantial computational resources. What are new approaches to "more like this" similarity scanning over extremely large graphs – ideally with realtime tuning?

- Conflict scanning. Most hand-curated expert knowledge graphs encode a single "right" answer. GDELT instead encodes the realtime stream of global news reporting, which means it captures the chaotic, conflicting cacophony of the world's realtime journalism, including the very different narratives and contextualizations of global events seen across the world's rich diversity of societies. How might realtime scalable algorithms scan such graphs for conflicting connections that can be used for misinformation and disinformation research and to better understand how the world's communities are contextualizing events?

- Anomaly detection / breaking alerts. What kinds of algorithms can scalably assess planetary-scale knowledge graphs in realtime to detect the first glimmers of anomalies that may signal tomorrow's most important stories?

- Question answering, autonomous reasoning and automated decision making. What kinds of algorithms can traverse these complex and conflicting graphs to answer realtime questions from human or machine queries? Perhaps most importantly of all, what kinds of algorithms can reason over such graphs to make completely autonomous decisions based on global events, such as alerting human analysts to emerging anomalies of specific concern?
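
As a reference point for the similarity scanning question above, here is a minimal sketch of the exact, brute-force "more like this" baseline that ANN approaches approximate. It compares the query against every node, so its cost grows linearly with graph size, which is the tradeoff ANN indexes are designed to avoid. The `toy_embeddings` dictionary, its node names and the `more_like_this` helper are hypothetical and purely illustrative; they do not reflect any GDELT dataset or file format.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def more_like_this(query_id, embeddings, k=3):
    """Exact top-k neighbors of query_id by scanning every other node."""
    query = embeddings[query_id]
    scored = (
        (cosine_similarity(query, vec), node_id)
        for node_id, vec in embeddings.items()
        if node_id != query_id
    )
    # nlargest keeps only k candidates in memory while scanning all nodes.
    return [(node_id, score) for score, node_id in heapq.nlargest(k, scored)]


# Hypothetical toy data standing in for document embeddings.
toy_embeddings = {
    "article_a": [1.0, 0.0, 0.0],
    "article_b": [0.9, 0.1, 0.0],
    "article_c": [0.0, 1.0, 0.0],
    "article_d": [0.0, 0.9, 0.1],
}

if __name__ == "__main__":
    for node_id, score in more_like_this("article_a", toy_embeddings, k=2):
        print(node_id, round(score, 4))
```

An exact scan like this is mainly useful as ground truth for measuring how much recall an approximate index gives up in exchange for speed.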

We'd love to assist with "grand challenge" competitions for conferences and other open venues, so please contact us. We'd love to hear from you!