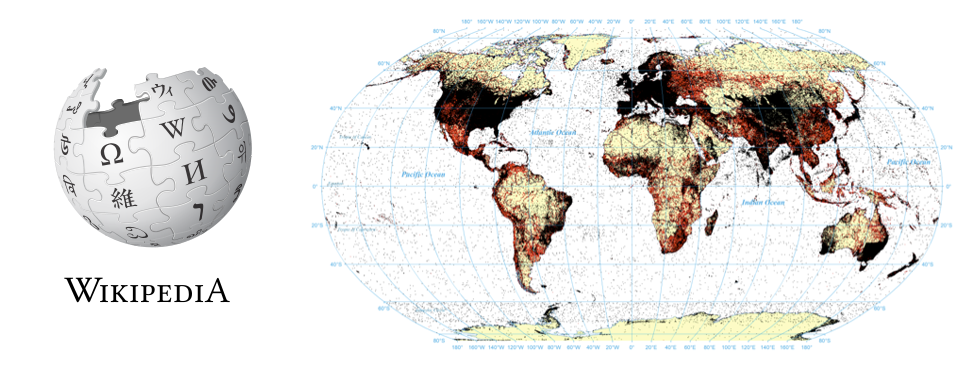

The rise of "born geographic" information and the increasing creation and mediation of information in a spatial context has given rise to a demand for extracting and indexing the spatial information in large textual archives. Spatial indexing of archives has traditionally been a manual process, with human editors reading and assigning country-level metadata indicating the major spatial focus of a document. The demand for subnational saturation indexing of all geographic mentions in a document, coupled with the need to scale to archives totaling hundreds of billions of pages or those accessioning hundreds of millions of new items a day requires automated approaches. Fulltext geocoding refers to the process of using software algorithms to parse through a document, identify textual mentions of locations, and using databases of places and their approximate locations known as gazetteers, to convert those mentions into mappable geographic coordinates. The basic workflow of a fulltext geocoding system is presented, together with an overview of the GNS and GNIS gazetteers that lie at the heart of nearly every global geocoding system. Finally, a case study comparing manually-specified geographic indexing terms versus fulltext geocoding on the English-language edition of Wikipedia demonstrates the significant advantages of automated approaches, including finding that previous studies of Wikipedia's spatial focus using its human-provided spatial metadata have erroneously identified Europe as its focal point because of bias in the underlying metdata.