Yesterday we introduced a Colab notebook template for visualizing embedding models and explored the impact of capitalization, word spacing and knowledge cutoffs on how embedding models semantically encode their inputs. Using the same template, let's explore how Covid-19 references are encoded by each model: the English-only USEv4, the larger English-only USEv5-Large, USEv3-Multilingual and the larger USEv3-Multilingual-Large (both supporting 16 languages: Arabic, Chinese-simplified, Chinese-traditional, English, French, German, Italian, Japanese, Korean, Dutch, Polish, Portuguese, Spanish, Thai, Turkish, Russian), the 100-language LaBSEv2 model optimized for translation-pair scoring and the Vertex AI Embeddings for Text API.

COVID-19 offers a unique comparison point for embedding models. The word "Covid" was coined for the disease caused by the new coronavirus, so it will not exist in older-generation models like the Universal Sentence Encoder family that were trained before the pandemic. More modern models like Vertex, by contrast, will likely have extreme overrepresentation of the word in their training data due to its saturation of the global information space. The use of all-caps, mixed-caps and lowercase forms, along with the "-19" suffix, varied heavily across information sources and over the course of the pandemic, offering additional confounding factors.

Here, the impact of knowledge cutoffs is even more stark than in yesterday's Monkeypox/mpox example. Vertex's post-pandemic cutoff allows it to strongly cluster all forms of COVID together across variants and capitalization differences, alongside coronavirus, while the other models strongly stratify them. This demonstrates the pitfalls of model aging: even the Gecko model used by Vertex will fail to capture new terminology that emerges after its knowledge cutoff (as yesterday's mpox demo illustrated), requiring periodic model updates that, in turn, will require mass recomputation of the underlying embedding database. Unlike traditional keyword-based inverted indexes, which are append-only, embedding databases will have to be regularly regenerated to capture the relentless evolution of language, posing unique technical scaling challenges for very large document archives.
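To make the recomputation burden concrete, here is a minimal sketch of one way an archive could track which stored vectors predate a model update. Everything in it is an assumption for illustration: the `StoredEmbedding` record, the `model_version` labels and the `stale_doc_ids` helper are hypothetical and not part of any system described here.

```python
from dataclasses import dataclass


@dataclass
class StoredEmbedding:
    doc_id: str
    model_version: str  # hypothetical label for the model that produced the vector
    vector: list


def stale_doc_ids(records, current_model_version):
    """Return the IDs of documents whose vectors came from an older model."""
    return [r.doc_id for r in records if r.model_version != current_model_version]


# Toy records with made-up vectors and version labels.
records = [
    StoredEmbedding("doc-1", "model-2023", [0.1, 0.2]),
    StoredEmbedding("doc-2", "model-2024", [0.3, 0.4]),
    StoredEmbedding("doc-3", "model-2023", [0.5, 0.6]),
]

to_recompute = stale_doc_ids(records, current_model_version="model-2024")
print(to_recompute)
```

Vectors produced by different model versions are not generally comparable, so in a sketch like this every stale document would need to be re-embedded before it could be searched alongside the new ones.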

We'll use this set of test sentences, which compares common written forms of COVID with the underlying virus type (coronavirus), another respiratory disease (pneumonia) and Ebola:

    sentences = [
        "COVID cases continue to climb",
        "COVID-19 cases continue to climb",
        "Covid cases continue to climb",
        "Covid-19 cases continue to climb",
        "covid cases continue to climb",
        "covid-19 cases continue to climb",
        "Coronavirus cases continue to climb",
        "coronavirus cases continue to climb",
        "Pneumonia cases continue to climb",
        "pneumonia cases continue to climb",
        "Ebola cases continue to climb",
        "ebola cases continue to climb",
    ]
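Each matrix below holds the pairwise similarity between the embeddings of these twelve sentences, in the order listed. As a rough, dependency-free sketch of that computation, the snippet below assumes the scores are cosine similarities (for unit-length embeddings this is the same as the inner product). The function name and the toy 3-dimensional vectors are illustrative stand-ins, not taken from the notebook template or from any real model.

```python
import math


def cosine_similarity_matrix(vectors):
    """Return the pairwise cosine similarity of a list of equal-length vectors."""
    norms = [math.sqrt(sum(x * x for x in v)) for v in vectors]
    return [
        [
            sum(a * b for a, b in zip(u, v)) / (nu * nv)
            for v, nv in zip(vectors, norms)
        ]
        for u, nu in zip(vectors, norms)
    ]


# Toy stand-ins for model embeddings (hypothetical values, NOT real model output).
toy_embeddings = [
    [1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, 0.0, 2.0],
]

similarity = cosine_similarity_matrix(toy_embeddings)
for row in similarity:
    print(" ".join(f"{score:.4f}" for score in row))
```

With real embeddings, row i and column j of the result would correspond to sentences i and j of the list above, which is how the matrices in the following sections should be read.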

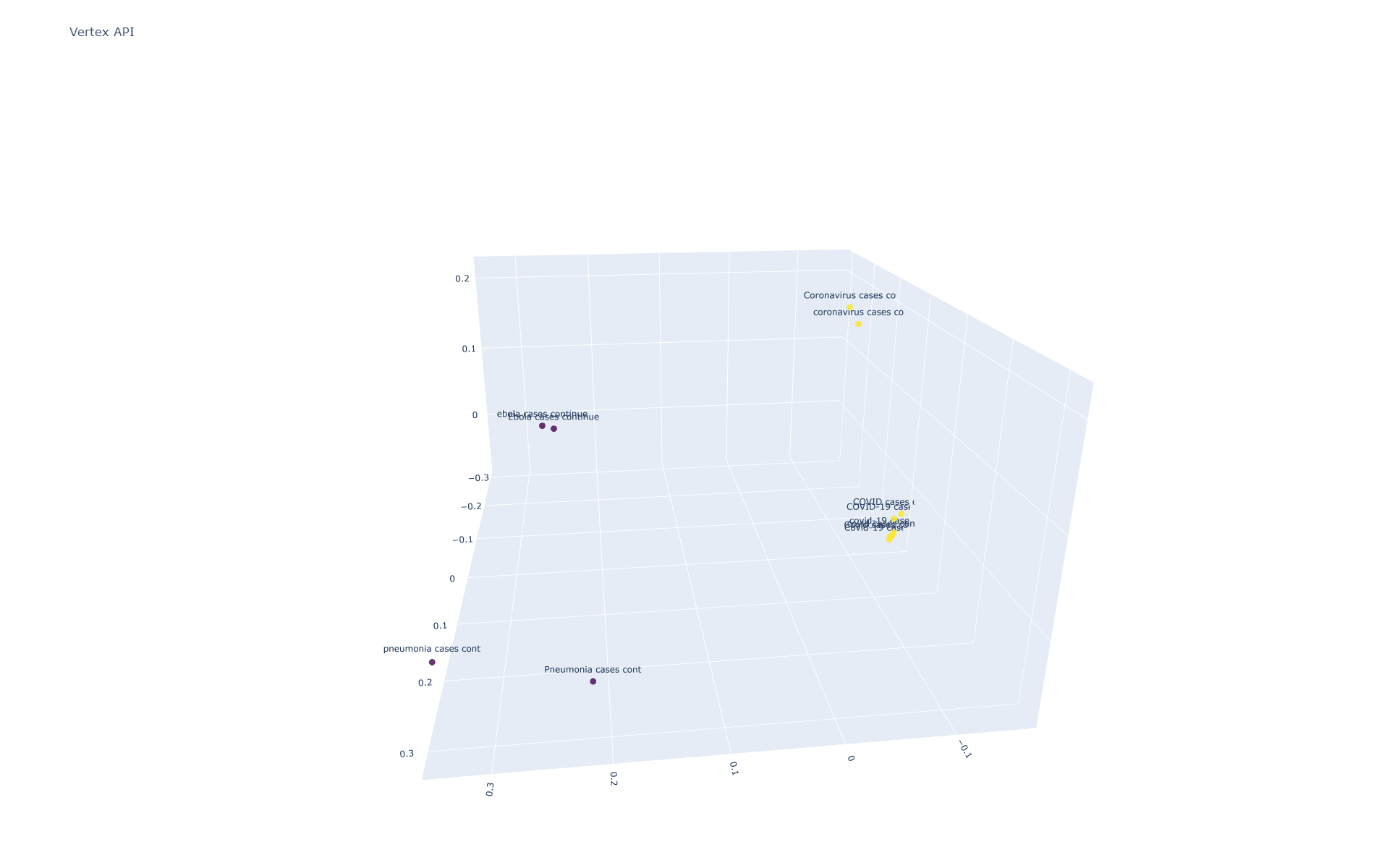

Vertex AI

As expected given its knowledge cutoff, Vertex tightly clusters all forms of Covid (though it does stratify based on capitalization and the presence of the "-19" suffix) and also groups them tightly with coronavirus. Strangely, both capitalized and lowercase Ebola are scored as more similar to COVID than lowercase pneumonia is, and only slightly less similar than capitalized pneumonia. At first glance this seems confusing, since pneumonia is semantically closer to COVID as a fellow respiratory disease. Instead, this is most likely due to COVID and Ebola's contextualization in Vertex's training data as outbreaks that caused global concern and were both described in the media as having worldwide society-scale disruptive potential (realized in the case of COVID).

    [[0.99999975 0.98092319 0.98553394 0.96871645 0.98126794 0.96790445 0.95037059 0.95508276 0.86890146 0.80671904 0.85036317 0.8406134 ]
     [0.98092319 0.99999965 0.96937647 0.98971007 0.96438763 0.98657499 0.94933547 0.95454953 0.8657603 0.80882442 0.8539151 0.84766074]
     [0.98553394 0.96937647 0.99999975 0.97196406 0.99101923 0.96816276 0.94452257 0.94821153 0.87313622 0.8028441 0.85684009 0.84560692]
     [0.96871645 0.98971007 0.97196406 0.99999977 0.96541719 0.99417176 0.94090222 0.94620429 0.86064692 0.80047543 0.85558001 0.85028666]
     [0.98126794 0.96438763 0.99101923 0.96541719 0.99999973 0.97416273 0.9409889 0.95178346 0.86806369 0.81444663 0.8473267 0.84449627]
     [0.96790445 0.98657499 0.96816276 0.99417176 0.97416273 0.99999977 0.94240246 0.95356359 0.86039372 0.81244455 0.84836602 0.84949048]
     [0.95037059 0.94933547 0.94452257 0.94090222 0.9409889 0.94240246 0.99999982 0.98273057 0.86860737 0.82393192 0.85692826 0.85037582]
     [0.95508276 0.95454953 0.94821153 0.94620429 0.95178346 0.95356359 0.98273057 0.99999958 0.86170361 0.8184852 0.85772613 0.85768796]
     [0.86890146 0.8657603 0.87313622 0.86064692 0.86806369 0.86039372 0.86860737 0.86170361 0.9999997 0.92962021 0.81186309 0.80940488]
     [0.80671904 0.80882442 0.8028441 0.80047543 0.81444663 0.81244455 0.82393192 0.8184852 0.92962021 0.99999967 0.78549213 0.79133546]
     [0.85036317 0.8539151 0.85684009 0.85558001 0.8473267 0.84836602 0.85692826 0.85772613 0.81186309 0.78549213 0.99999966 0.98128964]
     [0.8406134 0.84766074 0.84560692 0.85028666 0.84449627 0.84949048 0.85037582 0.85768796 0.80940488 0.79133546 0.98128964 0.99999961]]
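The ordering described above can be read directly off the first row of this matrix. The sketch below copies that row (the similarity of "COVID cases continue to climb" to every test sentence) and sorts the other eleven sentences by score. The short labels and the `rank_neighbors` helper are illustrative names introduced here, not part of the notebook template.

```python
# Short labels for the twelve test sentences, in the order they were embedded.
labels = ["COVID", "COVID-19", "Covid", "Covid-19", "covid", "covid-19",
          "Coronavirus", "coronavirus", "Pneumonia", "pneumonia", "Ebola", "ebola"]

# First row of the Vertex AI matrix above, copied verbatim.
covid_row = [0.99999975, 0.98092319, 0.98553394, 0.96871645, 0.98126794, 0.96790445,
             0.95037059, 0.95508276, 0.86890146, 0.80671904, 0.85036317, 0.8406134]


def rank_neighbors(row, labels, query_index):
    """Sort every sentence except the query itself by descending similarity."""
    pairs = [
        (label, score)
        for i, (label, score) in enumerate(zip(labels, row))
        if i != query_index
    ]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


ranking = rank_neighbors(covid_row, labels, query_index=0)
for label, score in ranking:
    print(f"{label:12s} {score:.4f}")
```

The last four entries come out as Pneumonia, Ebola, ebola and pneumonia, in that order: both Ebola forms sit between the two pneumonia forms.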

One major concern, however, is that the capitalized and lowercase versions of pneumonia are scored so dissimilarly from each other (0.930, compared with 0.981 for Ebola/ebola and 0.983 for Coronavirus/coronavirus), which is starkly visible in the 2D projection below. This means that a semantic search engine processing a search for "covid" will easily return matches for "COVID-19", but a search for "pneumonia" will be scored as less similar to news articles that happen to mention "Pneumonia" at the start of a sentence, where it is capitalized:
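A quick way to spot this kind of capitalization sensitivity is to pull out the similarity between the capitalized and lowercase form of each term and flag any pair that falls below a cutoff. The values below are copied from the Vertex AI matrix above. The 0.95 threshold and the `case_sensitive_terms` helper are arbitrary choices made for this sketch, not recommendations.

```python
# Similarity between the capitalized and lowercase form of each term,
# copied from the Vertex AI matrix above.
case_pair_similarity = {
    "COVID / covid": 0.98126794,
    "COVID-19 / covid-19": 0.98657499,
    "Coronavirus / coronavirus": 0.98273057,
    "Pneumonia / pneumonia": 0.92962021,
    "Ebola / ebola": 0.98128964,
}


def case_sensitive_terms(pairs, threshold=0.95):
    """Return the pairs whose two case variants score below the threshold."""
    return sorted(name for name, score in pairs.items() if score < threshold)


flagged = case_sensitive_terms(case_pair_similarity)
print(flagged)
```

With this cutoff only the pneumonia pair is flagged. Every other pair scores above 0.98.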

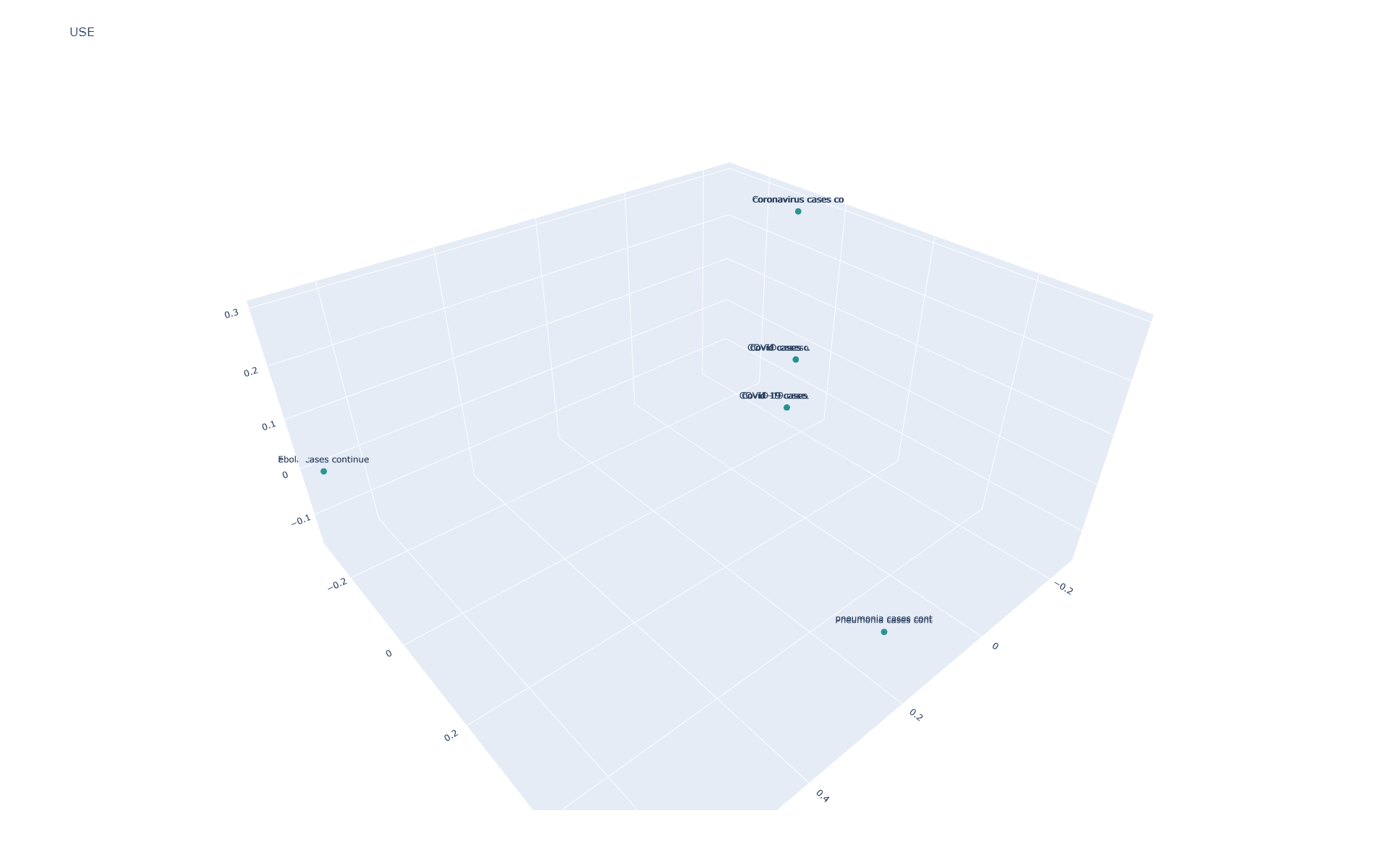

Universal Sentence Encoder

As expected given its knowledge cutoff, USE is unable to group COVID, COVID-19 and coronavirus mentions together, though its case-insensitivity allows it to look across capitalization variants:

    [[0.99999964 0.9278996 0.99999964 0.9278996 0.99999964 0.9278996 0.90179324 0.90179324 0.66501963 0.66501963 0.53290194 0.53290194]
     [0.9278996 0.9999999 0.9278996 0.9999999 0.9278996 0.9999999 0.89491713 0.89491713 0.6680422 0.6680422 0.5427735 0.5427735 ]
     [0.99999964 0.9278996 0.99999964 0.9278996 0.99999964 0.9278996 0.90179324 0.90179324 0.66501963 0.66501963 0.53290194 0.53290194]
     [0.9278996 0.9999999 0.9278996 0.9999999 0.9278996 0.9999999 0.89491713 0.89491713 0.6680422 0.6680422 0.5427735 0.5427735 ]
     [0.99999964 0.9278996 0.99999964 0.9278996 0.99999964 0.9278996 0.90179324 0.90179324 0.66501963 0.66501963 0.53290194 0.53290194]
     [0.9278996 0.9999999 0.9278996 0.9999999 0.9278996 0.9999999 0.89491713 0.89491713 0.6680422 0.6680422 0.5427735 0.5427735 ]
     [0.90179324 0.89491713 0.90179324 0.89491713 0.90179324 0.89491713 1.0000002 1.0000002 0.65955544 0.65955544 0.5343034 0.5343034 ]
     [0.90179324 0.89491713 0.90179324 0.89491713 0.90179324 0.89491713 1.0000002 1.0000002 0.65955544 0.65955544 0.5343034 0.5343034 ]
     [0.66501963 0.6680422 0.66501963 0.6680422 0.66501963 0.6680422 0.65955544 0.65955544 0.9999998 0.9999998 0.5448904 0.5448904 ]
     [0.66501963 0.6680422 0.66501963 0.6680422 0.66501963 0.6680422 0.65955544 0.65955544 0.9999998 0.9999998 0.5448904 0.5448904 ]
     [0.53290194 0.5427735 0.53290194 0.5427735 0.53290194 0.5427735 0.5343034 0.5343034 0.5448904 0.5448904 0.9999999 0.9999999 ]
     [0.53290194 0.5427735 0.53290194 0.5427735 0.53290194 0.5427735 0.5343034 0.5343034 0.5448904 0.5448904 0.9999999 0.9999999 ]]

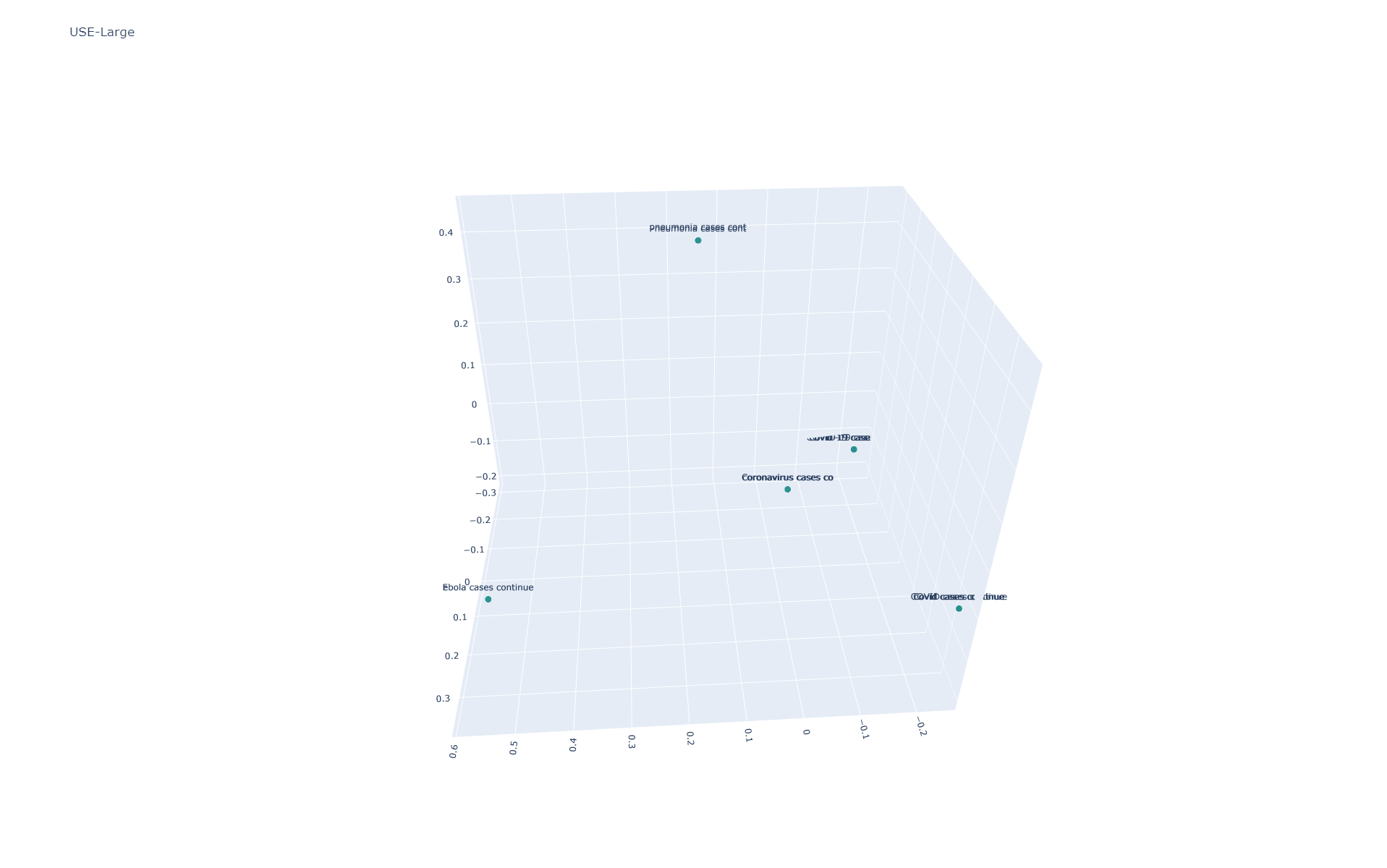

Universal Sentence Encoder Large

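The USE Large model's results follow the same pattern as the smaller variant's: it remains case-insensitive, though it separates COVID, COVID-19 and coronavirus from each other more sharply (around 0.76 to 0.79, versus around 0.89 to 0.93 for the smaller model):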

    [[0.9999996 0.7600823 0.9999996 0.7600823 0.9999996 0.7600823 0.7741569 0.7741569 0.7017479 0.7017479 0.59687877 0.59687877]
     [0.7600823 0.9999998 0.7600823 0.9999998 0.7600823 0.9999998 0.7891427 0.7891427 0.72855777 0.72855777 0.5941544 0.5941545 ]
     [0.9999996 0.7600823 0.9999996 0.7600823 0.9999996 0.7600823 0.7741569 0.7741569 0.7017479 0.7017479 0.59687877 0.59687877]
     [0.7600823 0.9999998 0.7600823 0.9999998 0.7600823 0.9999998 0.7891427 0.7891427 0.72855777 0.72855777 0.5941544 0.5941545 ]
     [0.9999996 0.7600823 0.9999996 0.7600823 0.9999996 0.7600823 0.7741569 0.7741569 0.7017479 0.7017479 0.59687877 0.59687877]
     [0.7600823 0.9999998 0.7600823 0.9999998 0.7600823 0.9999998 0.7891427 0.7891427 0.72855777 0.72855777 0.5941544 0.5941545 ]
     [0.7741569 0.7891427 0.7741569 0.7891427 0.7741569 0.7891427 0.9999999 0.9999999 0.7452704 0.7452704 0.6705071 0.67050713]
     [0.7741569 0.7891427 0.7741569 0.7891427 0.7741569 0.7891427 0.9999999 0.9999999 0.7452704 0.7452704 0.6705071 0.67050713]
     [0.7017479 0.72855777 0.7017479 0.72855777 0.7017479 0.72855777 0.7452704 0.7452704 1.0000001 1.0000001 0.671585 0.6715851 ]
     [0.7017479 0.72855777 0.7017479 0.72855777 0.7017479 0.72855777 0.7452704 0.7452704 1.0000001 1.0000001 0.671585 0.6715851 ]
     [0.59687877 0.5941544 0.59687877 0.5941544 0.59687877 0.5941544 0.6705071 0.6705071 0.671585 0.671585 1.0000004 1.0000002 ]
     [0.59687877 0.5941545 0.59687877 0.5941545 0.59687877 0.5941545 0.67050713 0.67050713 0.6715851 0.6715851 1.0000002 1.0000002 ]]

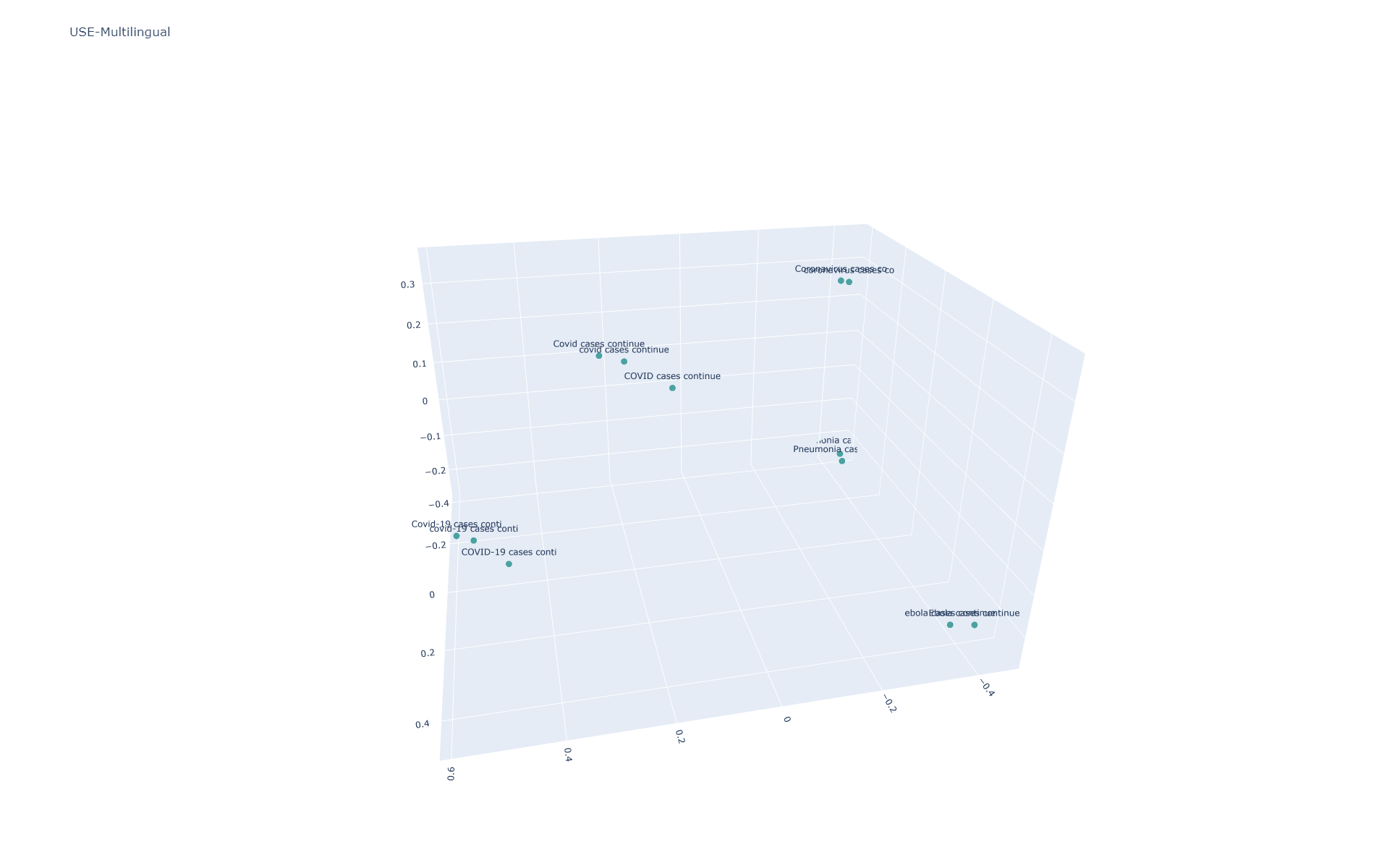

Universal Sentence Encoder Multilingual

USE Multilingual's case sensitivity, combined with its knowledge cutoff, results in extremely poor performance:

    [[0.9999998 0.73056793 0.82046366 0.6055933 0.80287075 0.6082659 0.668017 0.6415869 0.54542375 0.50826716 0.5890119 0.60984695]
     [0.73056793 0.9999999 0.6268901 0.8741808 0.5893573 0.858492 0.4921174 0.46956462 0.3805078 0.34740177 0.4260134 0.44710025]
     [0.82046366 0.6268901 1.0000001 0.75318176 0.94163066 0.73321235 0.6416339 0.6065457 0.5197705 0.47947818 0.51809335 0.54619884]
     [0.6055933 0.8741808 0.75318176 1. 0.69101477 0.95894706 0.46517643 0.43296748 0.35021883 0.31109983 0.36635023 0.39445165]
     [0.80287075 0.5893573 0.94163066 0.69101477 0.9999999 0.75546056 0.6265229 0.6324425 0.51785266 0.5280088 0.5268305 0.5971628 ]
     [0.6082659 0.858492 0.73321235 0.95894706 0.75546056 1.0000002 0.4606353 0.4620733 0.36445796 0.3638317 0.38791728 0.4484858 ]
     [0.668017 0.4921174 0.6416339 0.46517643 0.6265229 0.4606353 0.9999994 0.9640982 0.64368516 0.60883206 0.6523031 0.63190055]
     [0.6415869 0.46956462 0.6065457 0.43296748 0.6324425 0.4620733 0.9640982 1. 0.6308917 0.637239 0.63525116 0.6415191 ]
     [0.54542375 0.3805078 0.5197705 0.35021883 0.51785266 0.36445796 0.64368516 0.6308917 1.0000004 0.90962255 0.6196134 0.59948146]
     [0.50826716 0.34740177 0.47947818 0.31109983 0.5280088 0.3638317 0.60883206 0.637239 0.90962255 0.9999998 0.57103026 0.58156776]
     [0.5890119 0.4260134 0.51809335 0.36635023 0.5268305 0.38791728 0.6523031 0.63525116 0.6196134 0.57103026 1.0000005 0.96649176]
     [0.60984695 0.44710025 0.54619884 0.39445165 0.5971628 0.4484858 0.63190055 0.6415191 0.59948146 0.58156776 0.96649176 1.0000001 ]]

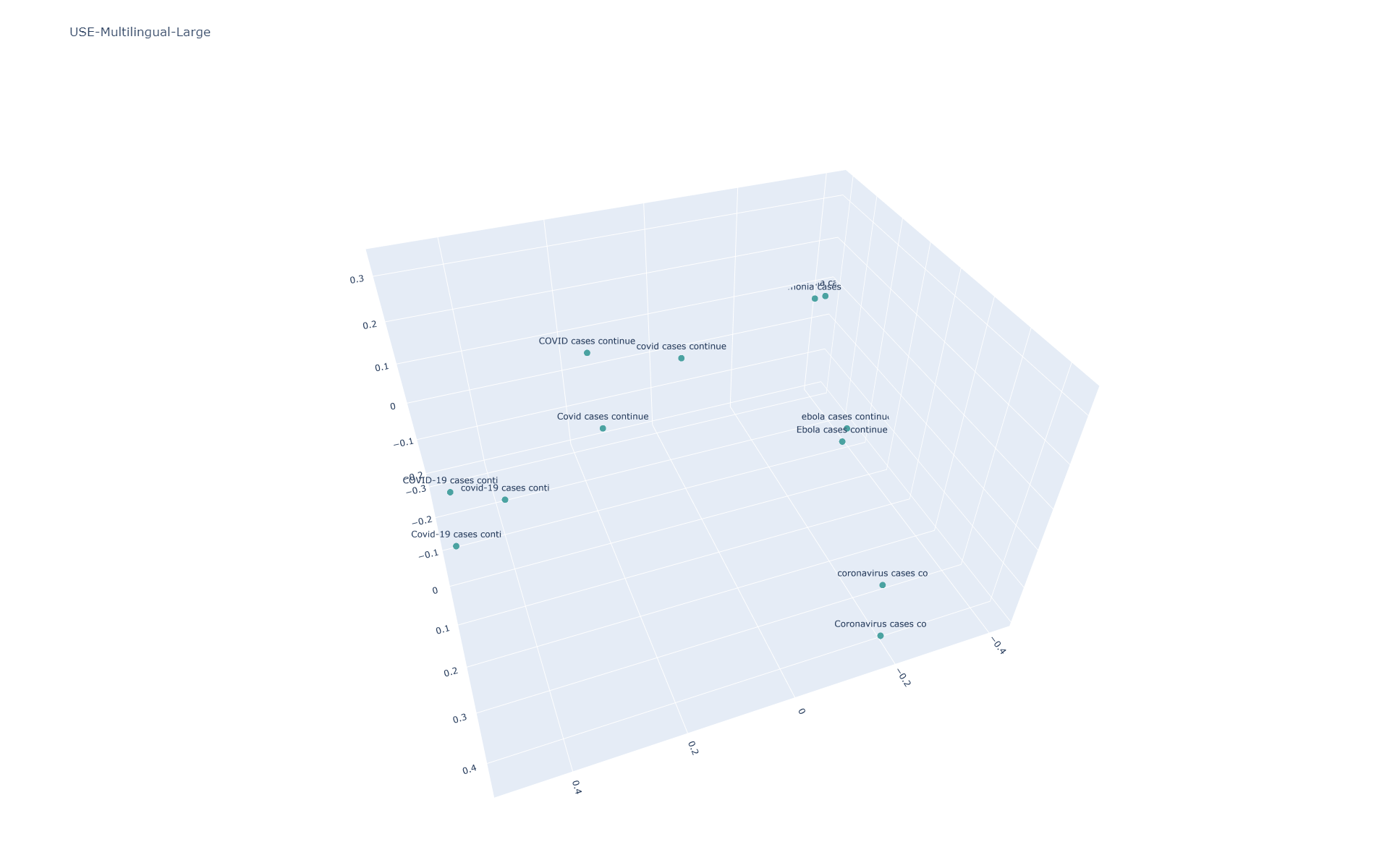

Universal Sentence Encoder Multilingual Large

The results here are even worse:

    [[1. 0.8348667 0.8374959 0.7086766 0.8026265 0.7045014 0.6842052 0.68715966 0.60934913 0.5811648 0.655679 0.6449177 ]
     [0.8348667 0.99999964 0.72338426 0.8993598 0.6898842 0.90216756 0.5745934 0.57719135 0.5282691 0.4956299 0.56905913 0.55609095]
     [0.8374959 0.72338426 1. 0.82897115 0.8769098 0.75775015 0.73727506 0.7163767 0.6166638 0.5692521 0.6765578 0.6671535 ]
     [0.7086766 0.8993598 0.82897115 1. 0.7130029 0.93250185 0.6068863 0.5882567 0.51539445 0.4719713 0.56548214 0.55246603]
     [0.8026265 0.6898842 0.8769098 0.7130029 0.99999976 0.81653655 0.7225721 0.7765403 0.7047334 0.7404459 0.73145664 0.774832 ]
     [0.7045014 0.90216756 0.75775015 0.93250185 0.81653655 1.0000001 0.60984886 0.64467645 0.5882945 0.60369205 0.61852163 0.6418808 ]
     [0.6842052 0.5745934 0.73727506 0.6068863 0.7225721 0.60984886 0.9999999 0.9718406 0.6656413 0.64798033 0.76659954 0.7611302 ]
     [0.68715966 0.57719135 0.7163767 0.5882567 0.7765403 0.64467645 0.9718406 0.9999999 0.70993173 0.7293755 0.7766607 0.79613554]
     [0.60934913 0.5282691 0.6166638 0.51539445 0.7047334 0.5882945 0.6656413 0.70993173 0.99999976 0.9491775 0.8054427 0.81116366]
     [0.5811648 0.4956299 0.5692521 0.4719713 0.7404459 0.60369205 0.64798033 0.7293755 0.9491775 0.9999999 0.77830076 0.81872463]
     [0.655679 0.56905913 0.6765578 0.56548214 0.73145664 0.61852163 0.76659954 0.7766607 0.8054427 0.77830076 0.9999999 0.98497176]
     [0.6449177 0.55609095 0.6671535 0.55246603 0.774832 0.6418808 0.7611302 0.79613554 0.81116366 0.81872463 0.98497176 0.9999999 ]]

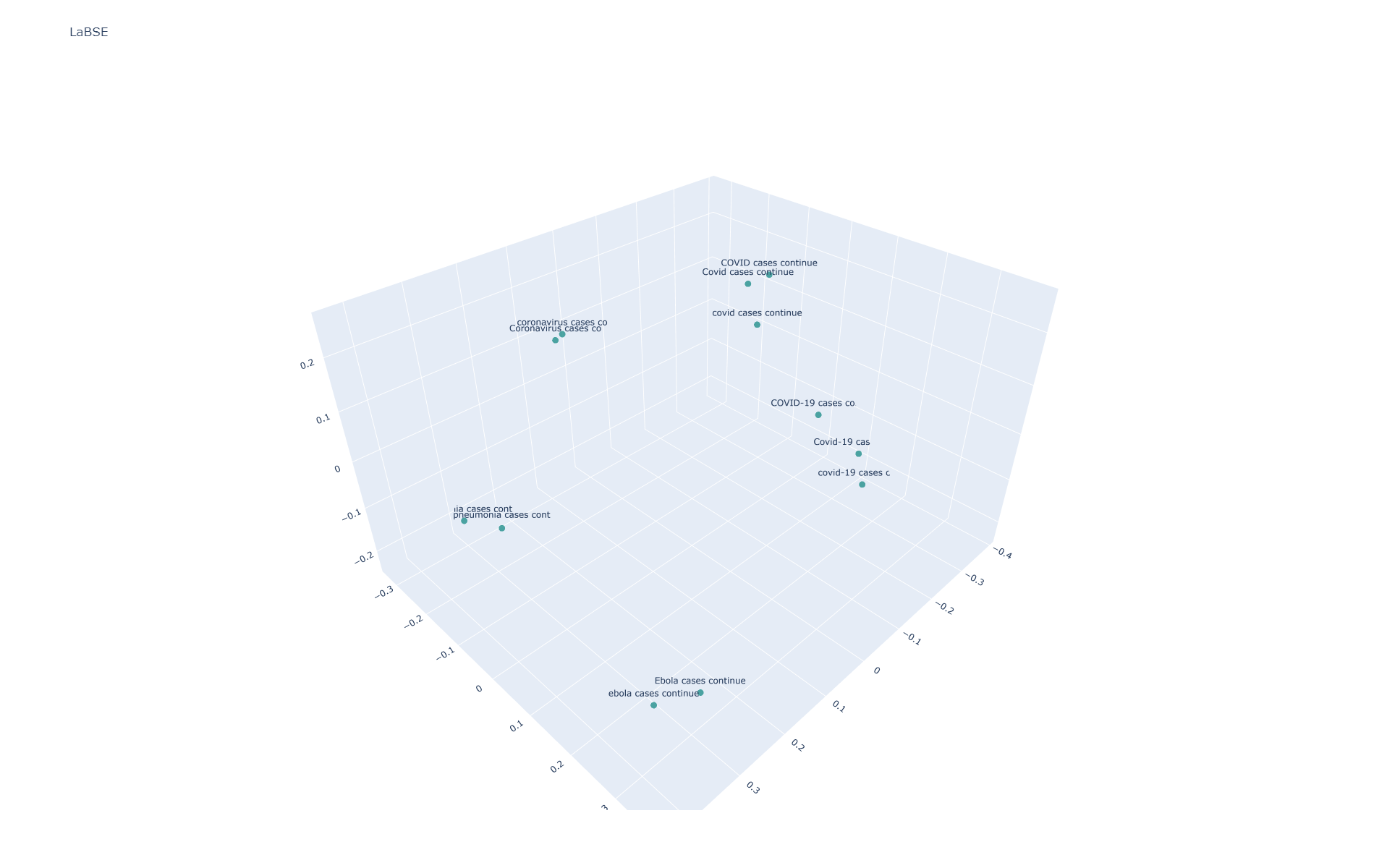

LaBSE

Performance here is even worse, with much broader improper stratification:

    [[0.9999999 0.90940624 0.9272418 0.8369644 0.90540624 0.7995325 0.82824385 0.84310925 0.6911467 0.7269058 0.6598303 0.64767486]
     [0.90940624 1. 0.82524014 0.95265114 0.8172855 0.9266573 0.7909525 0.7953573 0.6808177 0.7201351 0.64769185 0.5902961 ]
     [0.9272418 0.82524014 1. 0.83504355 0.9578215 0.79350495 0.85280937 0.84938186 0.7274875 0.7477082 0.71365917 0.722188 ]
     [0.8369644 0.95265114 0.83504355 1.0000002 0.8107048 0.96431947 0.74259585 0.7378358 0.6480083 0.6863828 0.63517994 0.5880841 ]
     [0.90540624 0.8172855 0.9578215 0.8107048 0.99999976 0.83674204 0.82521784 0.838705 0.7164506 0.777403 0.71339566 0.75329953]
     [0.7995325 0.9266573 0.79350495 0.96431947 0.83674204 1. 0.7160311 0.72053707 0.638822 0.6958891 0.6325657 0.60617477]
     [0.82824385 0.7909525 0.85280937 0.74259585 0.82521784 0.7160311 1. 0.9733484 0.8326554 0.82846165 0.7392101 0.6904469 ]
     [0.84310925 0.7953573 0.84938186 0.7378358 0.838705 0.72053707 0.9733484 1. 0.8107335 0.8395932 0.73116475 0.71467686]
     [0.6911467 0.6808177 0.7274875 0.6480083 0.7164506 0.638822 0.8326554 0.8107335 0.9999999 0.9275176 0.67925185 0.6868994 ]
     [0.7269058 0.7201351 0.7477082 0.6863828 0.777403 0.6958891 0.82846165 0.8395932 0.9275176 1.0000002 0.6961761 0.7549248 ]
     [0.6598303 0.64769185 0.71365917 0.63517994 0.71339566 0.6325657 0.7392101 0.73116475 0.67925185 0.6961761 1. 0.91201377]
     [0.64767486 0.5902961 0.722188 0.5880841 0.75329953 0.60617477 0.6904469 0.71467686 0.6868994 0.7549248 0.91201377 0.99999994]]