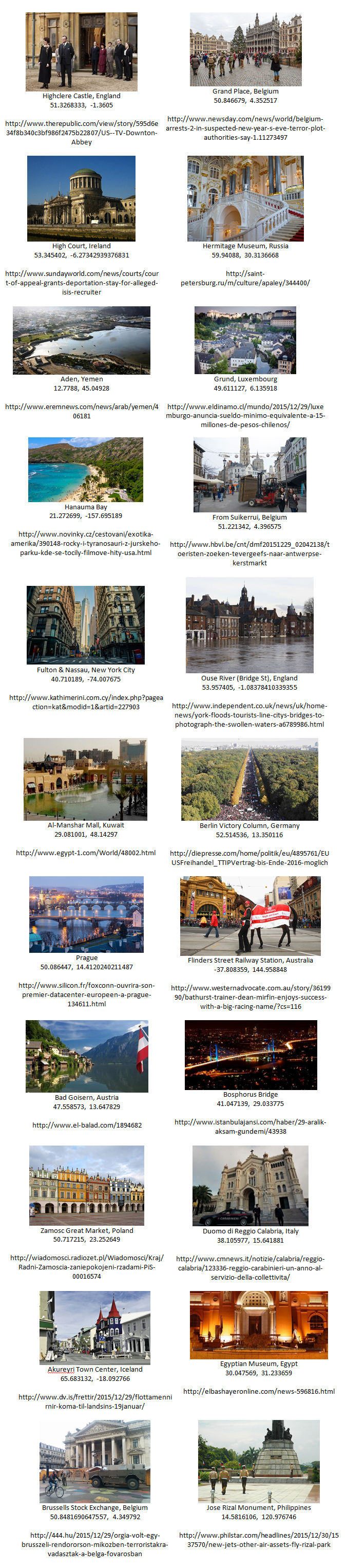

Having processed over 200,000 images from the world's news media over the last two days as we ramp up to our public alpha deployment of the Google Cloud Vision API in GDELT, we wanted to share some of the early results. One of the features we are incredibly excited about is the Cloud Vision API's ability to recognize the geographic location of an image based purely on what the image depicts. It does not rely on EXIF or other metadata, on captions or other text, or on any other contextual information, which means it can process any image from anywhere.
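For readers who want to experiment with this themselves, here is a minimal sketch. It assumes that these location estimates come from the Cloud Vision API's landmark detection feature (`LANDMARK_DETECTION`), and that results are retrieved as the JSON body returned by the REST `images:annotate` endpoint. In that body, each entry in `landmarkAnnotations` carries a `description`, a confidence `score`, and one or more `locations[].latLng` coordinates. The sketch only parses such a response and ranks the candidate locations by score. The helper names (`LandmarkGuess`, `extract_landmarks`) and the sample response are hypothetical, and the sample values are illustrative, not actual GDELT results.

```python
import json
from typing import List, NamedTuple


class LandmarkGuess(NamedTuple):
    """One candidate location for an image (hypothetical helper type)."""
    description: str
    score: float
    latitude: float
    longitude: float


def extract_landmarks(response_json: str) -> List[LandmarkGuess]:
    """Flatten an images:annotate JSON response into location guesses.

    Assumes the REST response shape:
    {"responses": [{"landmarkAnnotations": [{"description", "score",
      "locations": [{"latLng": {"latitude", "longitude"}}]}]}]}
    """
    data = json.loads(response_json)
    guesses: List[LandmarkGuess] = []
    for resp in data.get("responses", []):
        for ann in resp.get("landmarkAnnotations", []):
            # A single annotation may list several coordinates; keep each one.
            for loc in ann.get("locations", []):
                lat_lng = loc.get("latLng", {})
                guesses.append(
                    LandmarkGuess(
                        description=ann.get("description", ""),
                        score=float(ann.get("score", 0.0)),
                        latitude=float(lat_lng.get("latitude", 0.0)),
                        longitude=float(lat_lng.get("longitude", 0.0)),
                    )
                )
    # Most confident location estimate first.
    guesses.sort(key=lambda g: g.score, reverse=True)
    return guesses


# Hypothetical response with made-up values, for illustration only.
SAMPLE = """{"responses": [{"landmarkAnnotations": [
  {"description": "Hypothetical Beach", "score": 0.31,
   "locations": [{"latLng": {"latitude": 10.5, "longitude": -20.25}}]},
  {"description": "Hypothetical Plaza", "score": 0.87,
   "locations": [{"latLng": {"latitude": 40.0, "longitude": 15.75}}]}
]}]}"""

if __name__ == "__main__":
    for guess in extract_landmarks(SAMPLE):
        print(f"{guess.description}: ({guess.latitude}, {guess.longitude}) score={guess.score}")
```

Sorting by `score` is a simple design choice: when the API returns several candidate landmarks, the highest-confidence one is the natural single "estimated location" for an image, while the rest remain available for review.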

Below we present a brief sample of 22 images found in worldwide news articles over the last 48 hours that depict scenes from around the globe. Images include interiors, front doors, sweeping panoramas, tourist snapshots, and everyday life. In each case the Cloud Vision API correctly identified where the image was taken, even from the background of everyday snapshots, or when the location in question was partially underwater from flooding or blocked by vehicles and pedestrians. Even images of entranceways and cropped cutaways of structures were enough for the API to correctly estimate the location.

What is so remarkable about the images below is the diversity of locations, scenes, angles, lighting, and background life, and the ability of the API to see through all of that to correctly recognize the location. Perhaps most striking of all is the ability of the API to draw on the world's imagery to take an image of an anonymous beach or entranceway and pick it out from every beach and entranceway on the planet. No human, or even team of humans, could recognize such a diversity of locations from across the entire world, yet for the deep learning algorithms behind the Cloud Vision API, recognizing each of the images below took just 2-3 seconds.

This is the future of the deep learning revolution.