One of the most fascinating aspects of seeing the world at GDELT's scale is the ability to explore how current approaches to machine understanding see the world, and the algorithmic, methodological, data and workflow challenges we must overcome to build machines that can freely interpret and reason about the chaotic, conflicting cacophony that is our global world.

For example, while exploring how the word "dog" has been used in worldwide English news coverage over the past three and a half years using GDELT's new Web News Part Of Speech Dataset, we came across a fascinating example that illustrates how the future integration of external world knowledge will change how natural language systems "see" the world.

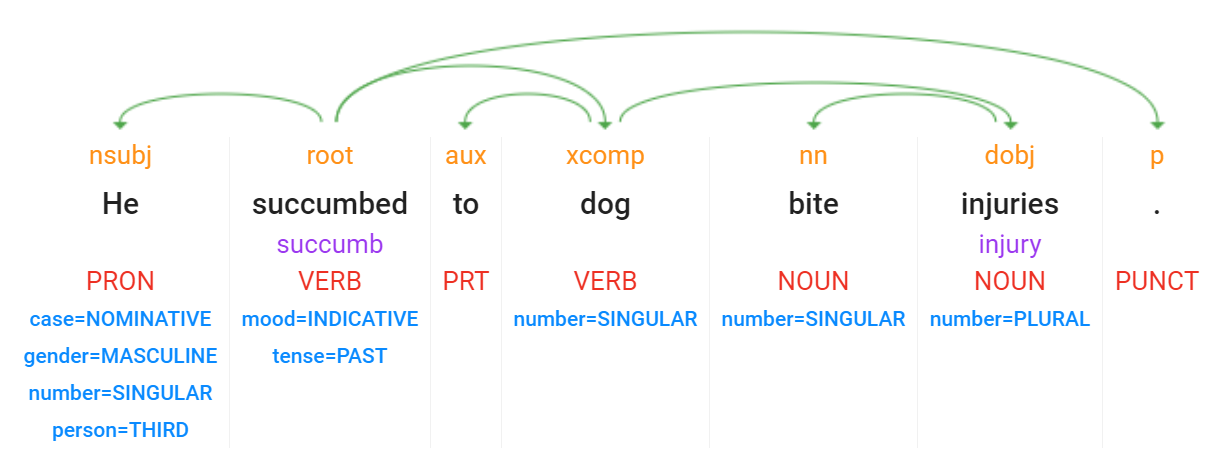

Take the sentence "He succumbed to dog bite injuries." Most native English speakers would understand that sentence as describing a person who was bitten by a canine and died from those injuries. The word "dog" would be understood as part of the phrase "dog bite," referring to a canine-inflicted injury. Instead, the Cloud Natural Language API parses the sentence with "dog" tagged as a verb.

How can "dog" be a verb in this case? The "to" preceding "dog" can suggest the infinitive "to dog," meaning to pursue or plague, as in "the scandal seems destined to dog him on his path to the White House."

In this case, the API interpreted the sentence as describing an individual who gave up and began pursuing and causing problems for bite injuries. Grammatically, this is a valid interpretation of the sentence. It is representative of a broader class of linguistic challenges in both human and machine understanding, given the infinite creativity of human expression and the limitless opportunities for ambiguity and multiple interpretations of language.

Most humans would have trivially understood this sentence to reference a canine-inflicted injury rather than the action of bothering bite injuries. The difficulty lies in the machine's lack of semantic understanding of the concept of a canine, canine injuries, succumbing to injury and the like. Humans use their understanding of dog bites and their lived experience of seeing the word "dog" as almost always referring to a canine in order to see the sentence as a death from a canine attack rather than an individual pestering bite injuries.

Today's NLP systems learn from vast quantities of human-annotated training data, meaning they must see large numbers of high-quality examples in order to learn a particular grammatical construct. Absent sufficiently differentiating examples, today's correlation-driven deep learning struggles to abstract from its literal training data towards the higher-order semantic understanding required to fully understand human communication.

Interestingly, the API's entity extraction engine did correctly identify "dog bite injuries" as a common noun. This suggests that had the two models been paired, identifying "dog" as part of that noun phrase would have allowed the dependency parse to see the word as a noun as well.
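To make the idea of pairing the two models concrete, here is a minimal sketch of one way such a reconciliation step might look. It is not how the Cloud Natural Language API works internally, and it does not call the API. The `Token` and `EntitySpan` types, the `reconcile_pos_with_entities` helper and the tag names are all hypothetical, and the tags in the example are hand-written for illustration: only the "dog as a verb" tag and the common noun entity come from the case described above.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Token:
    text: str
    pos: str  # hypothetical tag names, e.g. "NOUN", "VERB"


@dataclass(frozen=True)
class EntitySpan:
    start: int  # index of the first token in the entity
    end: int    # index one past the last token in the entity
    kind: str   # "COMMON" or "PROPER" (hypothetical labels)


def reconcile_pos_with_entities(tokens: List[Token],
                                entities: List[EntitySpan]) -> List[Token]:
    """Retag verbs that fall inside a common noun entity span as nouns.

    Assumption: when the entity extractor sees a span as a common noun,
    a VERB tag inside that span is more likely a tagging error than a
    genuine verb. This is a heuristic, not a general rule of grammar.
    """
    fixed = list(tokens)  # leave the caller's list untouched
    for span in entities:
        if span.kind != "COMMON":
            continue
        for i in range(span.start, span.end):
            if fixed[i].pos == "VERB":
                fixed[i] = replace(fixed[i], pos="NOUN")
    return fixed


# Hand-written illustration of the sentence discussed above.
tokens = [
    Token("He", "PRON"),
    Token("succumbed", "VERB"),
    Token("to", "PRT"),
    Token("dog", "VERB"),      # the tag reported in the example above
    Token("bite", "NOUN"),
    Token("injuries", "NOUN"),
    Token(".", "PUNCT"),
]
entities = [EntitySpan(start=3, end=6, kind="COMMON")]  # "dog bite injuries"

result = reconcile_pos_with_entities(tokens, entities)
print([(t.text, t.pos) for t in result])
```

The sketch only touches tokens inside an entity span, so "succumbed" remains a verb while "dog" is retagged as a noun.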

Looking to the future, as deep learning moves from pattern recognition towards abstractive reasoning, machines will become better and better at understanding cases like this. In fact, one of the areas that GDELT is exploring is the ability to blend entity extraction and dependency parses with external world knowledge.

A particularly powerful dataset is the induced usage patterns from GDELT's vast catalog of more than two billion global news articles spanning 152 languages, within which an almost unimaginable array of world knowledge is encoded.
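As a rough illustration of what "induced usage patterns" could mean in practice, the sketch below tallies how often a word appears under each part-of-speech tag and reports the dominant tag with its share of observations. The function names are hypothetical and the observations are toy placeholders, not GDELT figures. A real system would draw them from a large tagged corpus.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple


def build_usage_prior(
    observations: Iterable[Tuple[str, str]]
) -> Dict[str, Counter]:
    """Count how often each word is observed with each part-of-speech tag."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for word, pos in observations:
        counts[word.lower()][pos] += 1
    return counts


def dominant_tag(prior: Dict[str, Counter],
                 word: str) -> Optional[Tuple[str, float]]:
    """Return (most common tag, share of observations), or None if unseen."""
    tag_counts = prior.get(word.lower())
    if not tag_counts:
        return None
    tag, n = tag_counts.most_common(1)[0]
    return tag, n / sum(tag_counts.values())


# Toy placeholder observations, invented purely for illustration.
observations = [("dog", "NOUN")] * 9 + [("dog", "VERB")]
prior = build_usage_prior(observations)

print(dominant_tag(prior, "dog"))
print(dominant_tag(prior, "cat"))
```

A prior like this could serve as a tie-breaker when a parse is grammatically valid but improbable, though deciding how much weight it should carry against the parser's own output is left open here.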

We are just beginning to scratch the surface of the kinds of powerful new ways in which GDELT can help us reimagine how we see the world around us.