One of the most amazing aspects of BigQuery is not only its incredible speed and scale but also its versatility. Most large-scale data analytics systems achieve their speed by imposing enormous sacrifices that severely constrain the kinds of analyses they can perform. Not so with BigQuery, which can perform almost unimaginably massive analyses with a single SQL query, limited only by the imagination of the analyst. If you can imagine it, BigQuery can answer it.

Eight years ago, when I created Culturomics 2.0, I used one of the academic world's most powerful shared-memory supercomputers to analyze tiny fragments of a 100-trillion-edge relationship graph. Even with NSF's largest SMP HPC system, vast amounts of bespoke code and extensive performance tuning, the project could investigate only a microscopic fraction of the full graph, akin to navigating a vast darkened city by penlight. In fact, I couldn't even hold any meaningful portion of the graph in memory or storage; instead, I had to reconstruct each subgraph on the fly as I sampled the full graph.

Fast forward to today and Google's BigQuery makes it possible to both construct and analyze terascale graphs with just a single SQL query.

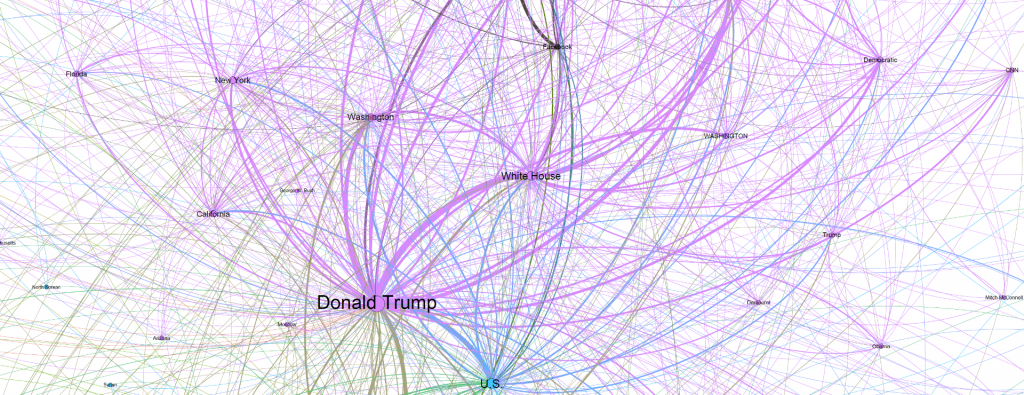

With just a single SQL query, BigQuery was able to transform an 11-billion-entity annotation dataset, computed by Google's deep learning-powered Cloud Natural Language API, into a co-occurrence graph encoding 1.1 trillion edges in just 6 hours.

In place of months of development, performance tuning and vast archives of bespoke HPC software, a brief snippet of SQL and just 6 hours of compute time were all BigQuery required to construct this 1.1-trillion-edge graph.
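To make the construction step concrete, here is a minimal Python sketch of co-occurrence graph building. It assumes, hypothetically, that the annotations are rows of `(doc_id, entity)` pairs; the actual SQL behind the graph above is not reproduced here. In SQL, one common way to express the same idea is a self-join of the annotation table on the document identifier, keeping only pairs where the first entity sorts before the second, then grouping by pair and counting. This sketch counts each pair at most once per document.

```python
from collections import Counter
from itertools import combinations


def build_cooccurrence_edges(annotations):
    """Build a weighted co-occurrence edge list.

    annotations: iterable of (doc_id, entity) tuples, a hypothetical stand-in
    for one row per entity annotation.
    Returns a Counter mapping (entity_a, entity_b), with entity_a < entity_b,
    to the number of documents in which both entities appear.
    """
    # Group entities by document; using a set ignores repeat mentions in a doc.
    entities_by_doc = {}
    for doc_id, entity in annotations:
        entities_by_doc.setdefault(doc_id, set()).add(entity)

    edges = Counter()
    for entities in entities_by_doc.values():
        # Each unordered pair of distinct entities in one document is an edge;
        # sorting first gives every pair a single canonical (a, b) orientation.
        for a, b in combinations(sorted(entities), 2):
            edges[(a, b)] += 1
    return edges


if __name__ == "__main__":
    rows = [
        ("doc1", "Google"), ("doc1", "BigQuery"), ("doc1", "NSF"),
        ("doc2", "Google"), ("doc2", "BigQuery"),
        ("doc3", "NSF"),
    ]
    edges = build_cooccurrence_edges(rows)
    print(edges[("BigQuery", "Google")])  # 2
```

Because a document with n distinct entities contributes n(n-1)/2 pairs, edge counts grow much faster than annotation counts, which helps explain how billions of annotations can yield a graph with over a trillion edges.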

A 3.8-billion-edge graph took BigQuery just 2.8 hours, again with a single SQL query, and the resulting graph was once again trivially analyzable in BigQuery with brief SQL queries.
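As an illustration of the kind of brief analysis query the text describes, the following hypothetical sketch finds an entity's strongest co-occurring neighbors in an edge list shaped like `{(entity_a, entity_b): weight}`. In BigQuery this would typically be a filter on the edge table followed by `ORDER BY` on the weight and a `LIMIT`; the Python below mirrors that logic and is not the author's query. The function name `top_neighbors` and the edge-list shape are assumptions.

```python
from collections import Counter


def top_neighbors(edges, entity, k=10):
    """Return up to k (neighbor, weight) pairs for `entity`, heaviest first.

    edges: mapping of (entity_a, entity_b) -> weight, with each unordered
    pair stored once (a hypothetical layout for the co-occurrence graph).
    """
    neighbors = Counter()
    for (a, b), weight in edges.items():
        # An edge touches `entity` from either side of the stored pair.
        if a == entity:
            neighbors[b] += weight
        elif b == entity:
            neighbors[a] += weight
    return neighbors.most_common(k)


if __name__ == "__main__":
    edges = {
        ("BigQuery", "Google"): 2,
        ("BigQuery", "NSF"): 1,
        ("Google", "NSF"): 1,
    }
    print(top_neighbors(edges, "Google"))  # [('BigQuery', 2), ('NSF', 1)]
```

Scanning every edge is fine for a sketch; at trillion-edge scale, this filtering and sorting is exactly the work BigQuery distributes automatically.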

In fact, even a set of exploratory large-scale analyses last week that examined more than half a petabyte took only a few SQL queries, with the enormously complex work of worker scheduling, coordination, data distribution and massively parallel joins all taken care of automatically by BigQuery. As far as the analyst is concerned, clicking the "Run" button to execute an SQL query is the end of their responsibilities: the actual work of figuring out how to execute that query tractably across potentially thousands or even tens of thousands of cores is handled entirely by BigQuery. This lets analysts focus on the questions they want to ask rather than on figuring out how to ask them.

We've reached a point today where even the largest analyses can be achieved with a single SQL query through BigQuery.