This study explores temporal knowledge graph reasoning:

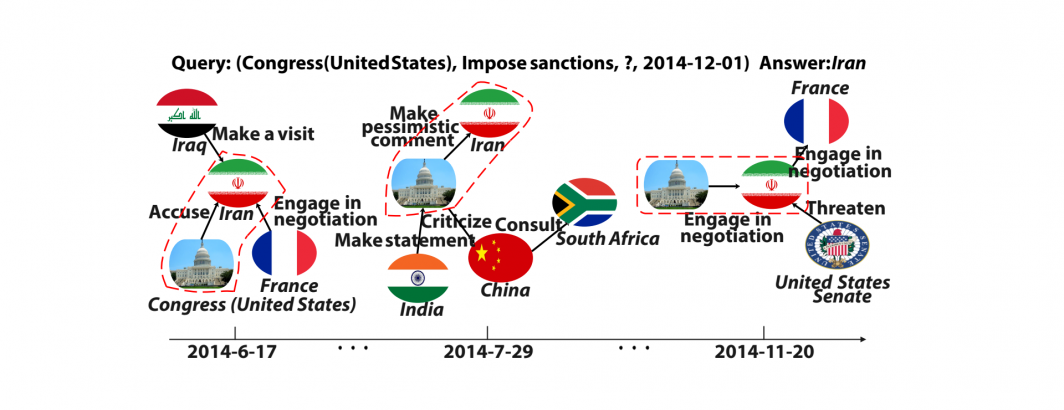

Temporal knowledge graph (TKG) reasoning, which infers missing facts in the future, is an essential and challenging task. Predicting a future event presupposes a narrative evolutionary process, composed of closely related historical facts, that supports the event’s occurrence; we call these facts fact precursors. However, most existing models reason sequentially in an auto-regressive manner, which cannot capture precursor information. This paper proposes a novel auto-encoder architecture that introduces a relation-aware graph attention layer into the transformer (rGalT) to accommodate inference over the TKG. Specifically, we first calculate the correlation between historical and predicted facts through multiple attention mechanisms along the intra-graph and inter-graph dimensions, then assemble these mutually related facts into diverse fact segments. Next, we borrow the idea of translation generation to decode, in parallel, the precursor information associated with the given query, which enables our model to infer unknown future facts by progressively generating graph structures. Experimental results on four benchmark datasets show that our model outperforms other state-of-the-art methods and that precursor identification provides supporting evidence for its predictions.
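
To make the two attention dimensions concrete, the following is a minimal toy sketch and not the rGalT implementation. It assumes facts are `(subject, relation, object, timestamp)` quadruples, uses hand-picked two-dimensional embeddings (`ENT`, `REL`) in place of learned ones, and scores facts with plain dot-product attention. All names, including `precursor_scores`, are hypothetical. Intra-graph attention weights the facts within each snapshot against the query, inter-graph attention weights the snapshots against each other, and the product of the two gives a rough relevance score for each historical fact.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a non-empty list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    return [x + y for x, y in zip(a, b)]


# Hypothetical toy embeddings (illustrative values, not learned).
ENT = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0]}
REL = {"visits": [0.5, 0.0], "criticizes": [0.0, 0.5]}

# Historical facts as (subject, relation, object, timestamp) quadruples.
HISTORY = [
    ("A", "visits", "B", 0),
    ("A", "criticizes", "C", 0),
    ("A", "visits", "C", 1),
]


def precursor_scores(query_subject, query_relation, history):
    """Score each historical fact about the query subject against the query."""
    q = add(ENT[query_subject], REL[query_relation])

    # Group the subject's facts by snapshot (timestamp).
    by_time = {}
    for fact in history:
        if fact[0] == query_subject:
            by_time.setdefault(fact[3], []).append(fact)
    if not by_time:
        return {}
    times = sorted(by_time)

    # Intra-graph: relation-aware attention over facts inside one snapshot.
    # Each key mixes the object and relation embeddings (an assumption).
    intra, summaries = {}, []
    for t in times:
        keys = [add(ENT[o], REL[r]) for (_, r, o, _) in by_time[t]]
        weights = softmax([dot(q, k) for k in keys])
        intra[t] = weights
        summaries.append(
            [sum(w * k[d] for w, k in zip(weights, keys)) for d in range(len(q))]
        )

    # Inter-graph: attention over the per-snapshot summaries.
    inter = softmax([dot(q, s) for s in summaries])

    # Combined score per fact: snapshot weight times within-snapshot weight.
    scores = {}
    for t, w_t in zip(times, inter):
        for fact, w_f in zip(by_time[t], intra[t]):
            scores[fact] = w_t * w_f
    return scores


scores = precursor_scores("A", "visits", HISTORY)
```

Because both attention steps are softmax-normalized, the combined scores form a probability distribution over the historical facts. A real model would learn the embeddings, use multi-head attention, and decode fact segments rather than rank individual facts.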