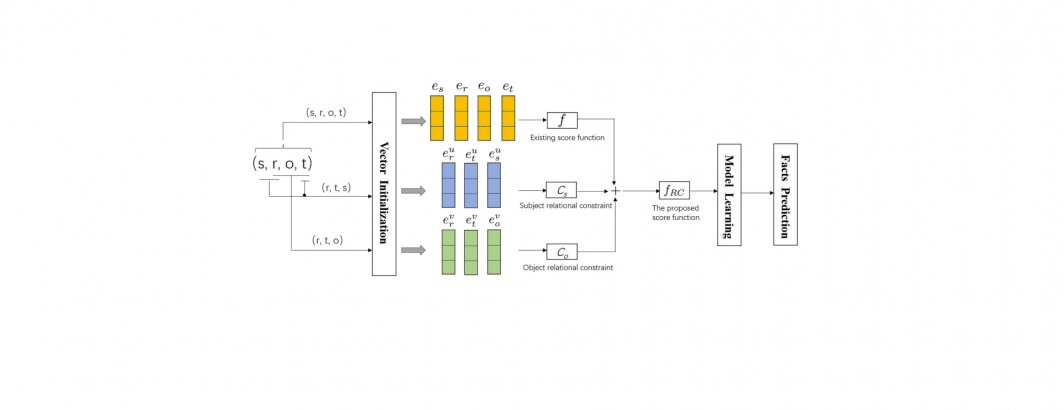

Temporal knowledge graphs (TKGs) have become an effective tool for numerous intelligent applications. Because TKGs are incomplete, TKG embedding methods have been proposed to infer missing temporal facts by learning latent representations of entities, relations and timestamps. However, these methods primarily focus on measuring the plausibility of a temporal fact as a whole, and ignore the semantic property that, at various time steps, there exists a bias between any relation and the entities it involves. In this paper, we present a novel temporal knowledge graph completion framework that imposes relational constraints to preserve this semantic property of TKGs. Specifically, we borrow ideas from two well-known transformation functions, namely tensor decomposition and hyperplane projection, and design relational constraints associated with timestamps. We then adopt a suitable regularization scheme for each specific relational constraint to combat overfitting and enforce temporal smoothness. Experimental studies indicate that our proposal outperforms existing baselines on the task of temporal knowledge graph completion.
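For readers unfamiliar with the two transformation families named above, the following is a minimal NumPy sketch of their generic forms, not the relational constraints proposed in this work (the abstract does not specify them). It assumes a real-valued CP-style (DistMult-like) decomposition that scores a quadruple by the element-wise product of head, relation, timestamp and tail embeddings; a HyTE-style projection of embeddings onto a timestamp-specific hyperplane; and a common temporal smoothness penalty on consecutive timestamp embeddings. All function names (`cp_score`, `project_to_hyperplane`, `hyperplane_score`, `temporal_smoothness`) are hypothetical.

```python
import numpy as np


def cp_score(h, r, tau, t):
    """Plausibility of (h, r, t, tau) under an assumed real-valued CP form.

    Sums the element-wise product of the four embeddings (a DistMult-style
    4-way decomposition, used here only for illustration).
    """
    return float(np.sum(h * r * tau * t))


def project_to_hyperplane(e, w):
    """Project embedding e onto the hyperplane whose normal vector is w (HyTE-style)."""
    w = w / np.linalg.norm(w)  # the normal must be unit length for this formula
    return e - np.dot(w, e) * w


def hyperplane_score(h, r, t, w_tau):
    """Translation score after projecting h, r, t onto the timestamp hyperplane.

    Returns the negative L2 norm of h' + r' - t', so higher means more plausible.
    """
    h_p, r_p, t_p = (project_to_hyperplane(x, w_tau) for x in (h, r, t))
    return -float(np.linalg.norm(h_p + r_p - t_p))


def temporal_smoothness(T):
    """Sum of squared differences between embeddings of consecutive timestamps.

    T has shape (num_timestamps, dim); penalizing this term encourages
    adjacent timestamps to have similar embeddings.
    """
    return float(np.sum((T[1:] - T[:-1]) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dim = 8
    h, r, t = rng.normal(size=(3, dim))  # hypothetical entity/relation embeddings
    T = rng.normal(size=(5, dim))        # hypothetical embeddings for 5 timestamps
    print(cp_score(h, r, T[0], t))
    print(hyperplane_score(h, r, t, T[0]))
    print(temporal_smoothness(T))
```

In a full model, a smoothness term of this kind would typically be added to the training loss with a weighting coefficient alongside a norm-based regularizer on the embeddings; the exact schemes paired with each constraint here are those described in the paper, not this sketch.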