Last night I went to AMC's early access screening of Death of a Unicorn (WARNING: spoilers below) and came away with questions about the meaning of the ending, specifically whether the two main characters live or die. After some quick web searches turned up no answers, I turned to the modern era of AI search overviews to see whether they could provide answers. Comparing Google's built-in AI Search Overview with Gemini 2.0 and ChatGPT, Google's Search Overview results vary wildly each time it is run, ranging from comically to utterly wrong depending on which sources the Overview's internal search turned up, while ChatGPT's and Gemini's vastly better search results yield a fairly accurate overall summary, if not an answer to the actual question posed. Remarkably, it takes six attempts for Google's AI Results to actually report on the correct movie at all, rather than mistakenly summarizing a 1982 animated film with a different name or an utterly unrelated Netflix murder drama. In contrast, Gemini 2.0 and ChatGPT exhibit markedly better sourcing that allows them to correctly report on the movie. Gemini 2.0 Flash correctly identifies the movie on its first attempt, but its summary of the movie is vastly poorer than ChatGPT's, which is likely related to the fact that it cites just two sources for its entire summary, rather than ChatGPT's considerable array of sources. These results reinforce the existential centrality of search quality to the AI revolution and how even the most advanced AI engines are utterly worthless without strong search engines underneath them.

In keeping with today's brief and informal mobile-first queries, the following prompt was used in all examples below:

death of a unicorn movie ending do they die

Google's AI Search Overview produced the following on its first attempt. Bizarrely, the summary has nothing to do with the movie or either of the listed sources. A closer look at the snippet text of the second result shows what happened: a sidebar snippet about Adolescence was incorrectly extracted by Google search as the text of the article. Instead of recognizing that the text had nothing to do with the title or query, Google used the title to determine relevance and then used the incorrectly extracted text to generate its summary.
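To make the missing sanity check concrete, here is a minimal sketch of the kind of test that would have caught this: before summarizing, confirm that both the title and the extracted body text share terms with the query. This is purely illustrative and not how Google's pipeline works; the function names, the stopword list, the 0.5 threshold, and the sample strings are all my own assumptions.

```python
import re

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"a", "an", "the", "of", "do", "they", "in", "and", "to", "is"}

def content_tokens(text: str) -> set:
    """Lowercase the text and keep alphanumeric words that are not stopwords."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOPWORDS}

def overlap(query: str, text: str) -> float:
    """Fraction of the query's content words that also appear in the text."""
    query_tokens = content_tokens(query)
    if not query_tokens:
        return 0.0
    return len(query_tokens & content_tokens(text)) / len(query_tokens)

def passes_relevance_check(query: str, title: str, extracted_text: str,
                           threshold: float = 0.5) -> bool:
    """Require the title AND the extracted body text to match the query.

    Checking only the title reproduces the failure described above: a
    relevant title paired with wrongly extracted sidebar text slips through.
    """
    return (overlap(query, title) >= threshold
            and overlap(query, extracted_text) >= threshold)

query = "death of a unicorn movie ending do they die"
# Hypothetical title and snippets, invented for illustration only.
title = "Death of a Unicorn ending explained"
sidebar_text = "Adolescence follows a teenage boy arrested for murder"
article_text = "A review of the unicorn movie and its ending"

print(passes_relevance_check(query, title, sidebar_text))  # False
print(passes_relevance_check(query, title, article_text))  # True
```

Plain word overlap is crude, and a production system would presumably use something far more robust, but even a check this simple flags the mismatch between a relevant title and unrelated extracted text.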

The second attempt conflates Death of a Unicorn with an entirely unrelated 1982 animated film called The Last Unicorn. While any human would recognize that a movie with an entirely different name and a release year more than forty years earlier is not relevant to the query, both the search engine and the generative AI system fail to understand the importance of these sourcing details:

Trying a third time yields an even more conflated result, merging The Last Unicorn and incorrectly extracted Adolescence together (Source 1, Source 2, Source 3):

A fourth time again centers on The Last Unicorn:

And the fifth:

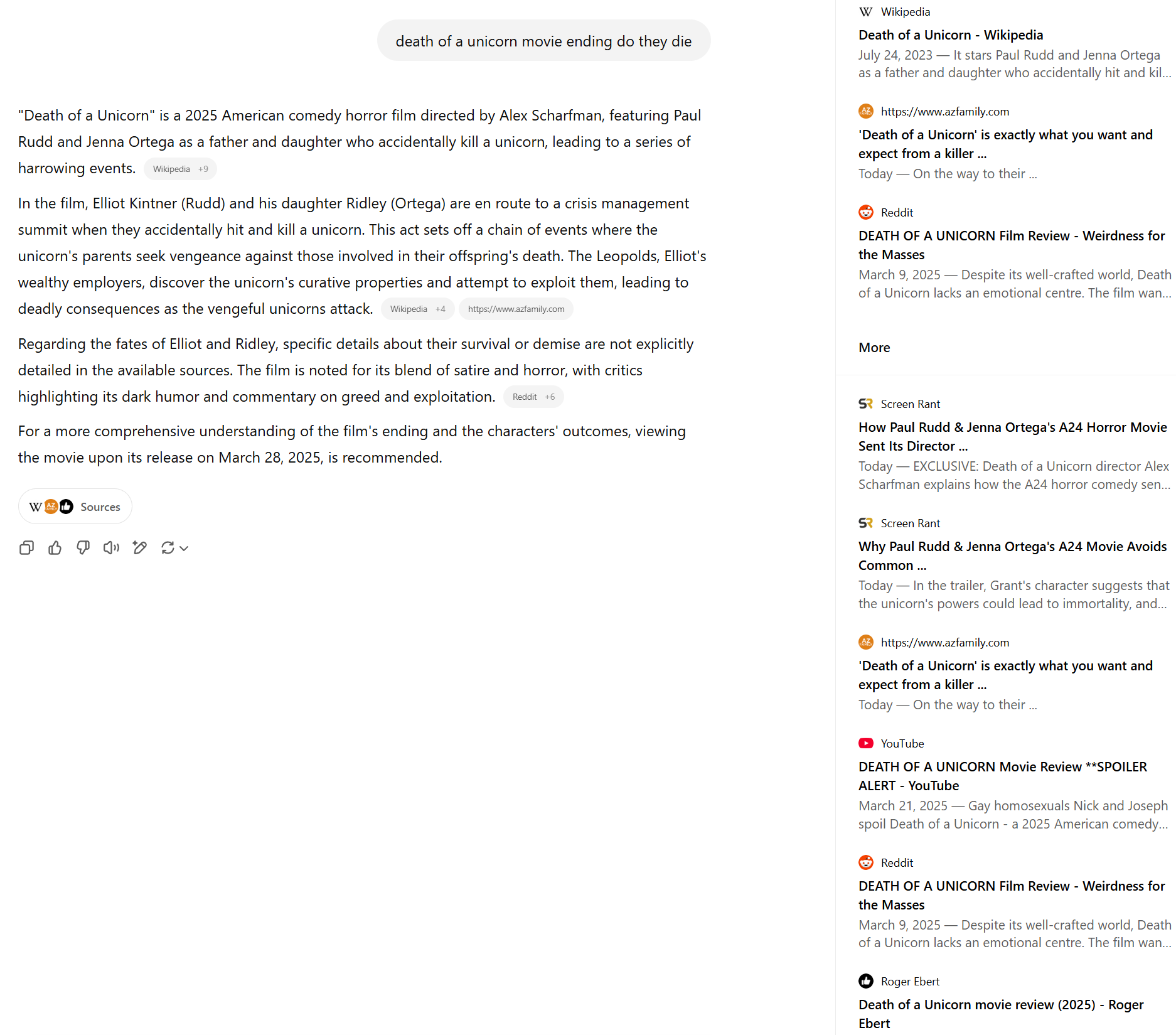

Finally, on the sixth try, Google's search engine provides at least one correct, relevant source to its summarization engine (though one is still unrelated). In this case the summary is inaccurate regarding the movie itself, but IS an accurate summarization of the source material provided.

In contrast, ChatGPT 4o correctly summarizes the movie's key details on the first attempt:

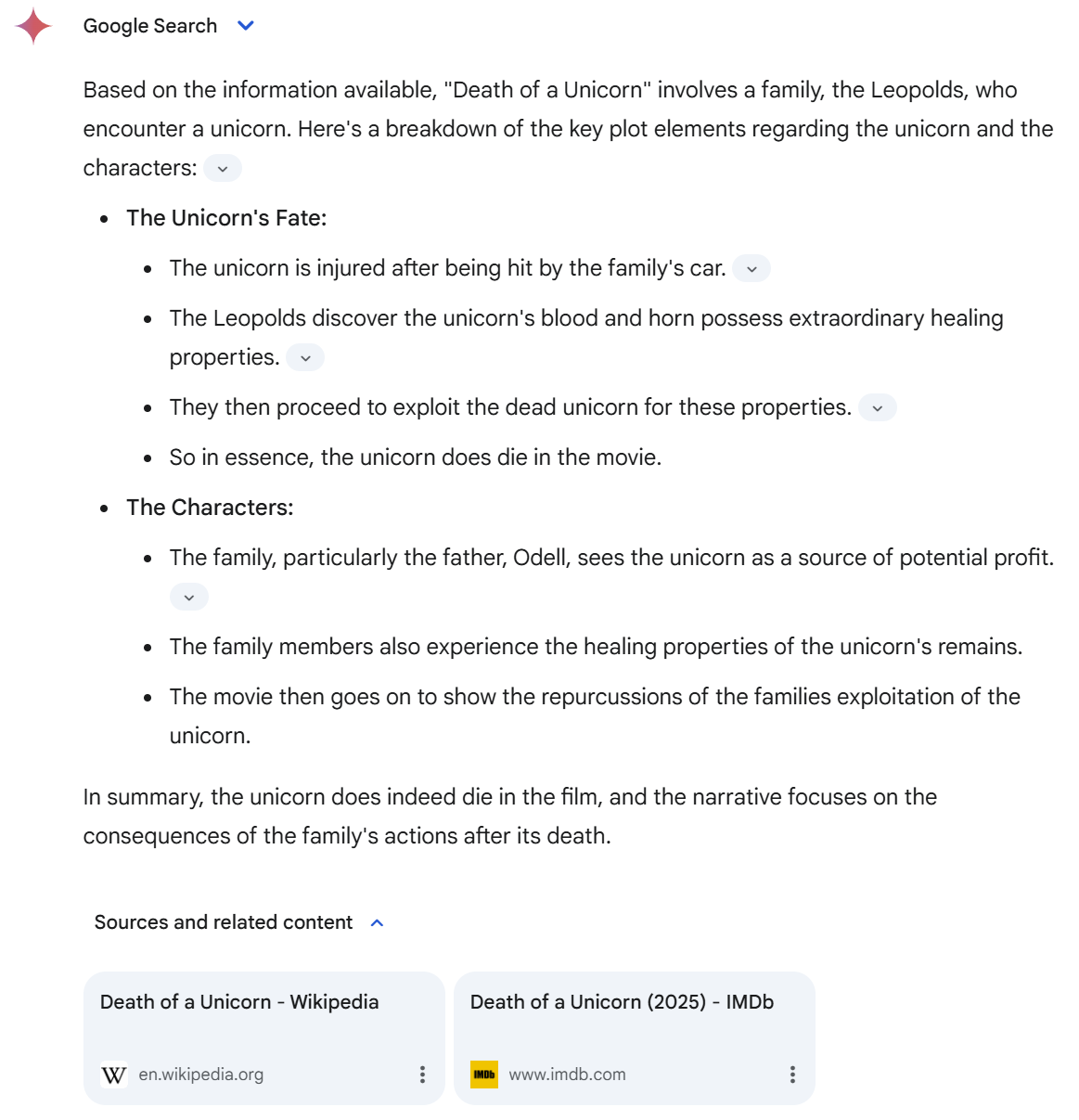

Gemini 2.0 Flash correctly identifies the movie on its first attempt, but its summary of the movie is vastly poorer than ChatGPT's, which is likely related to the fact that it cites just two sources for its entire summary, rather than ChatGPT's considerable array of sources: