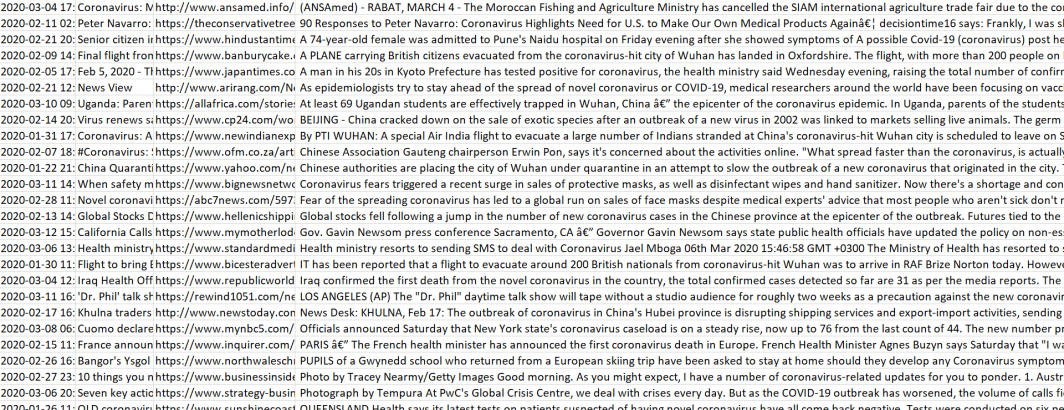

How is the world's news media covering the Coronavirus pandemic? Building on the massive television news narratives dataset we released yesterday, today we are releasing a powerful new dataset of the URLs, titles, publication dates and brief snippet of more than 1.1 million worldwide English language online news articles mentioning the virus to enable researchers and journalists to understand the global context of how the outbreak has been covered over the past two and a half months.

While you can track for yourself the latest global coverage (across the 65 languages GDELT monitors) using GDELT Summary and use the underlying DOC 2.0 API to build your own trackers and analytic pipelines, we wanted to build a comprehensive dataset that would enable large-scale study of the evolution of these media narratives.

To begin with, we have built a dataset of all English articles GDELT has monitored since 2015 (for snippets) or November 1, 2019 (for sentences) that contained "coronavirus" OR "covid-19" OR "corona virus" OR "sars-cov-2". We set the start time this early to make it possible to track earlier mentions of other coronaviruses and assess the baseline narrative around them.

There are two versions of the final dataset. They largely overlap, but were created through two very different workflows and thus there may be some differences between them.

The "150 Character Snippet" version was created by using a regular expression to identify the first mention of any of the keywords above in each article and then extract the mention along with up to 150 characters before and after the mention, for a total of up to 300 characters around the mention (mentions at the beginning or end of an article will obviously have a smaller window).

The "3 Sentence Snippet" version was created by taking one sentence containing one of the keywords from each article and combining it with the sentence before and after the matching sentence (sentences at the start or end of the an article will obviously lack a preceding/succeeding sentence). A best effort attempt was made to select the first matching sentence in each article, but the parallel workflow used to generate this dataset does not guarantee that.

The idea in creating two datasets is to test the finite 150-character-window snippet workflow, which is efficient to construct rapidly, compared with the 3-sentence snippet workflow that is more computationally demanding, to see whether the additional context is truly important for these kinds of narrative analyses.

- 150 Character Snippet Version. (207MB compressed / 618MB uncompressed)

- 3 Sentence Snippet Version. (302MB compressed / 811MB uncompressed)