For those interested in global-scale multimodal knowledge graphs that span text, speech, imagery and video and are constructed through both neural and statistical approaches, as well as in how to transform those vast annotation archives into actionable insights, we have a new hour-long talk that focuses specifically on GDELT's approach to knowledge graphs:

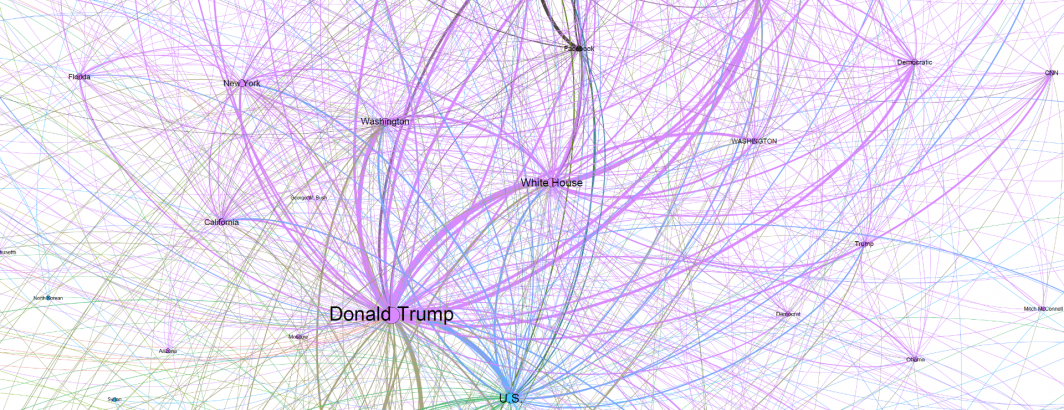

The GDELT Project (https://www.gdeltproject.org/) is one of the largest open datasets for understanding global human society, totaling more than 8.1 trillion datapoints spanning 200 years in 152 languages. From mapping global conflict and modeling global narratives to providing the data behind one of the earliest alerts of the COVID-19 pandemic, GDELT explores how data can let us see the world through the eyes of others and even forecast the future, capturing the realtime heartbeat of the planet we call home.

What does it look like to analyze, visualize and even forecast the world in realtime through the cloud’s vast array of AI and analytic offerings: sampling billions of news articles through Google’s Cloud Natural Language API, cataloging half a billion images through Cloud Vision, watching a decade of television news with Cloud Video Intelligence and annotating billions of words of speech through Cloud Speech-to-Text? From visual search to misinformation research to planetary-scale semantic and visual analysis, what does it look like to analyze the global news landscape through the eyes of today’s cloud AI? And how does one transform the resulting vast archives of JSON annotations into actionable insights and look across the many planetary-scale knowledge graphs they produce?