Since Typhoon Ruby last December, GDELT has provided a special live filtered stream of news coverage of natural and manmade disasters worldwide, in collaboration with OCHA, the Standby Task Force, and MicroMappers. One of those filtered feeds lists all of the images appearing in news reports about each disaster. That list is distributed via MicroMappers to a global team of volunteers, who sort the images into coarse topical and damage-intensity categories.

We've been actively searching for technologies that would reduce the need for so much human review. The ultimate goal is automated computer vision that could triage all of the incoming images: filter out photographs of politicians at podiums and images of relief workers, volunteers, or missing persons, and focus on the images that actually offer insight into the level of impact and damage across the affected area. Ideally, such a system would also separate images by the kind of damage, objects, and activities shown, and by whether each image is an aerial photograph or was captured from the ground.

Enter the Google Cloud Vision API, which applies Google's latest-generation deep learning image recognition algorithms to categorize the contents of arbitrary real-world images. GDELT is an early access user of the Cloud Vision API and is applying it to news imagery from around the world.
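For readers who want to try something similar, the sketch below shows roughly what a label request looks like. It assumes the current public v1 REST endpoint (`images:annotate` with the `LABEL_DETECTION` feature), which may differ from the early-access interface GDELT used. It only builds the JSON request body and parses a response dictionary; sending the request (with an API key or OAuth token) is left out, and the helper names `build_label_request` and `extract_labels` are hypothetical.

```python
# Public v1 endpoint of the Cloud Vision REST API (an assumption: the
# early-access version may have differed). Requests must be authenticated.
ANNOTATE_URL = "https://vision.googleapis.com/v1/images:annotate"


def build_label_request(image_uris, max_results=10):
    """Build an images:annotate request body asking only for labels."""
    return {
        "requests": [
            {
                "image": {"source": {"imageUri": uri}},
                "features": [{"type": "LABEL_DETECTION", "maxResults": max_results}],
            }
            for uri in image_uris
        ]
    }


def extract_labels(response):
    """Return one list of label descriptions per image, in request order.

    Images with no labels (or an error) yield an empty list.
    """
    return [
        [ann["description"] for ann in item.get("labelAnnotations", [])]
        for item in response.get("responses", [])
    ]
```

Each entry in `labelAnnotations` also carries a confidence `score`, which a real pipeline might use to drop weak labels before triage.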

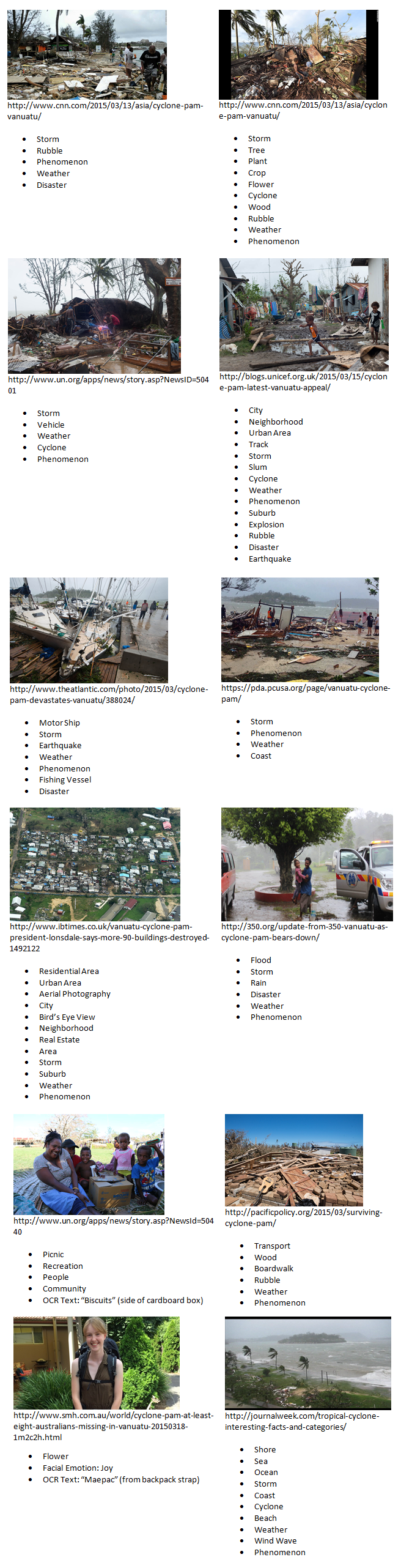

The montage below shows a selection of 12 images drawn from GDELT's monitoring of coverage of Vanuatu in the immediate aftermath of Cyclone Pam. It shows the output of the Cloud Vision API on real-world disaster images, just as it might be deployed to triage a live stream of arriving imagery. Under each image is the list of topical labels the Cloud Vision API applied to it. Remember that these labels are 100% automated – the image was passed to the Cloud Vision API, which returned this list of labels. Also keep in mind that the Cloud Vision API looks solely at the contents of each image – it has no information about the image's original filename or any of the text that might have appeared near it on the page. Despite this, it correctly classifies many of the images below as cyclone damage (others it identifies as an earthquake or an explosion, both of which can yield similar kinds of damage). Moreover, it assigns the label "Rubble" to many of the images showing the most loose, damaged material, allowing fairly accurate filtering down to just the images of heavy damage. It also correctly identifies damaged boats and aerial photography, filters out images showing only individual people and families, and even separates out images of just the storm and coastline that don't show any damage. It's easy to see how labels like these could be used to rapidly triage incoming imagery.
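As a rough illustration of that kind of triage, the sketch below bins an image using only its label list. The label groupings (`HEAVY_DAMAGE_LABELS`, `DAMAGE_LABELS`, and so on), the bin names, and the `triage` function itself are hypothetical choices for this example, loosely modeled on the labels described above; a real deployment would need to tune them against human-reviewed images.

```python
# Hypothetical label groupings, chosen for illustration only.
HEAVY_DAMAGE_LABELS = {"rubble"}
DAMAGE_LABELS = {"cyclone", "earthquake", "explosion", "disaster"}
PEOPLE_LABELS = {"person", "people", "family"}
AERIAL_LABELS = {"aerial photography"}


def triage(labels):
    """Assign an image to a coarse bin based solely on its labels.

    Labels are compared case-insensitively. Bins are checked from most to
    least severe, so an image labeled both "Rubble" and "Person" is treated
    as heavy damage rather than as a people-only image.
    """
    tags = {label.lower() for label in labels}
    if tags & HEAVY_DAMAGE_LABELS:
        bin_name = "heavy damage"
    elif tags & DAMAGE_LABELS:
        bin_name = "damage"
    elif tags & PEOPLE_LABELS:
        bin_name = "people only"
    else:
        # e.g. images of just the storm or coastline
        bin_name = "no visible damage"
    # Aerial vs. ground is tracked separately from the damage bin.
    return {"bin": bin_name, "aerial": bool(tags & AERIAL_LABELS)}
```

Ordering the checks by severity is a simple way to resolve images that match several groups; a scored or learned classifier over the full label set would be a natural next step.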