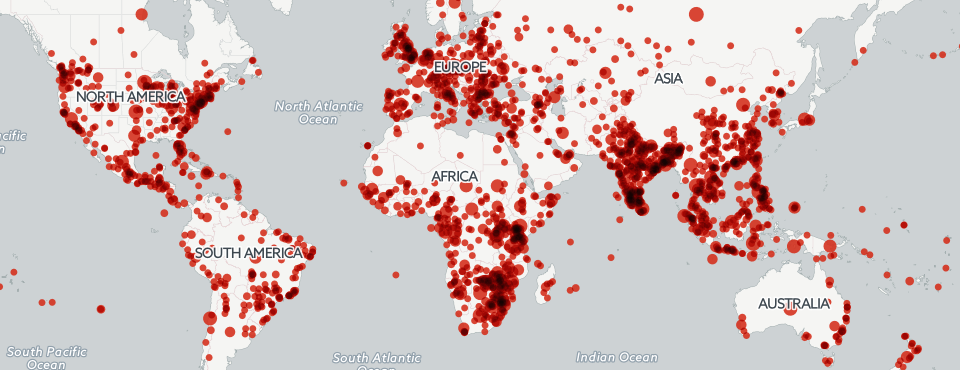

This past Saturday, on Halloween, the GDELT 2.0 Global Knowledge Graph reached 150 million articles in 65 languages from just the last eight months alone. The entire GKG 2.0 table now stands at 1.47 terabytes and growing rapidly. Those 150 million articles offer an incredibly rich view onto global cartography, containing 1.17 billion location references that can be used to georeference everything from world leaders to millions of themes and thousands of emotions.

When creating a map of a topic like MANPADs or poaching, you don't want to map every single location mentioned anywhere in the article. Instead, you want to place on the map only those locations that actually relate to the topic. In the case of the GKG, GDELT records the relative location within each article of all of the locations and themes mentioned therein and this information can be used as a primitive form of proximity affiliation, associating each theme with the location mentioned closest to it in the article. While imperfect and not taking into account semantic information from the article, such a simple proximity operation works surprisingly well, does not require access to the underlying text at query time, and allows for efficient and fast querying.

The problem is that performing this kind of associative linking is beyond the capability of SQL to express, meaning that historically one had to query BigQuery for matching articles, then download to your local computer the list of themes and locations from matching articles, which might be hundreds of gigabytes in size. You then had to run a PERL script over this downloaded file to perform the actual linking of themes and locations, collapse by location, construct an analytic window to limit to a maximum of 50 results by location, and so on. This ultimately was not a scalable solution and has become an increasingly limiting obstacle as GDELT has been used to map ever-larger and more complex topics.

Earlier this afternoon we unveiled a tutorial showcasing the use of BigQuery's new User Defined Function (UDF) capability, in which you can write arbitrarily complex JavaScript applications that run entirely within the BigQuery computing environment. Leveraging the power of the UDF environment, we were able to move all of the functionality of the PERL script entirely into the BigQuery environment, meaning that 100% of the processing pipeline, from initial query through final CSV file ready to load into CartoDB, is executed by BigQuery.

At first glance this might seem to simply be a nice technical achievement, a technical demonstration of the capabilities of a new technology. In reality, however, it represents something far more significant: a tectonic shift in the scalability of geographic analysis. The BigQuery platform today is being actively used by customers querying multiple petabytes of data or tens of trillions of rows in a single query, across tens of thousands of processors, with just a single line of SQL. This is data analysis in the BigQuery era – a world at "Google scale" in which multi-petabyte datasets are merely par for the course and where a single line of SQL can transparently marshal tens of thousands of processors as needed to complete a single query in seconds.

When coupled with massive geographic datasets like GDELT's 1.5TB Global Knowledge Graph, this capability enables an era of "terascale cartography" in which multi-terabyte datasets can be processed with complex georeferencing workflows in just tens of seconds with just a single query, and with the ability to transparently scale into the petabytes, collapsing the final results into a form that can be readily interactively visualized and explored. This is the future of mapping at infinite scale.