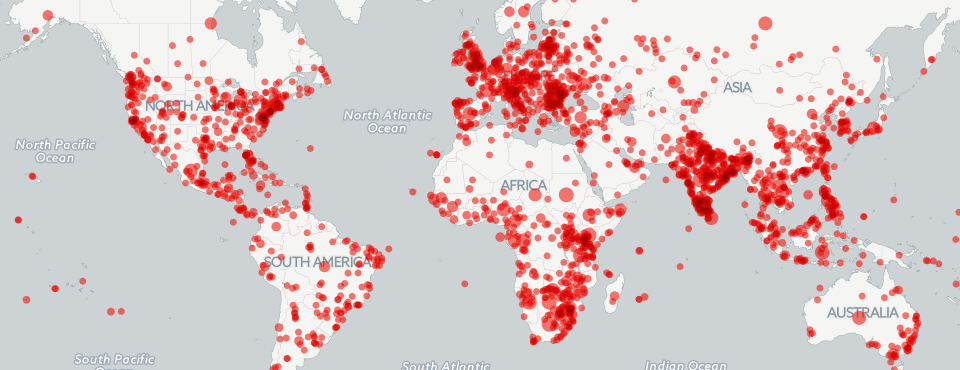

Despite being up less than 12 hours, we've already been getting some great feedback on our latest map series, which mapped global coverage over the last three months about Poaching/Wildlife Crime, Drones, and Cyber. We've gotten several emails already asking if we could post a quick tutorial on how to build your own maps that extend these to other topics, so here we go!

To build all three maps, we used the copy of the GDELT GKG 2.0 housed in Google BigQuery. While the raw GKG 2.0 CSV files are available for download beginning from February 19, 2015, they require a massive amount of disk and processing power to work with. The full GKG 2.0 dataset requires 166GB disk compressed and 573GB uncompressed, holding 56 million rows, with each row having several thousand possible columns if you expand the linearized fields like GCAM. Simply performing a grep across the complete set on a 16 core VM took over three hours. Attempting to interactively filter this much data is simply not feasible without standing up a small cluster.

Once again, its Google BigQuery to the rescue! Here we are going to use BigQuery as a simple (but massively powerful) filtering service. We're going to ask BigQuery to scan the entire GKG 2.0 dataset and return to us a subset of fields from all rows containing a specific set of themes. While BigQuery could actually do a lot of the advanced parsing for us, in this case we're just going to have it output rows for us to process in a subsequent PERL script.

NOTE before you proceed – the queries as written below will query and export a LOT of data and you will likely exceed your free monthly BigQuery quota if you aren't careful. At the very least you should add a "LIMIT 5000" or similar to the end of the queries to limit how much data they export.

For the poaching map, the following query was used:

SELECT DATE, DocumentIdentifier, SourceCommonName, V2Themes, V2Locations, V2Tone, SharingImage, TranslationInfo FROM [gdeltv2.gkg] where V2Themes like '%ENV_POACH%' and (V2Themes like '%ENV_POACH% ENV_POACH %' or V2Themes like '%SEIZE%' or V2Themes like '%ENVIR%' or V2Themes like '%BLACK_MARKET%' or V2Themes like '%ENV_SPECIESENDANGERED%' or V2Themes like '% SLFID_NATURAL_RESOURCES%')

It requires that all returned records include the ENV_POACH theme, but since poaching can be mentioned casually in an article not otherwise about poaching, the second half of the query requires the article to either mention ENV_POACH twice, or to mention it in context with one of a set of other related terms.

For the drone map, this query was used:

SELECT DATE, DocumentIdentifier, SourceCommonName, V2Themes, V2Locations, V2Tone, SharingImage, TranslationInfo FROM [gdeltv2.gkg] where V2Themes like '%DRONE%DRONE%'

It simply requires the DRONE theme to be mentioned twice in the article. And for the cyber map, this query was used:

SELECT DATE, DocumentIdentifier, SourceCommonName, V2Themes, V2Locations, V2Tone, SharingImage, TranslationInfo FROM [gdeltv2.gkg] where V2Themes like '%CYBER_ATTACK%CYBER_ATTACK%'

It simply requires the CYBER_ATTACK theme to be mentioned twice. Note that we do not include the WB_2457_CYBER_CRIME theme in this case, since it is far broader and tends to catch general discussion of passwords and computer authentication.

Each of these queries will generate a substantial results set, which is too large for BigQuery's web interface to display. Before you run the query, click on the "Show Options" button under the query textbox, then pick a “Destination Table” to output to a new table in your own account, then enable “Allow Large Results”. Once the export has completed, BigQuery will have created a new table in your account populated with the results. Then mouse over the table in the table listing on the left side of the page and hover over the entry and a dropdown arrow should appear at the right of the table name. Click this, choose “Export table” and provide a fully-qualified path to a directory in your Google Cloud Storage (GCS) account, along with a filename, and BigQuery will export the results to your GCS account. Make sure to delete the table in BigQuery when you are done. (NOTE these may incur additional charges to your Google Cloud account).

Download the results file to your local computer and delete it from your Google Cloud Storage account. Now download the "parsebqcsvtogeojson.pl" PERL script (you will need PERL installed on your computer). It processes the CSV export from BigQuery, computes which themes appear closest to each location mention in the article, and creates a hash table of every location in a given article and which themes appeared closest to it, which it then scans to find only those locations which appear in closest proximity to your theme in the article (using this as a proxy for likely relevance), and outputs a final GeoJSON file suitable for importing directly into CartoDB. NOTE that this PERL script is NOT very efficient, it was designed for ease-of-modification to offer a template upon which you can build to perform more sophisticated kinds of filtering and export of the GKG 2.0 file format.

To run the "parsebqcsvtogeojson.pl" PERL script, just run it as "perl ./parsebqcsvtogeojson.pl NAMEOFTHECSVFILEYOUDOWNLOADED YOURTHEME". It will create a new GeoJSON file with the filename of the input file with ".geojson" added to the end.

Now, upload this new GeoJSON file to your CartoDB account and use it to create your final map! Just create a new "Bubble" map, set the bubble size to the "numarts" field, use the "sharingimage" for the "image header" type infobox, set "urllinks" to display in the click infobox, and you're all set!