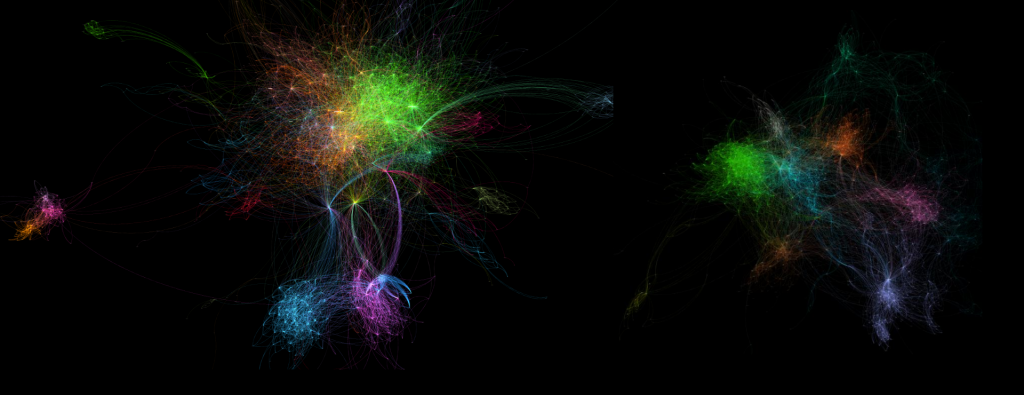

In April 2016 we began recording the list of all hyperlinks included in the body text of each article in the GDELT Global Knowledge Graph (GKG). Only links from the article itself are recorded (the myriad links in headers, footers, insets, ads, and so on are excluded). In essence, this dataset records the external sources of information that each news outlet deemed relevant or authoritative for the story at hand. Over the nearly three years since we began recording outlinks, we have archived 1.78 billion links from 304 million articles.

The GKG outlinks data provide powerful insights into the underlying reference graph of the global news industry, capturing the web sources each news outlet believed were sufficiently relevant or authoritative to link to from its stories.

Today we're releasing this massive 1.78-billion-link dataset as a domain-level graph. For the period April 22, 2016 through January 28, 2019, it records how many times each news outlet linked to URLs on any other domain (including subdomains of itself). To compute the dataset, each of the 1.78 billion outlink URLs was collapsed to its registered (registrable) domain using BigQuery's NET.REG_DOMAIN() function. Then all links from a given news outlet to URLs on a given domain were collapsed into a single record of the form "FromSite,ToSite,NumDays,NumLinks". NumDays is the number of distinct days on which the outlet linked at least once to that domain, and NumLinks is the total number of links (duplicates included) from the outlet to that domain. The dataset is in CSV format.
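To make the two aggregates concrete, here is a minimal Python sketch of the same collapse-and-count step over an in-memory list of (day, fromsite, url) records. It is only an illustration; the dataset itself was produced by the BigQuery query in the technical details. The helper `naive_reg_domain` is a hypothetical, deliberately simplified stand-in for NET.REG_DOMAIN(): it keeps just the last two hostname labels, so it mishandles multi-part suffixes such as "co.uk". Like the query, the sketch drops links whose domain equals the linking outlet, and the sample domains are made up.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def naive_reg_domain(url):
    """Hypothetical, simplified stand-in for BigQuery's NET.REG_DOMAIN().

    Keeps only the last two labels of the hostname, so it is wrong for
    multi-part public suffixes such as "co.uk" (the real function consults
    the public suffix list). Returns None when no usable hostname exists.
    """
    host = urlsplit(url).hostname
    if not host or "." not in host:
        return None
    return ".".join(host.split(".")[-2:])


def build_domain_graph(records):
    """Collapse (day, fromsite, url) link records into domain-level edges.

    Returns {(fromsite, tosite): (numdays, numlinks)}: numdays counts the
    distinct days with at least one link, numlinks counts every link,
    duplicates included. Self-links are dropped, as in the query.
    """
    days = defaultdict(set)   # edge -> set of days with at least one link
    links = defaultdict(int)  # edge -> total number of links
    for day, fromsite, url in records:
        tosite = naive_reg_domain(url)
        if tosite is None or tosite == fromsite:
            continue
        days[(fromsite, tosite)].add(day)
        links[(fromsite, tosite)] += 1
    return {edge: (len(days[edge]), links[edge]) for edge in links}


# Illustrative records (hypothetical domains).
records = [
    ("20190101", "outlet.example", "https://www.agency.example/briefing"),
    ("20190101", "outlet.example", "https://www.agency.example/briefing"),
    ("20190102", "outlet.example", "https://agency.example/other"),
    ("20190102", "outlet.example", "https://blog.outlet.example/post"),
]
print(build_domain_graph(records))
# {('outlet.example', 'agency.example'): (2, 3)}
```

The duplicate link on 20190101 counts twice toward NumLinks but only once toward NumDays. The link to blog.outlet.example collapses to the outlet's own domain and is discarded.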

In all, there are 30,072,787 records, each corresponding to one of the roughly 30 million domain edges in the final connectivity graph.

One of the most useful ways to explore this dataset is to sort by the "NumDays" field to rank the top outlets linking to a given website or to another news outlet. Ranking on NumDays favors connections that persist over time and filters out momentary bursts, such as a major story that leads an outlet to run dozens of articles linking to an outside website for several days and then never link to that website again.
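A minimal Python sketch of that ranking follows. It assumes the download has been decompressed to a plain CSV whose four columns appear in the order FromSite,ToSite,NumDays,NumLinks. Because the post does not say whether the file begins with a header row, the reader skips any row whose NumDays value is not numeric. `read_edges` and `top_linkers` are hypothetical helper names, and the sample rows are made up.

```python
import csv
import io

FIELDS = ["FromSite", "ToSite", "NumDays", "NumLinks"]


def read_edges(f):
    """Stream edge rows from a file object; skips a header line if present."""
    for row in csv.DictReader(f, fieldnames=FIELDS):
        if not (row["NumDays"] or "").isdigit():
            continue  # header row or malformed line
        yield row


def top_linkers(rows, target, limit=10):
    """Rank the outlets linking to `target` by NumDays, highest first.

    NumLinks is used only to break ties between equal NumDays values.
    """
    matches = [r for r in rows if r["ToSite"] == target]
    matches.sort(key=lambda r: (-int(r["NumDays"]), -int(r["NumLinks"])))
    return [(r["FromSite"], int(r["NumDays"]), int(r["NumLinks"]))
            for r in matches[:limit]]


sample = (
    "FromSite,ToSite,NumDays,NumLinks\n"
    "outlet-a.example,agency.example,410,900\n"
    "outlet-b.example,agency.example,620,700\n"
    "burst.example,agency.example,3,1200\n"
    "outlet-a.example,other.example,50,60\n"
)
print(top_linkers(read_edges(io.StringIO(sample)), "agency.example"))
# [('outlet-b.example', 620, 700), ('outlet-a.example', 410, 900), ('burst.example', 3, 1200)]
```

Sorting on NumLinks alone would put burst.example first. Sorting on NumDays moves it to the bottom, which is the burst-filtering effect described above. Because `read_edges` is a generator, only the matching rows are held in memory.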

The entire dataset was created with a single line of SQL in Google BigQuery, taking just 64.9 seconds and processing 199GB.

- Download the GKG Outlink Domain Graph (396MB compressed / 986MB uncompressed)

TECHNICAL DETAILS

Creating this massive dataset required just a single SQL statement in BigQuery (note that this query is written in BigQuery Standard SQL):

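```sql
SELECT fromsite, tosite,
  COUNT(DISTINCT day) AS numdays,  -- distinct days with at least one link
  SUM(cnt) AS totlinks             -- total links, duplicates included (NumLinks)
FROM (
  -- links per (outlet, linked domain, day), self-links dropped
  SELECT fromsite, tosite, day, COUNT(1) AS cnt
  FROM (
    -- collapse each outlink URL to its registered domain
    SELECT day, fromsite, NET.REG_DOMAIN(link) AS tosite
    FROM (
      SELECT
        SUBSTR(CAST(DATE AS STRING), 1, 8) AS day,  -- YYYYMMDD prefix of DATE
        SourceCommonName AS fromsite,
        SPLIT(REGEXP_EXTRACT(Extras, r'<PAGE_LINKS>(.*?)</PAGE_LINKS>'), ';') AS tolinks
      FROM `gdelt-bq.gdeltv2.gkg_partitioned`
    ), UNNEST(tolinks) AS link
  )
  GROUP BY fromsite, tosite, day
  HAVING fromsite != tosite
)
GROUP BY fromsite, tosite
```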
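The innermost SELECT does most of the parsing work. For checking individual GKG rows locally, the sketch below is a rough Python equivalent: it applies the same regular expression to an Extras value and truncates DATE (a YYYYMMDDHHMMSS timestamp) to its first eight characters. The function names are hypothetical and the sample Extras string is illustrative only. One small difference is that BigQuery's SPLIT() keeps empty strings, which NET.REG_DOMAIN() maps to NULL and the HAVING clause then discards. This sketch drops empty strings immediately.

```python
import re

# Same pattern the query passes to REGEXP_EXTRACT: a lazy match between the tags.
PAGE_LINKS_RE = re.compile(r"<PAGE_LINKS>(.*?)</PAGE_LINKS>")


def page_links(extras):
    """Return the outlinks listed in one GKG Extras value, or [] if none."""
    match = PAGE_LINKS_RE.search(extras or "")
    if match is None:
        return []
    # Links are semicolon-delimited; skip empty entries (e.g. a trailing ';').
    return [link for link in match.group(1).split(";") if link]


def day_of(gkg_date):
    """First 8 characters of the GKG DATE value, i.e. YYYYMMDD."""
    return str(gkg_date)[:8]


extras = "<PAGE_LINKS>https://a.example/x;https://b.example/y</PAGE_LINKS>"
print(page_links(extras))
# ['https://a.example/x', 'https://b.example/y']
print(day_of(20190128123000))
# 20190128
```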